
H05: Universities and Research in Robotics


Australian National University


Offer Profile

The Robotic Systems Group in the Department of Information Engineering at the College of Engineering and Computer Science has a long-standing commitment to real-world robotics. It was founded in 1996 by Alex Zelinsky, who is currently the director of the CSIRO Information and Communication Technology (ICT) Centre.

Current research covers robust autonomous robots in several real-world scenarios, shape memory alloy actuators, rigid body dynamics, and distributed control and communication. Details are given on the individual project pages.

There are multiple ways in which you can become involved in the activities of our group, and there are always opportunities for student theses and projects. Honours, Masters and PhD applicants will find a challenging and demanding range of topics here that bring academic knowledge into contact with physical experiments.

The group also has a tradition of commercialization projects. To date, the largest company to have grown out of the group is Seeing Machines, which started in 2000 and today employs more than 50 people.

Product Portfolio

Serafina - Small Autonomous Submersibles


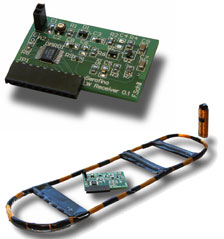

The Serafina project explores the potential of multiple small, fully autonomous, but organized submersibles.

Objectives

The Serafina project at the Australian National University explores the potential of multiple small, fully autonomous, but organized submersibles. Although each submersible is small and therefore offers only a limited payload, the full school of submersibles offers possibilities far beyond those of any individual vehicle.

Scientific goals:

- A school of submersibles that allows fault-tolerant, scalable coverage (in terms of exploration, searching, transportation, or monitoring) of ocean spaces, while:

  - The number of submersibles employed in such a school should be open-ended.

  - The functionality of individual submersibles should adapt to their current physical (relative) position in the group.

- Active and passive localization of individuals with respect to neighbouring submersibles, as well as of the whole group with respect to the environment.

- Dynamic communication and routing protocols that explicitly take into account, and adapt to, the current knowledge about the physical three-dimensional positions (including momentum and orientation) of individual stations.

- Bridging further gaps between current dynamical systems theory and the reality of actual sensor spaces and timing constraints.


The submersibles

Specifications (maximum ratings):

- Forward speed: > 1 m/s
- Vertical speed: > 0.5 m/s
- Roll speed: > 360°/s
- Pitch speed: > 180°/s
- Yaw speed: > 90°/s

Dimensions:

- Length of main hull: 400 mm
- Length overall: 455 mm
- Diameter of main hull: 100 mm
- Width overall: 210 mm
- Height overall: 140 mm

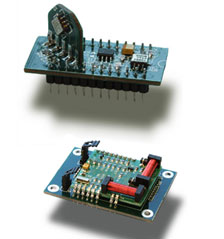

Sensor sub-systems

Linear acceleration sensors:

- Range (module): -1.2 to +1.2 g per axis (adjustable, max. ±2 g)
- Sensitivity (sensor, minimum): 140 mV/g
- Sensitivity (sensor, typical): 167 mV/g

Compass:

- Accuracy: 2°
- Resolution: 1°
- Sampling rate: 10 Hz
- Hard iron calibration
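Hard iron calibration compensates for magnetised material on the vehicle itself, which adds a constant offset to the measured magnetic field. A common approach, sketched below, is to turn the vehicle through at least one full circle, take the midpoint of the minimum and maximum reading on each horizontal axis as the offset, and subtract it before computing the heading. This is a minimal sketch under stated assumptions: the function names and sample values are hypothetical, the compass is assumed to report raw horizontal field components (x, y), the sensor is assumed level (no tilt compensation), and the heading sign convention depends on how the sensor is mounted.

```python
import math

def hard_iron_offset(samples):
    """Estimate the hard-iron offset from (x, y) field samples collected
    while the vehicle turns through at least one full circle.
    The offset is the midpoint of the min and max reading on each axis."""
    xs = [s[0] for s in samples]
    ys = [s[1] for s in samples]
    return ((max(xs) + min(xs)) / 2.0, (max(ys) + min(ys)) / 2.0)

def heading_deg(x, y, offset):
    """Heading in degrees, in [0, 360), from a level sensor reading after
    subtracting the hard-iron offset. Measured from the sensor's +x axis
    (assumed convention; real mountings may need a sign flip)."""
    cx = x - offset[0]
    cy = y - offset[1]
    return math.degrees(math.atan2(cy, cx)) % 360.0

# Hypothetical calibration run: a field circle of radius 30 (arbitrary
# units) whose centre has been shifted to (5, -3) by hard-iron effects.
samples = [(5.0 + 30.0 * math.cos(math.radians(a)),
            -3.0 + 30.0 * math.sin(math.radians(a)))
           for a in range(0, 360, 10)]
offset = hard_iron_offset(samples)
print(offset)
print(heading_deg(15.0, 7.0, offset))
```

Using min/max midpoints is the simplest option; it assumes the calibration turn covers the full circle and is sensitive to outliers, which is why least-squares circle or ellipse fits are often preferred in practice.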

Pressure sensor module:

- Measurable pressure: 5 psi (3.5 m diving depth)
- Maximum pressure: 20 psi (14 m diving depth)

Sonar sensor module:

- Base frequency: 200 kHz
- Sampling rate: 6 Hz
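The depth figures quoted for the pressure sensor follow from the hydrostatic relation h = p / (ρ g), and a sonar range follows from the round-trip echo time as d = c t / 2. The sketch below reproduces both under stated assumptions: the pressure is gauge pressure (relative to the surface), the density defaults to fresh water (1000 kg/m³, which matches the listed 3.5 m and 14 m figures), and the speed of sound defaults to a nominal 1500 m/s, which in reality varies with temperature and salinity. The function names are hypothetical.

```python
PSI_TO_PA = 6894.757  # pascals per psi
G = 9.80665           # standard gravity, m/s^2

def depth_from_gauge_pressure(psi, rho=1000.0):
    """Depth in metres from gauge pressure in psi, via h = p / (rho * g).
    rho defaults to fresh water; sea water is roughly 1025 kg/m^3."""
    return psi * PSI_TO_PA / (rho * G)

def sonar_range(echo_time_s, sound_speed=1500.0):
    """Distance to the reflecting target in metres from the round-trip
    echo time. The pulse travels out and back, hence the division by 2."""
    return sound_speed * echo_time_s / 2.0

print(round(depth_from_gauge_pressure(5), 2))   # 3.52
print(round(depth_from_gauge_pressure(20), 2))  # 14.06
print(sonar_range(0.01))
```

In sea water the same pressure corresponds to a slightly smaller depth, so the density parameter should match the operating environment.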

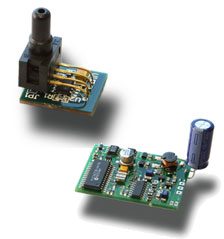

Long wave radio:

- Carrier frequency: 122 kHz
- Data rate: 1024-8192 bits/s
- Range: < 6 m
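To put the radio's data rate into perspective for the communication goals above, the raw airtime of a message is simply its size in bits divided by the bit rate. The sketch below assumes a hypothetical 64-byte message and ignores framing, preamble and protocol overhead, so real transfer times will be longer.

```python
def transmit_time_s(payload_bytes, bit_rate):
    """Raw airtime in seconds to send payload_bytes at bit_rate bits/s,
    ignoring framing, preamble and protocol overhead."""
    return payload_bytes * 8 / bit_rate

# Hypothetical 64-byte status message at both ends of the data-rate range.
for rate in (1024, 8192):
    print(rate, transmit_time_s(64, rate))  # 0.5 s at 1024, 0.0625 s at 8192
```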

Shape Memory Alloys

- Project Description:

This project aims to achieve fast, accurate motion and force control of actuators based on shape memory alloy (SMA). Wires made from SMA can be stretched easily when cool, but contract forcibly to their original length when heated. We use thin Flexinol™ wires, which are made of a nickel-titanium SMA called nitinol. As the wires can only pull, we usually use them in antagonistic pairs. The big challenge is that SMAs behave in a highly nonlinear manner and exhibit substantial hysteresis, which makes them very difficult to control. This project is therefore mainly about designing clever control systems that deliver fast, accurate motions and forces from these tricky SMAs.
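Hysteresis means that the actuator's output depends on the history of its input, not only on its current value. One textbook way to illustrate this (not necessarily the model used in this project) is the play, or backlash, operator, a building block of Prandtl-Ishlinskii hysteresis models: the output stays put until the input moves more than a threshold r away from it, and then follows the input at that distance. The function name and the threshold value below are illustrative assumptions.

```python
def play_operator(inputs, r, y0=0.0):
    """Backlash (play) operator: the output y stays put until the input x
    moves more than r away from it, then follows x at distance r.
    Returns the list of outputs for the given input sequence."""
    y = y0
    outputs = []
    for x in inputs:
        y = max(x - r, min(x + r, y))
        outputs.append(y)
    return outputs

# Drive the input from 0 up to 1 and back down to 0 in steps of 0.1.
up = [i / 10 for i in range(11)]
down = [i / 10 for i in range(10, -1, -1)]
out = play_operator(up + down, r=0.2)

# The same input value (0.5) yields different outputs on the way up
# (index 5) and on the way down (index 16): that gap is the hysteresis.
print(round(out[5], 3), round(out[16], 3))  # 0.3 0.7
```

A single play operator only captures a rectangular-like loop; weighted sums of many such operators with different thresholds are typically used to fit the smoother loops that real SMA wires show.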

Results:

- We have invented a new rapid heating algorithm that roughly doubles the speed of SMA actuators and also protects them from overheating.

- We have demonstrated that SMA can respond at frequencies of 1 kHz and higher by building an SMA loudspeaker. Yes, SMA really can respond at audio frequencies.

- We have obtained a gain/phase model of the high-frequency dynamics of SMA. According to this model, the phase response is independent of stress and strain.

- We have developed a force control system that is accurate to better than 1 mN in a ±3 N range.

Our SMA-actuated 2-DoF pantograph robot

The SMA loudspeaker

Our new testbed for force and motion control experiments

Smart Cars (In collaboration with: NICTA)

- Cars offer unique challenges in human-machine interaction. Vehicles are becoming, in effect, robotic systems that collaborate with the driver.

As automated systems become more capable, how best to manage the on-board human resources is an intriguing question. Combining the strengths of machines and humans while mitigating their shortcomings is the goal of intelligent-vehicle research.

With mobile computing already encroaching into vehicles, this project aims to develop intelligent systems and technologies that will truly aid the driver and enhance road safety.

The research is centred around road context awareness through computational vision and supplementary sensors. Road awareness is then combined with driver gaze monitoring to demonstrate holistic, intuitive driver support.

Experimental vehicle

Robust, real-time multi-cue, multi-hypothesis lane tracking.

Real-time stereo pedestrian detection.

Real-time stereo panoramic blind-spot monitoring.

Real-time stereo road object detection.

Real-time speed sign Driver Assistance System using gaze.

Road scene visual monotony detection.

Active Vision for road scene understanding.
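Several of these systems rely on stereo vision. For a rectified stereo pair, the depth Z of a point follows from its disparity d (the horizontal pixel offset between its positions in the left and right images), the focal length f in pixels and the baseline B between the cameras: Z = f·B/d. The sketch below is a generic illustration of that relation with made-up camera parameters; it is not the calibration or the method used in the experimental vehicle.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth in metres of a point seen by a rectified stereo pair, Z = f*B/d.
    Returns None for non-positive disparity (point at infinity or a bad match)."""
    if disparity_px <= 0:
        return None
    return focal_px * baseline_m / disparity_px

# Hypothetical camera rig: 800 px focal length, 0.3 m baseline.
for d in (48.0, 12.0, 4.0):
    print(d, depth_from_disparity(d, 800.0, 0.3))
```

Because Z is inversely proportional to d, a one-pixel disparity error matters far more for distant objects: the depth error grows roughly as Z²·Δd/(f·B), which is why wider baselines help for road scenes where objects of interest can be tens of metres away.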

Human-Machine Interface (old projects, completed more than 5 years ago)

- Stereo Tracking for Head Pose and Gaze Point Estimation

A system has been developed that tracks the pose of a person's head and estimates their gaze point in real time.

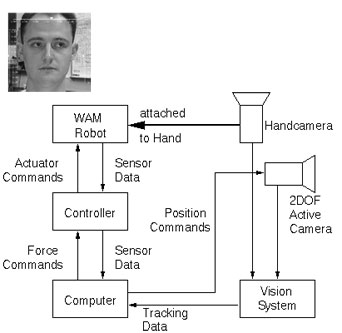

Visual Interface for Human-Robot Interaction

In this project, a visual interface is being developed that will allow visual and tactile interaction between a human operator and a robot arm. Key aspects of the project are real-time computer vision and open-loop force control for safe interaction between a manipulator and a person.

A Visual Interface for Human-Robot Interaction


Today's robots are rigid, insensitive machines used mostly in the manufacturing industry. Their interaction with humans is restricted to teach-and-play operations that simplify the programming of the desired trajectories. Once the robots are programmed, the gates to the robot cell must be shut and locked before they can begin their work. Safety usually means the strict separation of robots and humans. This is required because the robots lack sensors to detect humans in their environment, and because their closed-loop position control will use maximum force to reach the preprogrammed positions.

This mutual exclusion of humans and robots strongly limits the applications of robots. Robots are feasible only in areas where the environment can be totally controlled, and tasks must be achieved completely by the robot. Situations that require the decision-making and planning capabilities of a supervisor must not arise, since no human is present to provide such help.

Robotic systems designed to actually work together with humans would open up a wide range of applications, from supporting high-load handling systems in manufacturing and construction to systems dedicated to interaction with people, such as helping hands for the disabled and elderly. Complex tasks and non-repetitive action sequences that require supervision by human operators could be executed with the support of robots but guided by the operator (supervised autonomy). Such systems would need two main features that today's robots lack:

- a natural human-robot interface that allows the operator to interact with the robot in a "human-like" way, and

- sensors and adequate control strategies that allow the safe operation of the robot in the presence of humans in its workspace.

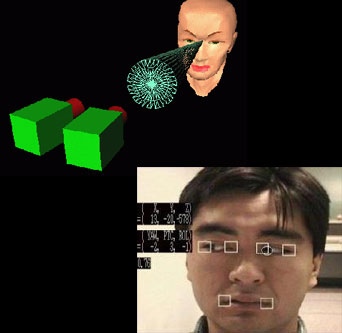

Stereo Face Tracking and Gaze Point Estimation

- Automatic tracking of the human face has been used for a variety of applications, such as identification and gesture recognition. We have developed a system which not only tracks the subject's face in real time, but also estimates where the subject is looking.

As people interact face-to-face on a daily basis, they are constantly aware of where the other person's eyes are looking; indeed, eye contact is a crucial part of effective communication. A computer that can 'read' our eyes and tell what we are looking at is a definite step towards more sophisticated human-machine interaction.

A vision system capable of tracking the human face and estimating the person's gaze point in real time offers many possibilities for enhancing human-machine interaction. A key feature of such a system is its entirely non-intrusive nature, which enables people to be observed in their 'natural' state. It requires neither special lighting shone on the subject nor any special devices worn by the subject.

Applications include:

- monitoring where drivers look while driving, to provide information for improved ergonomics and safer vehicle design.

- a computer mouse could be replaced by a vision system that moves the cursor to wherever the user looks on the screen; this would be particularly useful for people with disabilities.

- a robot could learn about a new environment from an instructor who simply looks at obstacles and identifies them.

- by observing operators' movements, work areas could be redesigned more ergonomically.

- a portable robotic video camera could be built to focus on and film wherever the operator looks.
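For applications such as replacing the mouse, an estimated gaze has to be turned into a point on a flat surface like a screen. Once the eye position and gaze direction are known, that point can be found by intersecting the gaze ray with the plane of the screen. The sketch below assumes the eye position, gaze direction and screen plane are already expressed in the same coordinate frame; the function name and numbers are hypothetical, and it does not show how the original system estimates head pose or gaze.

```python
def gaze_point_on_plane(eye, direction, plane_point, plane_normal):
    """Intersect the gaze ray eye + t * direction (t >= 0) with a plane.
    All vectors are 3-tuples in the same coordinate frame.
    Returns the 3D intersection point, or None if the ray is parallel to
    the plane or the plane lies behind the viewer."""
    def dot(a, b):
        return sum(ai * bi for ai, bi in zip(a, b))

    denom = dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        return None  # gaze runs parallel to the plane
    diff = tuple(p - e for p, e in zip(plane_point, eye))
    t = dot(diff, plane_normal) / denom
    if t < 0:
        return None  # plane is behind the viewer
    return tuple(e + t * d for e, d in zip(eye, direction))

# Hypothetical set-up: screen is the plane z = 0, eye 0.6 m in front of it,
# looking slightly right and down.
print(gaze_point_on_plane((0.0, 0.0, 0.6), (0.1, -0.05, -1.0),
                          (0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
```

Mapping the resulting 3D point to pixel coordinates would additionally require the screen's position, orientation and pixel pitch, which is a separate calibration step.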