- Offer Profile

- The goal of the MPLab is to develop systems that perceive and interact with humans in real time using natural communication channels. To this end, we are developing perceptual primitives to detect and track human faces and to recognize facial expressions. We are also developing algorithms for robots that develop and learn to interact with people on their own. Applications include personal robots, perceptive tutoring systems, and systems for clinical assessment, monitoring, and intervention.

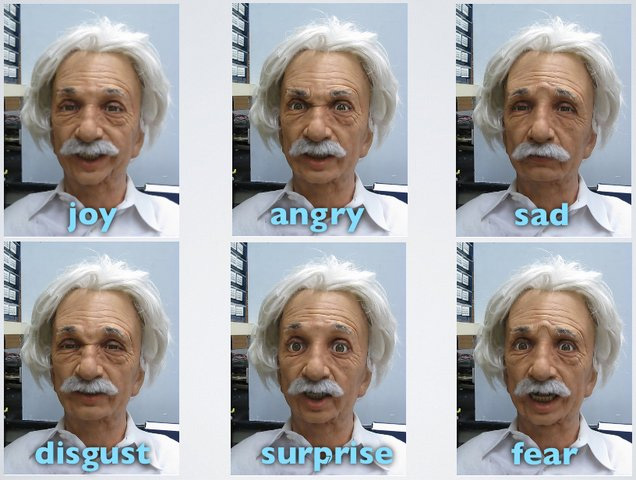

Learning to Make Facial Expressions: Robot Learns to Smile and Frown

- A hyper-realistic Einstein robot at the University of California, San Diego has learned to smile and make facial expressions through a process of self-guided learning. The UC San Diego researchers used machine learning to “empower” their robot to learn to make realistic facial expressions.

Finely designed robotic faces can make realistic facial expressions. However, manually tuning the facial motor configurations takes hours of work, even for experts.

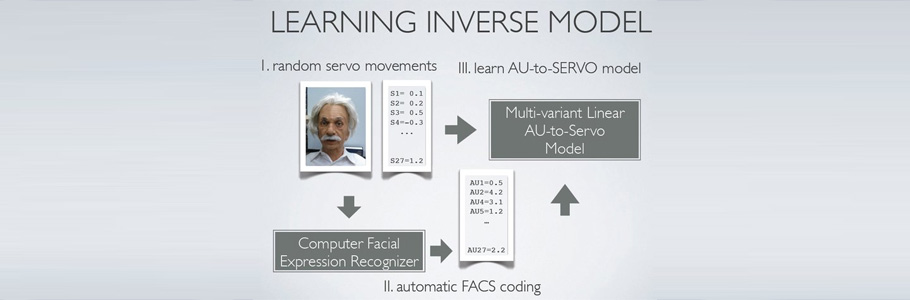

Is it possible for a robot to learn to make facial expressions automatically by looking at itself? At the MPLab, researchers have developed computer-vision-based facial expression recognition systems that empower the robot to understand the definition of facial expressions. In particular, we use the definition of Action Units (AUs) in the Facial Action Coding System (FACS). “Einstein” is then able to adjust his “facial muscles” to produce facial expressions by seeing his face in a mirror.

Manually Designed Expressions:

The faces of robots are increasingly realistic, and the number of artificial muscles that control them is rising. In light of this trend, UC San Diego researchers from the Machine Perception Laboratory are studying the face and head of their robotic Einstein in order to find ways to automate the process of teaching robots to make lifelike facial expressions.

This Einstein robot head has about 30 facial muscles, each moved by a tiny servo motor connected to the muscle by a string. Today, a highly trained person must manually set up these kinds of realistic robots so that the servos pull in the right combinations to make specific facial expressions. To begin automating this process, the UCSD researchers looked to both developmental psychology and machine learning.

Developmental psychologists speculate that infants learn to control their bodies through systematic exploratory movements, including babbling to learn to speak. Initially, these movements appear to be executed in a random manner as infants learn to control their bodies and reach for objects.