
- Our biomechatronics lab is dedicated to improving quality of life by enhancing the functionality of artificial hands and their control via human-machine interfaces.

Research areas

To this end, we study:

- Neuromuscular biomechanics of human grasp to elucidate patterns of reflex-like grip responses that can be used as inspiration for low-level reflexes in artificial hands

- Neuromuscular control of multiple digits during manipulation tasks

- Tactile sensor technology that can provide rich tactile feedback for use in real-time control of artificial fingertips

- Machine-learning algorithms that will enable the mapping of tactile sensor signals to features of finger-object interactions

- Control and sensory challenges for human-machine systems (e.g., brain-controlled neuroprostheses)

- Reducing cognitive burden via sensory-event driven, low-level reflex algorithms. Such artificial reflexes could serve as “survival instincts” which buy time for human operators of robotic devices to detect, process, and command a response to perturbations.

Our research is intended to advance the design and control of human-machine systems as well as autonomous robotic systems.

Example applications include:

- Prosthetic hands for improving the independence and quality of life of amputees

- Wheelchair-mounted robot hands for increasing the workspace, independence, and quality of life of wheelchair-bound individuals

- Autonomous or teleoperated manipulators for use in harsh or limited-access environments

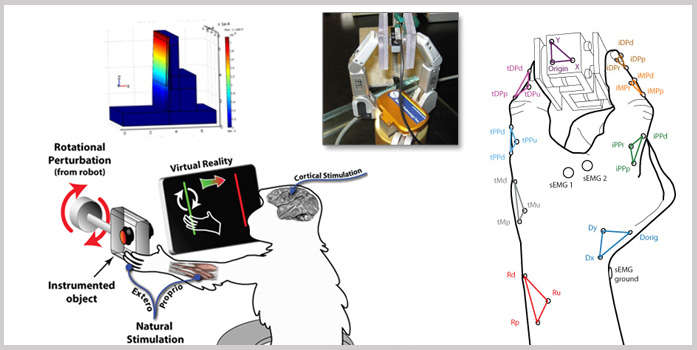

Human grip responses to rotational perturbations of grasped objects

- Experimental set-up: Motion and force data are collected using retro-reflective markers and 6-DOF load cells, respectively. Surface EMG records the response timing of the first dorsal interosseous muscle.

We are characterizing the reflex-like grip responses of the human hand to rotational disturbances of a grasped object. The goal is to better understand grip responses that require simultaneous coordination of adduction/abduction and flexion/extension across fingers. By better understanding patterned responses in humans, we can develop bio-inspired artificial reflexes to enhance the functionality of anthropomorphic robotic and prosthetic hands without adding to the user’s cognitive burden.
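Response timing from surface EMG, as in the set-up above, is often extracted with a simple baseline-threshold rule. The sketch below shows one such rule and is not the lab's documented pipeline. The helper name `emg_onset_time`, the 100 ms pre-perturbation baseline, the 25 ms moving-average window, the mean + 3 SD threshold, the 10 ms persistence requirement, and the 2 kHz sampling rate are all illustrative assumptions, and the test signal is synthetic.

```python
import numpy as np


def emg_onset_time(emg, fs, baseline_s=0.1, window_s=0.025, k=3.0, min_duration_s=0.01):
    """Estimate EMG onset time (s) with a baseline-threshold rule (hypothetical helper).

    emg: raw surface EMG samples; the first baseline_s seconds must precede the perturbation.
    Returns None if the envelope never stays above threshold for min_duration_s.
    """
    emg = np.asarray(emg, dtype=float)
    n_base = int(round(baseline_s * fs))
    rectified = np.abs(emg - emg[:n_base].mean())       # remove DC offset, full-wave rectify
    win = max(1, int(round(window_s * fs)))
    # Centered moving average; it can lead the true onset by up to win / 2 samples.
    envelope = np.convolve(rectified, np.ones(win) / win, mode="same")
    base = envelope[:n_base]
    threshold = base.mean() + k * base.std()
    above = envelope > threshold
    n_min = max(1, int(round(min_duration_s * fs)))
    for i in range(n_base, len(above) - n_min + 1):
        if above[i:i + n_min].all():                    # sustained crossing, not a noise spike
            return i / fs
    return None


# Synthetic check: 0.5 s of low-level noise with a burst starting at 0.25 s.
rng = np.random.default_rng(1)
fs = 2000                                               # assumed sampling rate, Hz
emg = 0.01 * rng.standard_normal(fs // 2)
emg[fs // 4:] += rng.standard_normal(fs // 4)
onset = emg_onset_time(emg, fs)
print("estimated onset (s):", onset)
```

Because the smoothing window is centered, the estimate can lead the true onset by up to half a window. A causal (trailing) filter avoids that bias but adds lag instead, so the choice depends on whether early or late bias is less harmful for the latency being measured.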

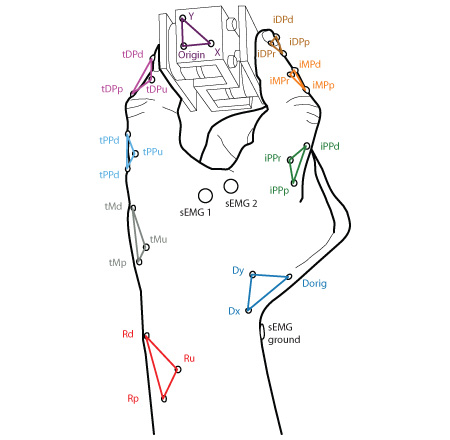

MEMS tactile sensor skin for artificial fingertips

- COMSOL simulation of one capacitive unit. The color bar indicates displacement in meters.

Exploring and manipulating unstructured environments via tactile feedback still presents many challenges. In collaboration with the ASU Micro/Nanofluidics Laboratory, we are developing a capacitance-based MEMS tactile sensor skin capable of detecting normal and shear forces as well as local vibrations. By arranging sensing units in an array and tuning the material properties of the polymer PDMS (polydimethylsiloxane) at different sites, we can obtain localized measurements spanning different magnitudes simultaneously. PDMS has the desirable properties of being waterproof, chemically inert, and non-toxic. In addition, the sensor skin will conform to the curvilinear, deformable fingertip, which is crucial for performance. Computer simulations are being developed, and prototypes are under construction. Capacitance readings will be mapped to external stimuli using nonlinear regression models such as artificial neural networks.
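The final step, mapping capacitance readings to forces with a nonlinear regressor, can be sketched with a small neural network. Everything here is an illustrative assumption rather than the sensor's actual model: `simulate_capacitance` is a toy parallel-plate model of four capacitive units (capacitance proportional to electrode overlap divided by gap), not the COMSOL simulation, and `TinyMLP` is a hypothetical one-hidden-layer network trained with full-batch Adam in plain NumPy.

```python
import numpy as np


def simulate_capacitance(forces, rng, noise=0.002):
    """Toy forward model (hypothetical): 4 capacitive units under one fingertip pad.

    forces: (n, 3) array of [Fx, Fy, Fz] in normalized units.
    Normal force Fz narrows the dielectric gap; shear (Fx, Fy) changes the
    electrode overlap of units whose electrodes are offset in +/-x and +/-y.
    Parallel-plate approximation: capacitance is proportional to overlap / gap.
    """
    fx, fy, fz = forces.T
    gap = 1.0 - 0.3 * fz
    offsets = np.array([[1, 1], [1, -1], [-1, 1], [-1, -1]], dtype=float)
    overlap = 1.0 + 0.2 * (offsets[:, :1] * fx + offsets[:, 1:] * fy)  # (4, n)
    cap = (overlap / gap).T                                             # (n, 4)
    return cap + noise * rng.standard_normal(cap.shape)


class TinyMLP:
    """One tanh hidden layer, linear output, trained by full-batch Adam on squared error."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = np.random.default_rng(seed)
        self.p = {
            "W1": rng.standard_normal((n_in, n_hidden)) / np.sqrt(n_in),
            "b1": np.zeros(n_hidden),
            "W2": rng.standard_normal((n_hidden, n_out)) / np.sqrt(n_hidden),
            "b2": np.zeros(n_out),
        }

    def _forward(self, Xs):
        h = np.tanh(Xs @ self.p["W1"] + self.p["b1"])
        return h, h @ self.p["W2"] + self.p["b2"]

    def fit(self, X, Y, steps=2000, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
        # Standardize inputs and targets so one learning rate suits every output.
        self.x_mu, self.x_sd = X.mean(0), X.std(0)
        self.y_mu, self.y_sd = Y.mean(0), Y.std(0)
        Xs, Ys = (X - self.x_mu) / self.x_sd, (Y - self.y_mu) / self.y_sd
        m = {k: np.zeros_like(w) for k, w in self.p.items()}  # Adam first moments
        s = {k: np.zeros_like(w) for k, w in self.p.items()}  # Adam second moments
        for t in range(1, steps + 1):
            h, pred = self._forward(Xs)
            err = (pred - Ys) / len(Xs)                   # dLoss/dpred for Loss = sum(sq. err) / (2n)
            dh = (err @ self.p["W2"].T) * (1.0 - h ** 2)  # backprop through tanh
            grads = {"W2": h.T @ err, "b2": err.sum(0), "W1": Xs.T @ dh, "b1": dh.sum(0)}
            for k, g in grads.items():
                m[k] = beta1 * m[k] + (1 - beta1) * g
                s[k] = beta2 * s[k] + (1 - beta2) * g ** 2
                m_hat, s_hat = m[k] / (1 - beta1 ** t), s[k] / (1 - beta2 ** t)
                self.p[k] -= lr * m_hat / (np.sqrt(s_hat) + eps)
        return self

    def predict(self, X):
        _, pred = self._forward((X - self.x_mu) / self.x_sd)
        return pred * self.y_sd + self.y_mu


rng = np.random.default_rng(0)
n = 2000
forces = np.column_stack([rng.uniform(-1, 1, n), rng.uniform(-1, 1, n), rng.uniform(0, 1, n)])
caps = simulate_capacitance(forces, rng)
model = TinyMLP(4, 32, 3).fit(caps[:1500], forces[:1500])
test_f = forces[1500:]
pred = model.predict(caps[1500:])
r2 = 1 - ((pred - test_f) ** 2).sum(0) / ((test_f - test_f.mean(0)) ** 2).sum(0)
print("held-out R^2 for [Fx, Fy, Fz]:", np.round(r2, 3))
```

With physical prototypes, the training targets would come from a calibrated reference force sensor rather than a simulator, and held-out R² per force component is one reasonable way to judge the fit.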

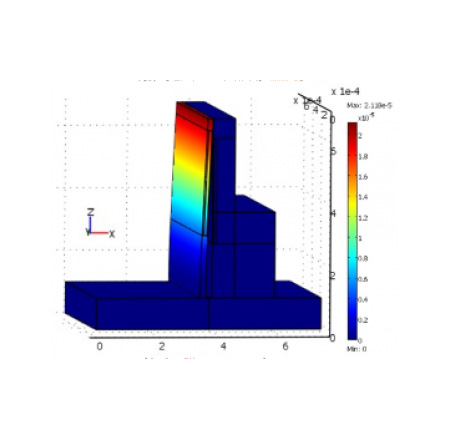

Sensory challenges for human-machine systems

- Brain-machine interface experiments.

Human users of teleoperated manipulators lack a conscious perception of rich tactile feedback, even when using devices as intimately connected to the human body as a neuroprosthesis. In collaboration with the ASU Sensorimotor Research Group, we are working to close the sensory portion of the human-machine loop. Using nonhuman primates and a sensorized robot hand, we are mapping the relationships between biological somatosensory cortex recordings and artificial tactile sensor readings. The ultimate goal is to understand how and when to stimulate the brain to provide the user with a conscious perception of tactile events occurring at an artificial fingertip.
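At its simplest, relating cortical recordings to artificial sensor readings is a regression problem. One common starting point, assumed here and not stated as the group's method, is a linear encoding model fitted with ridge regression that predicts each neuron's binned firing rate from tactile-sensor features. The `fit_ridge` and `r_squared` helpers, the six features, the ten neurons, and all data below are hypothetical and synthetic; real spike counts are often better served by a Poisson generalized linear model.

```python
import numpy as np


def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression Y ~ X @ W + b with an unpenalized intercept b.

    X: (n_samples, n_features) tactile-sensor features.
    Y: (n_samples, n_neurons) binned firing rates.
    """
    x_mu, y_mu = X.mean(0), Y.mean(0)
    Xc, Yc = X - x_mu, Y - y_mu                  # centering keeps the intercept out of the penalty
    W = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(X.shape[1]), Xc.T @ Yc)
    b = y_mu - x_mu @ W
    return W, b


def r_squared(Y, Y_hat):
    """Fraction of variance explained, per neuron."""
    return 1 - ((Y - Y_hat) ** 2).sum(0) / ((Y - Y.mean(0)) ** 2).sum(0)


rng = np.random.default_rng(0)
n, n_feat, n_neurons = 2000, 6, 10               # hypothetical sizes
X = rng.standard_normal((n, n_feat))
W_true = rng.normal(0, 2, (n_feat, n_neurons))
Y = X @ W_true + 5.0 + 0.5 * rng.standard_normal((n, n_neurons))

W_hat, b_hat = fit_ridge(X[:1500], Y[:1500], alpha=1.0)
r2 = r_squared(Y[1500:], X[1500:] @ W_hat + b_hat)
print("median held-out R^2:", np.median(r2).round(3))
```

Fitting the reverse direction (sensor features from neural activity) uses the same solver with the roles of `X` and `Y` swapped.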

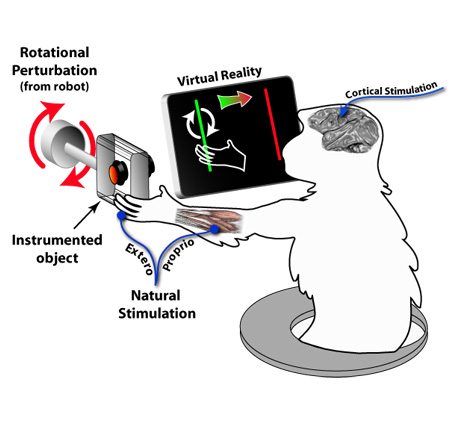

Sensory-event driven artificial reflexes

- Robot hand (Barrett Technology) using a two-fingered precision grasp.

We are currently developing a multi-level control system that maintains voluntary control at the higher, human level but implements autonomous “survival behaviors” at the lower, machine level to address communication delays between human and machine. These survival behaviors can be implemented with sensory-event driven artificial reflexes that are inspired by human grip responses. The current research testbed uses two of the three fingers of a robot hand (Barrett Technology), with flexion/extension and spread capabilities, to mimic the thumb and index finger of a human hand. An anthropomorphic research testbed is forthcoming.
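The division of labor described above can be sketched as a thin low-level layer that passes the operator's delayed grip command through unchanged until a sensory event, here incipient slip detected from the tangential-to-normal force ratio, triggers an immediate grip increase. This is a minimal illustration, not the lab's controller. It assumes Coulomb friction with a fixed coefficient, an ideal actuator that applies each command within one 1 ms control step, and a 250 ms human reaction-plus-communication delay; the names `ReflexConfig`, `ReflexLayer`, and `simulate` and all numeric values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ReflexConfig:
    mu: float = 0.8            # assumed fingertip friction coefficient
    safety_ratio: float = 0.8  # trigger when |Ft| exceeds this fraction of mu * Fn
    gain: float = 1.5          # reflex grip floor = gain * |Ft| / mu (a safety margin)


class ReflexLayer:
    """Low-level layer: never lowers the human command, only raises it on a slip event."""

    def __init__(self, cfg):
        self.cfg = cfg
        self.floor = 0.0  # latched minimum grip force set by the reflex, N

    def command(self, f_normal, f_tangential, human_cmd):
        cfg = self.cfg
        if abs(f_tangential) > cfg.safety_ratio * cfg.mu * f_normal:
            # Sensory event (incipient slip): latch a grip floor scaled to the load.
            self.floor = max(self.floor, cfg.gain * abs(f_tangential) / cfg.mu)
        if human_cmd >= self.floor:
            self.floor = 0.0  # operator has caught up; hand authority back
        return max(human_cmd, self.floor)


def simulate(use_reflex, delay_ms=250, steps=600, cfg=None):
    """Return the number of 1 ms steps during which the object slips."""
    if cfg is None:
        cfg = ReflexConfig()
    layer = ReflexLayer(cfg)
    grip = 2.0      # applied grip (normal) force, N
    slip_ms = 0
    for t in range(steps):
        load = 3.0 if t >= 100 else 1.0                  # tangential load steps up at t = 100 ms
        human_cmd = 6.0 if t >= 100 + delay_ms else 2.0  # operator reacts only after the delay
        if load > cfg.mu * grip:                         # Coulomb condition: slip when Ft > mu * Fn
            slip_ms += 1
        # Ideal actuator: the new command becomes the applied grip at the next step.
        grip = layer.command(grip, load, human_cmd) if use_reflex else human_cmd
    return slip_ms


print("slip duration without reflex:", simulate(use_reflex=False), "ms")
print("slip duration with reflex:   ", simulate(use_reflex=True), "ms")
```

The latch is a deliberate design choice in this sketch: without it, the raised grip would stop the slip, the trigger condition would clear, and the command would fall back to the operator's stale value, producing grip oscillation until the human response arrives.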

Characteristics of a three-fingered grasp during a pouring task requiring dynamic stability

- Instrumented containers of various shapes.

We are characterizing digit control in a three-fingered grasp for a task that closely relates to an activity of daily living. The pouring task requires coordination of the adduction/abduction and flexion/extension degrees of freedom for successful completion. Dynamic stability is required for purposeful translation and rotation of the fluid-filled container against gravity.
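With instrumented containers, one basic quantity to track is the net force and moment that the digits apply. The sketch below assumes each digit's contact point and 3-D force are known in a container-fixed frame and that the center of mass is known; the function `net_wrench`, the choice of thumb, index, and middle fingers, and the example numbers are hypothetical. By the Newton-Euler equations, a static hold requires both quantities to vanish, while changing the container's rotation rate during pouring requires a nonzero net moment.

```python
import numpy as np


def net_wrench(points, forces, mass, com=None, gravity=(0.0, 0.0, -9.81)):
    """Net force (N) and net moment about the center of mass (N*m) on a grasped object.

    points: (k, 3) digit contact locations in the object frame, m.
    forces: (k, 3) force each digit applies to the object, N.
    gravity: gravity vector expressed in the same object frame (rotate it as the object tilts).
    Gravity acts at the center of mass, so it adds force but no moment about it.
    """
    p = np.asarray(points, dtype=float)
    f = np.asarray(forces, dtype=float)
    c = np.zeros(3) if com is None else np.asarray(com, dtype=float)
    net_force = f.sum(axis=0) + mass * np.asarray(gravity, dtype=float)
    net_moment = np.cross(p - c, f).sum(axis=0)  # sum of r_i x f_i
    return net_force, net_moment


# Hypothetical static three-digit hold of a 0.5 kg container (z up).
points = [[-0.04, 0.00, 0.0],   # thumb
          [0.04, 0.01, 0.0],    # index
          [0.04, -0.01, 0.0]]   # middle
forces = [[10.0, 0.0, 2.4525],
          [-5.0, 0.0, 1.22625],
          [-5.0, 0.0, 1.22625]]
net_force, net_moment = net_wrench(points, forces, mass=0.5)
print("net force:", net_force.round(6), "net moment:", net_moment.round(6))
```

For a fluid-filled container, the center of mass shifts as the container tilts and the fluid moves, so `com` would need to be updated over the course of a pouring trial.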