- Offer Profile

The Robotics group started at the very beginning of academic activities at Jacobs University in fall 2001. Much like Jacobs itself, a novel, highly selective institution in the German university landscape, robotics is still a very young and very small but ambitious group committed to excellence in research. The group is truly international, with English as its working language. Jacobs University was called International University Bremen (IUB) before 2007; hence both names for the same institution can be found on this website.

Jacobs Robotics is embedded in the EECS undergraduate teaching activities and in the CS graduate program SmartSystems.

The research of the group focuses on Autonomous Systems. Its expertise in this field ranges from the development of embedded hardware through mechatronics and sensors to high-level software. On the basic research side of autonomous systems, machine learning and cooperation are core themes of robotics research at Jacobs University. The systems developed here are used in various domains, the most important one being safety, security and rescue robotics (SSRR).

Search and Rescue Robots

- Rescue Robots: What is it all about?

Rescue Robotics is a newly emerging field dealing with systems that support first response units in disaster missions. Mobile robots in particular can be highly valuable tools in urban rescue missions after catastrophes like earthquakes or bomb and gas explosions, or in daily incidents like fires and road accidents involving hazardous materials. The robots can be used to inspect collapsed structures, to assess the situation, and to search for and locate victims. There are many engineering and scientific challenges in this domain. Rescue robots not only have to be designed for the harsh environmental conditions of disasters; they also need advanced capabilities like intelligent behaviors that free them from constant supervision by operators.

The idea of using robots in rescue missions has been supported for several years by RoboCup, a well-known international research and education initiative in Artificial Intelligence (AI) and robotics. As early as the summer of 2000, a demo event at the RoboCup world championship in Melbourne, Australia, demonstrated the potential of this field. RoboCupRescue has since been a regular part of RoboCup, with dozens of teams applying to participate in the real robot and simulation competitions. The steadily growing popularity of this field can be explained by the strong application potential of currently existing systems, which in addition still leave substantial room for further improvement through basic research on core issues of AI and robotics.

The Robotics Group at Jacobs University has been actively engaged in this research field since 2001. The Jacobs Robotics Rescue Robots Team features several robots, which are complete in-house designs ranging from the basic mechatronics to the high-level behavior software. Jacobs Robotics also has a special training site, the Jacobs Robotics Rescue Arena, where the robots and their operation can be tested in a simulated disaster scenario.

rugbot

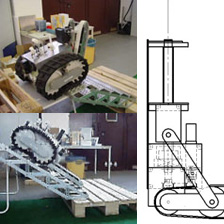

- The so-called rugbot type of robot is the latest development of Jacobs University robotics. The name rugbot is derived from "rugged robot". This robot type is a complete in-house development based on the so-called CubeSystem, a collection of hardware and software components for fast robot prototyping. Rugbots are tracked vehicles that are lightweight (about 35 kg) and have a small footprint (approximately 50 cm x 50 cm). They are very agile and fast on open terrain. An active flipper mechanism allows rugbots to negotiate stairs and rubble piles.

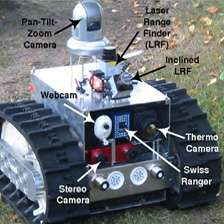

Their small footprint is highly beneficial in indoor scenarios. They have significant on-board computation power and can be equipped with a large variety of sensors. The standard payload includes a laser scanner, ultrasound sensors, four cameras, one thermal camera, and rate gyros. The on-board software is capable of mapping, detection of humans, and fully autonomous control. Teleoperation is also supported to varying degrees. The on-board batteries allow for 2.5 to 3 hours of continuous operation.

Locomotion

- Like many other robots designed for operation in rough environments, rugbots use tracks. This type of locomotion is often considered the most versatile, as it can handle relatively large obstacles and loose soil. The technology for tracked locomotion is well understood and simple. Its advantages are smooth locomotion on relatively even terrain, superb traction on loose ground, the ability to handle large obstacles as well as small holes and ditches, and a good payload capacity. On the downside, the tracks suffer from slip friction whenever the vehicle turns. Also, if the locomotion system has only one pair of tracks, it suffers from impacts, e.g., when climbing over large boulders or when it starts going down steep slopes. Nevertheless, tracked locomotion is the most suitable for surmounting obstacles and negotiating stairways, and it is able to adapt to terrain variations.
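The turning slip mentioned above stems from skid steering: a tracked vehicle turns by running its two tracks at different speeds. As a minimal sketch, the Python code below shows the idealized, slip-free kinematics, assuming a hypothetical track gauge of 0.4 m (not a published rugbot specification). In reality the tracks must slide sideways during a turn, which is exactly the friction loss described above, so real turn rates fall short of this model.

```python
from dataclasses import dataclass


@dataclass
class TrackCommand:
    left: float   # left track speed in m/s
    right: float  # right track speed in m/s


# Hypothetical distance between the track centerlines in meters (assumption).
TRACK_GAUGE_M = 0.4


def body_velocity(cmd: TrackCommand, gauge: float = TRACK_GAUGE_M) -> tuple[float, float]:
    """Return (forward speed in m/s, yaw rate in rad/s) for ideal, slip-free tracks."""
    v = (cmd.right + cmd.left) / 2.0
    omega = (cmd.right - cmd.left) / gauge
    return v, omega


def track_speeds(v: float, omega: float, gauge: float = TRACK_GAUGE_M) -> TrackCommand:
    """Inverse kinematics: track speeds needed for a desired body motion."""
    half = omega * gauge / 2.0
    return TrackCommand(left=v - half, right=v + half)


if __name__ == "__main__":
    # Turning on the spot: the tracks run in opposite directions.
    print(body_velocity(TrackCommand(left=-0.2, right=0.2)))  # (0.0, 1.0)
```

Turning on the spot, as in the usage line, is the case with maximal lateral slip, since the whole track length has to be dragged sideways.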

Sensors and Onboard Intelligence

- The rugbots have significant on-board computation power and various sensor payloads. They can be teleoperated or even run fully autonomous missions in challenging domains like rescue robotics. In addition to the robots' mechatronics, the development of intelligent behaviors is a great challenge in this research area.

For example, the robot must autonomously detect victims and hazards. Special sensors like CO2 detectors and infrared cameras are used to detect humans. In the thermal images, a special approach is used to detect human shapes.
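The source does not describe the human-shape detector itself. As a much simpler stand-in, the sketch below flags candidate warm regions in a thermal image. It keeps pixels within an assumed body-surface temperature band (30 to 38 °C, a hypothetical choice), groups them into 4-connected blobs, and discards blobs below a minimum area. A shape-based detector of the kind mentioned above would then classify each blob's outline.

```python
from collections import deque

# Hypothetical thresholds (assumptions, not values from the rugbot software).
T_MIN_C = 30.0      # lower bound of the "warm body" band in degrees Celsius
T_MAX_C = 38.0      # upper bound of the band
MIN_AREA_PX = 4     # ignore blobs smaller than this many pixels


def warm_blobs(image: list[list[float]], t_min: float = T_MIN_C,
               t_max: float = T_MAX_C, min_area: int = MIN_AREA_PX) -> list[list[tuple[int, int]]]:
    """Return 4-connected groups of pixels whose temperature lies in [t_min, t_max]."""
    rows, cols = len(image), len(image[0]) if image else 0
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or not (t_min <= image[r][c] <= t_max):
                continue
            # Breadth-first flood fill starting at this seed pixel.
            blob, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols and not seen[ny][nx]
                            and t_min <= image[ny][nx] <= t_max):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) >= min_area:
                blobs.append(blob)
    return blobs
```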

The onboard software of rugbots also covers mapping, exploration and planning. The related research from Jacobs Robotics includes 3D mapping in unstructured environments.

Last but not least, the on-board software enables rugbots to cooperate in multi-robot teams.

Underwater Robotics: The “Lead Zeppelin”

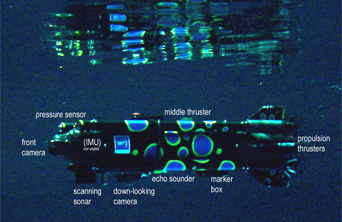

- Underwater robotics is a relatively new domain for us. Our first successful work in this domain includes the development of the Lead Zeppelin, an autonomous underwater vehicle (AUV).

The “Lead Zeppelin” is an Autonomous Underwater Vehicle (AUV) developed by the Jacobs Robotics Group and the Robotics Club in cooperation with ATLAS Elektronik. It is designed for basic research and education purposes. The “Lead Zeppelin” has already passed its first major test by participating in the Student Autonomous Underwater Challenge - Europe (SAUC-E) from the 3rd to the 7th of August, 2006. SAUC-E was held at Pinewood Studios near London, where a large-scale underwater stage, normally used for movie productions such as the James Bond films, was available for the competition. The Jacobs Robotics team was a real outsider among the seven participating teams: all of them except ours had previous experience with underwater robotics. Nevertheless, the Jacobs Robotics team came in second in performance.

The Jacobs Robotics “Lead Zeppelin” is to a large extent a converted land robot, as a major part of its hardware and software was developed for the latest generation of the Jacobs Robotics rescue robots. The most basic underwater parts, namely the hull with the motors and propellers as well as some of the sensors, were provided by ATLAS Elektronik. These parts come from a so-called Seafox submarine, which is a remotely operated vehicle (ROV), i.e., a human steers this device. The “Lead Zeppelin” received completely new electronics developed at Jacobs Robotics, a powerful on-board computer, additional sensors including cameras, and of course a large amount of software to be able to execute missions on its own. For example, it is programmed to move through an environment while avoiding obstacles, to recognize targets by computer vision, and to operate at specific desired depths.

Hardware Components of Lead Zeppelin

- Some of the most basic hardware parts, namely the hull, the motors and some of the sensors, are based on parts from a so-called Seafox ROV by ATLAS Elektronik. For autonomous operation, completely new electronics, additional sensors, a high-performance on-board computer, and of course a lot of software were added. The "Lead Zeppelin" AUV uses two computation units, which is a common approach for so-called CubeSystem applications. The basic hardware control is done by a RoboCube. Higher-level AI software runs on an embedded PC, which collects all sensor data from the RoboCube as well as from the sensors directly attached to the PC. An external operator PC, connected via a wireless network, runs a GUI that can be used for teleoperation and for debugging the autonomy. Optionally, an antenna buoy can be used to maintain wireless communication. This is of course not needed if full autonomy is activated.

The submarine is composed of a main hull, a nose and four long tubes. Those parts house the power system, the propulsion system, a low level controller, a high level controller and a rich sensor payload.

The nose section contains:

- pressure sensor for depth measurement

- heading sensor including pitch and roll sensor

- scanning sonar head

- front camera

The middle section contains:

- echo sounder

- vertical thruster

- embedded PC

- RoboCube

- DC/DC converters

- wireless access point

Outside, below the middle section the following parts are attached to the vehicle:

- down-looking ground camera

- marker disposal system

Furthermore, four battery tubes are attached to the middle section, each containing:

- propulsion motor

- propeller with guards

- power amplifier for the motor battery

The submarine is trimmed slightly positively buoyant, i.e., it rises to the surface in case of a failure. Thus the vertical thruster in the middle section has to be used to force the vehicle under water. The maximum power of the four propulsion motors is 350 W, of which up to 280 W is usable due to PWM duty cycle limitations. The 3-blade propellers are protected by guards for safety.
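Because the hull is trimmed positively buoyant, holding a commanded depth means the vertical thruster has to push down continuously against a net upward force. The sketch below shows a minimal depth-hold loop for this. It assumes a simple PI controller with hypothetical gains and a normalized thruster command in [-1, 1], where positive means downward thrust. The source does not state which controller the Lead Zeppelin actually uses.

```python
from dataclasses import dataclass


@dataclass
class DepthPI:
    """PI depth controller; gains and limits are hypothetical, not from the source."""
    kp: float = 0.8        # proportional gain per meter of depth error
    ki: float = 0.1        # integral gain per meter-second of depth error
    limit: float = 1.0     # thruster command saturates at +/- limit
    integral: float = 0.0  # accumulated depth error in meter-seconds

    def update(self, target_depth: float, measured_depth: float, dt: float) -> float:
        """Return a thruster command; positive values push the vehicle down."""
        error = target_depth - measured_depth  # positive when the AUV is too shallow
        candidate = self.integral + error * dt
        command = self.kp * error + self.ki * candidate
        # Simple anti-windup: only keep the new integral if the output is not saturated.
        if abs(command) <= self.limit:
            self.integral = candidate
        else:
            command = max(-self.limit, min(self.limit, command))
        return command
```

The integral term is what lets the loop settle at the target despite the constant buoyancy. A purely proportional controller would need a permanent positive error to produce the required downward thrust, so the vehicle would hover slightly above the commanded depth.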


- A. Heading Sensor

An MTi IMU from Xsens is used. It is a low-cost miniature inertial measurement unit with an integrated 3D compass. It has an embedded processor capable of calculating roll, pitch and yaw in real time, as well as outputting calibrated 3D linear acceleration, rate of turn (gyro) and (earth) magnetic field data. The MTi uses a right-handed Cartesian coordinate system that is fixed to the device body and defined as the sensor coordinate system. Since the unit is placed near the center of the submarine, the orientations it provides can be used directly as vehicle orientations. The heading sensor is used to measure and control the roll, pitch and yaw of the AUV.
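The MTi computes its orientation internally with its own sensor fusion, which the source does not detail. The sketch below only illustrates the basic principle of how an accelerometer and a 3D magnetometer together yield roll, pitch and yaw. It uses the common static, tilt-compensated compass equations for an assumed x-forward, y-right, z-down body frame, in which a level sensor at rest reads gravity as (0, 0, +1 g). It ignores vehicle motion, gyro integration and magnetic declination, and its axis convention may differ from the MTi's sensor frame.

```python
import math


def roll_pitch_yaw(accel: tuple[float, float, float],
                   mag: tuple[float, float, float]) -> tuple[float, float, float]:
    """Static tilt-compensated orientation in radians (roll, pitch, yaw).

    Assumed frame: x forward, y right, z down; a level sensor at rest reads
    accel = (0, 0, +1). Yaw is relative to magnetic north (no declination).
    """
    gx, gy, gz = accel
    bx, by, bz = mag
    roll = math.atan2(gy, gz)
    sin_r, cos_r = math.sin(roll), math.cos(roll)
    pitch = math.atan2(-gx, gy * sin_r + gz * cos_r)
    sin_p, cos_p = math.sin(pitch), math.cos(pitch)
    # Rotate the measured field back into the horizontal plane before taking the heading.
    yaw = math.atan2(bz * sin_r - by * cos_r,
                     bx * cos_p + by * sin_p * sin_r + bz * sin_p * cos_r)
    return roll, pitch, yaw
```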

B. Scanning Sonar

The submarine has a scanning sonar that scans its surroundings for obstacles and measures their distance by emitting sound signals at 550 kHz and measuring the time until the echo comes back. The sonar covers up to 360° since it is rotated by a motor in steps of 1° or more, and it has a resolution of 1.5°. The range of the sonar is selectable up to 80 meters. The sensor can also scan vertically with a coverage of ±20°. The horizontal scan rate is about 60° per second at distances of up to 10 m.
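The range measurement is a time-of-flight calculation. The pulse travels to the obstacle and back, so range = c·t/2. The sketch below converts echo times into ranges and turns one sweep of (bearing, range) readings into 2D obstacle points in the vehicle frame. The nominal speed of sound of 1500 m/s is an assumption, since it actually varies with temperature, salinity and depth. The convention that bearing 0° points forward with angles increasing toward starboard is also assumed, and the function names are illustrative rather than the sonar vendor's API.

```python
import math

SPEED_OF_SOUND_M_S = 1500.0  # nominal value for water (assumption)


def echo_range(round_trip_s: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Distance to the reflector in meters from the two-way travel time."""
    return c * round_trip_s / 2.0


def sweep_to_points(sweep: list[tuple[float, float]]) -> list[tuple[float, float]]:
    """Convert (bearing in degrees, range in m) pairs to (x forward, y right) points.

    Readings with a range of zero or less are treated as 'no echo' and skipped.
    """
    points = []
    for bearing_deg, rng in sweep:
        if rng <= 0.0:
            continue
        b = math.radians(bearing_deg)
        points.append((rng * math.cos(b), rng * math.sin(b)))
    return points
```

At the stated rate of about 60° per second, one full 360° sweep takes roughly 6 s. Obstacle avoidance with a mechanically scanned sonar therefore has to cope with readings that are several seconds old by the time the head comes around again.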

C. Pressure sensor

The pressure sensor measures the depth of the submarine below the water surface. It has an operating pressure range from 0 to 31 bar and is thus operational down to a depth of 300 meters. It can be interfaced directly to the A/D converters of the RoboCube.
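The depth figure follows from the hydrostatic relation p = p_atm + ρ·g·h. The sketch below converts an absolute pressure reading into depth. The water density is an assumption: about 1025 kg/m³ for seawater and 1000 kg/m³ for fresh water such as a test basin. The calibration from RoboCube A/D counts to pressure is left out because the source does not give the sensor's transfer function.

```python
G = 9.80665               # standard gravity in m/s^2
P_ATM_PA = 101_325.0      # standard atmospheric pressure at the surface in Pa
RHO_SEAWATER = 1025.0     # assumed seawater density in kg/m^3
RHO_FRESHWATER = 1000.0   # assumed fresh-water density in kg/m^3


def depth_from_pressure(p_abs_pa: float, rho: float = RHO_SEAWATER,
                        p_surface_pa: float = P_ATM_PA) -> float:
    """Depth below the surface in meters from an absolute pressure in Pa."""
    return max(0.0, (p_abs_pa - p_surface_pa) / (rho * G))


if __name__ == "__main__":
    # Upper end of the sensor range, assuming the 31 bar rating is absolute pressure.
    print(round(depth_from_pressure(31e5)))  # 298 (meters of seawater)
```

If the 31 bar rating is absolute pressure, about 30 bar remain for the water column, which matches the stated operating depth of roughly 300 m.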

D. Echo sounder

The echo sounder measures the distance from the submarine to the ground by emitting sound pulses with a frequency of 500 kHz and a width of 100 µs. The echo of this signal is received, and the measured travel time is used to calculate the distance. The beam width is ±3°, the accuracy ±5 cm, and the measuring range 0.5 to 9.8 meters.
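Two numbers in this specification follow from simple time-of-flight arithmetic. A pulse of width τ occupies c·τ of water, so two reflectors closer than c·τ/2 in range merge into one echo. With τ = 100 µs and an assumed c = 1500 m/s, that is 7.5 cm. The sketch below computes this figure and converts echo times into altitude above the bottom. It rejects readings outside the stated 0.5 to 9.8 m range; returning `None` for those is an illustrative design choice, not the device's behavior.

```python
from typing import Optional

SPEED_OF_SOUND_M_S = 1500.0          # nominal speed of sound in water (assumption)
MIN_RANGE_M, MAX_RANGE_M = 0.5, 9.8  # measuring range stated for the echo sounder


def range_resolution(pulse_width_s: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Smallest separation in range at which two echoes can still be told apart."""
    return c * pulse_width_s / 2.0


def altitude(round_trip_s: float, c: float = SPEED_OF_SOUND_M_S) -> Optional[float]:
    """Distance to the bottom in meters, or None if outside the measuring range."""
    d = c * round_trip_s / 2.0
    return d if MIN_RANGE_M <= d <= MAX_RANGE_M else None
```

Adding this altitude to the depth from the pressure sensor gives an estimate of the total water depth below the vehicle.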

E. USB cameras

Two Creative NX Ultra USB cameras are used in the vehicle. They support a resolution of up to 640 x 480 pixels. These USB 1.1 devices have a wide-angle lens with a field of view of 78°. The camera at the bottom of the submarine looks at the ground; it can, for example, be used to locate targets and to guide the submarine directly over them. The front camera can be used to search for bottom targets as well as mid-water targets. Both cameras are enclosed in waterproof protective housings of transparent plastic.
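For the down-looking camera, the field of view determines how large a patch of the bottom is visible from a given altitude: the footprint width is 2·h·tan(FOV/2). The sketch below applies this formula to the 78° figure, assuming it is the horizontal field of view in air. Behind a flat transparent cover, the underwater field of view is narrower because of refraction. The optional in-water correction (Snell's law with an assumed refractive index of 1.33 and a thin flat port) is therefore only an approximation.

```python
import math

N_WATER = 1.33  # approximate refractive index of water (assumption)


def footprint_width(altitude_m: float, fov_deg: float = 78.0, underwater: bool = False) -> float:
    """Width in meters of the ground strip seen by a down-looking camera."""
    half = math.radians(fov_deg) / 2.0
    if underwater:
        # Rays leaving the water into the air-filled housing bend away from the normal,
        # so the in-water half-angle satisfies n_water * sin(water) = sin(air).
        half = math.asin(math.sin(half) / N_WATER)
    return 2.0 * altitude_m * math.tan(half)
```

At 1 m altitude this gives a strip roughly 1.6 m wide in air and about 1.1 m behind a flat port underwater.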

The AUV Software

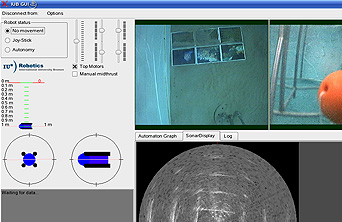

- As in other CubeSystem robots, the software for the "Lead Zeppelin" AUV is structured in two parts. The basic low-level control is done on the RoboCube. It uses the CubeOS and the RobLib to generate the proper PWM signals, to decode the encoder signals from the motors, and to access the analog pressure sensor and echo sounder. The higher-level AI software runs on the robot PC, which uses SuSE 10 as its operating system. It collects all sensor data from the cube, especially depth and speed, as well as from the sensors directly attached to the PC's interfaces. It uses the high-resolution sonar head for obstacle avoidance and to localize other objects in the basin. The front USB camera can be used, for example, to find mid-water targets, and the down-looking camera can locate targets situated on the bottom. The software reuses many functions and libraries developed for other Jacobs Robotics projects, especially the rescue robots.
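Decoding motor encoders is a classic low-level task of the kind handled on the RoboCube. The source does not show the RobLib code, so the sketch below is only a generic illustration of 4x quadrature decoding. Each pair of successive A/B channel states is looked up in a transition table that yields +1, -1 or 0 counts. Ignoring invalid double transitions is a simplifying assumption. The code is written in Python for readability; on the controller itself it would run in C.

```python
# Count change for every (previous state, current state) pair, indexed as prev * 4 + curr.
# A state is (A << 1) | B; forward rotation walks the Gray sequence 00 -> 01 -> 11 -> 10 -> 00.
_TRANSITION = (0, +1, -1, 0,
               -1, 0, 0, +1,
               +1, 0, 0, -1,
               0, -1, +1, 0)


class QuadratureDecoder:
    """Counts encoder edges from sampled A/B channel levels (illustrative sketch)."""

    def __init__(self, a: int = 0, b: int = 0) -> None:
        self.state = (a << 1) | b
        self.count = 0

    def sample(self, a: int, b: int) -> int:
        """Feed one sample of both channels; returns the updated position count."""
        new_state = ((a & 1) << 1) | (b & 1)
        # Illegal jumps (both channels changing at once) map to 0, i.e. they are ignored.
        self.count += _TRANSITION[self.state * 4 + new_state]
        self.state = new_state
        return self.count
```

Differencing the count over a fixed sampling interval yields the motor speed.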

The AI software is embedded in a robot server, which is a multi-threaded program written in C++. All system-wide constants like port numbers, resolutions, etc., are read at startup from a configuration file. A client GUI running on a PC or a laptop connects to this server in order to manually drive the robot to a start position using a gamepad, to start the autonomy, and to observe the submarine's status during the mission as long as the wireless connection is available. The NIST RCS framework is used to handle communication between the robot server and the operator GUI. This framework allows data to be transferred between processes running on the same or different machines using Neutral Message Language (NML) memory buffers. All buffers are located on the robot computer and can be accessed asynchronously by the operator GUI process. The GUI uses Trolltech's cross-platform Qt class libraries. Several threads run in parallel to handle the massive amount of data; each camera view has its own thread.
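NML buffers let a writer publish data that readers pick up asynchronously. The sketch below imitates only that idea within a single Python process, using a hypothetical `LatestValueBuffer`. A producer thread, standing in for one camera thread, overwrites the buffer, and a consumer, standing in for the GUI, reads it whenever it likes. A sequence number lets the reader tell whether anything new has arrived. The sketch neither uses nor reproduces the actual NIST RCS/NML API.

```python
import threading
from typing import Any, Optional


class LatestValueBuffer:
    """Single-slot buffer: writers overwrite, readers get the newest value (hypothetical)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._value: Optional[Any] = None
        self._seq = 0  # increments on every write

    def write(self, value: Any) -> None:
        with self._lock:
            self._value = value
            self._seq += 1

    def read(self) -> tuple[int, Optional[Any]]:
        """Return (sequence number, value); sequence 0 means nothing written yet."""
        with self._lock:
            return self._seq, self._value


def camera_thread(buf: LatestValueBuffer, frames: int) -> None:
    """Stand-in for a per-camera thread that publishes frame identifiers."""
    for i in range(frames):
        buf.write({"frame": i})


if __name__ == "__main__":
    front = LatestValueBuffer()
    worker = threading.Thread(target=camera_thread, args=(front, 100))
    worker.start()
    worker.join()
    print(front.read())  # (100, {'frame': 99})
```

Overwriting instead of queueing is a deliberate choice in this sketch. A slow GUI then simply skips stale camera frames rather than falling further and further behind.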

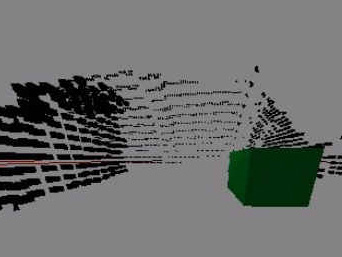

3D Mapping in Unstructured Environments

- This ongoing project is funded by the Deutsche Forschungsgemeinschaft (DFG). It deals with the generation of 3D maps with a mobile robot in unstructured environments like search and rescue scenarios.

The project uses a special learning approach. The goal is to generate a metric 3D environment representation encoded in a compact surface model language like X3D, the successor of the Virtual Reality Modeling Language (VRML). The algorithm uses a special online evolution in which a local X3D neighborhood model is extracted from raw 3D sensor data while the robot moves along. Inspired by previous successful work on real-time learning of 3D eye-hand coordination with a robot arm, the evolutionary algorithm tries to generate compact model code that reproduces the vast amounts of sensor data.
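The source does not give the details of the online evolution or of the generated X3D code. The sketch below conveys only the underlying idea of evolving a compact model whose few parameters reproduce many raw points. It uses a (1+λ) evolution strategy to fit a single plane z = a·x + b·y + c to a 3D point cloud. The mean squared residual serves as the error to minimize, and a simple success-based rule adapts the step size. The single-plane model, the parameter names and all constants are illustrative assumptions; a real surface model would combine many primitives.

```python
import random

Point = tuple[float, float, float]
Plane = tuple[float, float, float]  # (a, b, c) for z = a*x + b*y + c


def plane_error(plane: Plane, points: list[Point]) -> float:
    """Mean squared vertical residual between the raw points and the plane model."""
    a, b, c = plane
    return sum((a * x + b * y + c - z) ** 2 for x, y, z in points) / len(points)


def evolve_plane(points: list[Point], generations: int = 300, offspring: int = 8,
                 sigma: float = 1.0, seed: int = 0) -> tuple[Plane, float]:
    """(1+lambda) evolution strategy: keep the best of the parent and its mutated children."""
    rng = random.Random(seed)
    parent: Plane = (0.0, 0.0, 0.0)
    parent_err = plane_error(parent, points)
    for _ in range(generations):
        children = [tuple(p + rng.gauss(0.0, sigma) for p in parent) for _ in range(offspring)]
        best_err, best = min((plane_error(ch, points), ch) for ch in children)
        if best_err < parent_err:
            parent, parent_err = best, best_err
            sigma *= 1.5  # success: widen the search
        else:
            sigma *= 0.7  # failure: search closer to the parent
    return parent, parent_err
```

Three evolved numbers here stand in for the "compact model code" that summarizes a whole patch of sensor data.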

In doing so, a special metric is used to compare the similarity of the sensor input with a candidate model. Unlike other approaches, which use the Hausdorff distance or detect correspondences between invariant features and apply transformation metrics to them, this similarity function has several significant advantages. First, it can be computed very efficiently. Second, it is not restricted to particular objects like polygons. Third, it operates on the level of raw data points. It hence does not need any preprocessing stages for feature extraction, which cost additional computation time and are extremely vulnerable to noise and occlusions.
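The source names the properties of its similarity function (cheap to compute, not tied to polygons, working directly on raw points) but not its definition. The sketch below illustrates a measure with those properties and is a hypothetical stand-in, not the Jacobs metric. It quantizes two point sets into voxels of an assumed edge length and scores them by the Jaccard overlap of the occupied voxels. Runtime is linear in the number of points, and no features are extracted.

```python
import math

Point = tuple[float, float, float]


def voxelize(points: list[Point], voxel: float) -> set[tuple[int, int, int]]:
    """Set of integer voxel indices occupied by at least one point."""
    return {(math.floor(x / voxel), math.floor(y / voxel), math.floor(z / voxel))
            for x, y, z in points}


def voxel_similarity(sensed: list[Point], model: list[Point], voxel: float = 0.05) -> float:
    """Jaccard overlap in [0, 1] of the voxels occupied by the two point sets."""
    a, b = voxelize(sensed, voxel), voxelize(model, voxel)
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)
```

To score a candidate surface model this way, it would first have to be sampled into points. The voxel size trades tolerance to sensor noise against discrimination between similar models.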

Last but not least, the project tackles the localization of the robot. Instead of using standard SLAM approaches, the problem is addressed by map fusion. A second evolutionary algorithm generates different poses of the local VRML model with respect to the global one and measures the similarity of overlapping regions. The same special similarity metric used for the generation of the local model can also solve this task. Based on the estimated pose of the robot within the global model, the initial population is seeded with corresponding poses of the local model. Even if the robot is severely lost, there is still a realistic chance of a proper integration, because parts of the local model are sufficiently similar to the right parts of the global model; the robot then gets correctly localized again.

A typical unstructured environment: the Rescue League arena at RoboCup 2006 in Bremen.

3D model from a stationary robot

3D World Modeling for Robot Action Planning, Recognition and Imitation

- The project deals with the investigation of 3D world modeling and robot action planning, recognition and imitation. It is based on joint research by the Jacobs University Robotics group and the Biologically Inspired Autonomous Robots Team (BioART) led by Yiannis Demiris at Imperial College. Another current project at Jacobs Robotics focuses on the problem of autonomously creating a 3D representation of an unstructured indoor environment with a mobile robot (a 3D map). The architecture being developed at Jacobs University can extend the system designed by BioART for robot action perception and imitation by relaxing the constraints it imposes on the environment. At the same time, the 3D world modeling framework can be improved significantly by coupling it with an attention mechanism, which is part of the architecture developed at BioART. Such an exchange of knowledge will significantly benefit both research groups and improve the performance of their currently developed systems.

Multi-Robot-Teams: Cooperative Mapping and Exploration

- Multi-robot teams are beneficial for many application domains, as a group of robots can do many tasks much more robustly and efficiently than a single robot. A particularly good example is the field of Safety, Security and Rescue Robotics (SSRR), as demonstrated with the Jacobs rescue robots. Reconnaissance missions in particular, e.g., the search for victims in a rescue scenario, can profit from several robots operating in parallel. But achieving proper cooperation is non-trivial, as illustrated by the following examples of contributions by Jacobs Robotics.

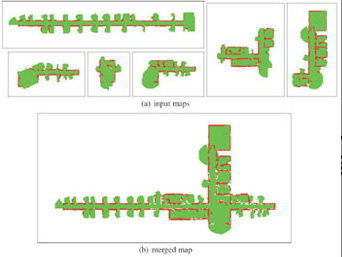

Map Merging

- Mapping can potentially be sped up significantly by using multiple robots that explore different parts of the environment. But the core question of multi-robot mapping is how to integrate the data of the different robots into a single global map. A significant amount of research on multi-robot mapping deals with techniques to estimate the relative poses of the robots at the start of or during the mapping process. With map merging, in contrast, the robots individually build local maps without any knowledge of their relative positions. The goal is then to identify regions of overlap at which the local maps can be joined together. Jacobs Robotics developed a concrete approach to this idea in the form of a special similarity metric and a stochastic search algorithm. Given two maps m and m', the search algorithm transforms m' by rotations and translations to find a maximum overlap between m and m'. In doing so, the heuristic similarity metric guides the search algorithm toward optimal solutions.
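The search described here can be sketched on occupancy grids. The version below is a simplified illustration, not the published Jacobs algorithm. Known cells are +1 (occupied) or -1 (free), and unknown cells are simply absent. A hypothetical agreement score counts cells where both maps are known and equal, minus cells where they disagree. The search is a plain random-restart hill climb over a rotation and an integer translation of m'; rotations are restricted to multiples of 90° so that grid cells map exactly onto cells.

```python
import random

Grid = dict[tuple[int, int], int]  # (x, y) -> +1 occupied, -1 free; unknown cells are absent


def transform(grid: Grid, quarter_turns: int, dx: int, dy: int) -> Grid:
    """Rotate by quarter_turns * 90 degrees about the origin, then translate by (dx, dy)."""
    out = {}
    for (x, y), v in grid.items():
        for _ in range(quarter_turns % 4):
            x, y = -y, x
        out[(x + dx, y + dy)] = v
    return out


def agreement(m: Grid, m2: Grid) -> int:
    """Heuristic similarity: agreeing known cells minus disagreeing known cells."""
    score = 0
    for cell, v in m2.items():
        if cell in m:
            score += 1 if m[cell] == v else -1
    return score


def merge_search(m: Grid, m2: Grid, restarts: int = 20, steps: int = 200,
                 span: int = 10, seed: int = 0) -> tuple[int, int, int]:
    """Random-restart hill climbing over (quarter_turns, dx, dy) maximizing agreement."""
    rng = random.Random(seed)
    best_pose, best_score = (0, 0, 0), agreement(m, m2)
    for _ in range(restarts):
        pose = (rng.randrange(4), rng.randint(-span, span), rng.randint(-span, span))
        score = agreement(m, transform(m2, *pose))
        for _ in range(steps):
            r, dx, dy = pose
            cand = ((r + rng.choice((-1, 0, 0, 1))) % 4,
                    dx + rng.choice((-1, 0, 1)), dy + rng.choice((-1, 0, 1)))
            cand_score = agreement(m, transform(m2, *cand))
            if cand_score >= score:  # accept ties so the climber can cross plateaus
                pose, score = cand, cand_score
        if score > best_score:
            best_pose, best_score = pose, score
    return best_pose
```

Random restarts are the stochastic element that helps the search escape poses where only a small, misleading part of the maps overlaps.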

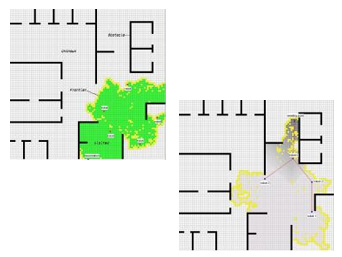

Cooperative Exploration

- A popular basis for robot exploration is the frontier-based exploration algorithm introduced by Yamauchi in 1997. In this algorithm, the frontier is defined as the collection of regions on the boundary between open and unexplored space. A robot moves to the nearest frontier, i.e., toward the nearest unknown area. By moving to the frontier, the robot explores new parts of the environment, and the newly explored region is added to the map that is created during the exploration. Obviously, multi-robot systems are a very interesting option for exploration, as they can lead to a significant speed-up and increased robustness. There are several possibilities to extend the frontier-based algorithm to a multi-robot version. First, there is the most straightforward extension, namely to let the robots run individually and simply fuse their data in a global map. Each robot then simply moves to its nearest frontier cell.
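Frontier cells in Yamauchi's sense can be found directly on an occupancy grid: a frontier cell is a known-free cell with at least one unknown neighbor. The sketch below marks such cells and runs a breadth-first search through free space to find the frontier nearest to the robot. That cell is the target in the single-robot strategy and in the straightforward multi-robot extension described above. The grid encoding ('.' free, '#' occupied, '?' unknown) and the use of 4-neighborhoods are assumptions made for brevity.

```python
from collections import deque
from typing import Iterator, Optional

Cell = tuple[int, int]
FREE, OCCUPIED, UNKNOWN = ".", "#", "?"


def neighbors(grid: list[str], r: int, c: int) -> Iterator[Cell]:
    """4-connected neighbors that lie inside the grid."""
    for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]):
            yield nr, nc


def is_frontier(grid: list[str], r: int, c: int) -> bool:
    """A free cell that borders at least one unknown cell."""
    return grid[r][c] == FREE and any(grid[nr][nc] == UNKNOWN for nr, nc in neighbors(grid, r, c))


def nearest_frontier(grid: list[str], start: Cell) -> Optional[Cell]:
    """Breadth-first search through free cells; returns the closest frontier cell or None."""
    queue, seen = deque([start]), {start}
    while queue:
        r, c = queue.popleft()
        if is_frontier(grid, r, c):
            return (r, c)
        for nr, nc in neighbors(grid, r, c):
            if (nr, nc) not in seen and grid[nr][nc] == FREE:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return None
```

Searching through free cells, rather than taking the straight-line nearest frontier, ensures the chosen target is actually reachable in the known map.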

More sophisticated versions try to coordinate the different robots so that they do not move toward the same frontier cell. One can, for example, use a utility that incorporates the cost of moving to the frontiers and that discounts situations where two or more robots approach the same frontier region. Another often neglected problem in multi-robot exploration is that the robots need to exchange data. In real multi-robot systems, communication relies on wireless networks, typically based on the IEEE 802.11 family of standards (also known as WLAN technology), or on some alternative RF technology. RF links suffer from various limitations; in particular, they have a limited range. Not every robot is necessarily within range of every other robot's transceiver. When using ad-hoc networking, the underlying dynamic routing can transport data over several hops between robots that are in mutual contact, bridging longer distances.

Jacobs Robotics has developed an extension of frontier-based exploration that takes constraints on the exploration, like the limited range of radio links, into account. This algorithm is based on a utility function that weighs the benefits of exploring unknown territory against the goal of not violating constraints, such as keeping communication intact.
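The coordination and communication ideas described above can be combined in a single utility function. The sketch below is a hypothetical greedy assignment in that spirit, not the published Jacobs function. Each robot in turn picks the frontier maximizing gain minus weighted travel distance. Frontiers near an already claimed target have their gain discounted, and targets beyond an assumed radio range of every relay (the base station and the teammates' current positions) incur a penalty. Straight-line distances, the discount radius and all weights are illustrative assumptions; a real system would use path lengths from the map.

```python
import math

Point = tuple[float, float]

# Hypothetical weights and ranges (illustrative assumptions, not published values).
GAIN = 10.0            # expected information gain of an unclaimed frontier
BETA = 1.0             # utility cost per meter of straight-line travel
DISCOUNT_RADIUS = 3.0  # frontiers this close to a claimed target lose most of their gain
DISCOUNT_FACTOR = 0.1  # remaining fraction of the gain after a nearby claim
RADIO_RANGE = 10.0     # assumed usable wireless range in meters
COMM_PENALTY = 100.0   # utility penalty for a target out of range of every relay


def assign_frontiers(robots: list[Point], frontiers: list[Point],
                     base: Point = (0.0, 0.0)) -> list[Point]:
    """Greedily pick one frontier per robot, in robot order."""
    if not frontiers:
        return []
    gains = {f: GAIN for f in frontiers}
    targets = []
    for i, robot in enumerate(robots):
        # Possible relays: the base station and the current positions of the teammates.
        relays = [base] + [p for j, p in enumerate(robots) if j != i]

        def utility(f: Point) -> float:
            u = gains[f] - BETA * math.dist(robot, f)
            if not any(math.dist(f, p) <= RADIO_RANGE for p in relays):
                u -= COMM_PENALTY
            return u

        best = max(frontiers, key=utility)
        targets.append(best)
        # Discount the claimed frontier and its neighborhood for the remaining robots.
        for f in frontiers:
            if math.dist(f, best) <= DISCOUNT_RADIUS:
                gains[f] *= DISCOUNT_FACTOR
    return targets
```

The penalty acts as a soft constraint: a robot may still pick an out-of-range frontier, but only if nothing within radio range offers anywhere near the same utility.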

Virtual Robots for Research and Training Applications

- The Jacobs virtual robots are based on USARSim, a high-fidelity simulation of large-scale urban and indoor environments where different robot platforms can operate in various scenarios. USARSim is an extension of the Unreal Tournament game engine. The underlying Karma physics engine provides kinematically correct robot motions, sensors from a rich selection of standard models can be added to the robots to gather data, and the different USARSim scenarios cover various environmental conditions, ranging for example from difficult terrain as a locomotion challenge to smoke from fires as a visual obstruction. The simulator is mainly designed for Safety, Security, and Rescue Robotics (SSRR), but it is also well suited for work on mobile robots at large. It is an ideal tool for testing prototype implementations of robot algorithms in general, but its main strength from a research perspective is the possibility to investigate multi-robot teams. From an application viewpoint, USARSim is a very beneficial tool for training and exercises.

The virtual robots supplement the real Jacobs rescue robots. Each virtual robot runs the autonomous software developed by Jacobs Robotics, i.e., intelligent functions for perception, control, mapping, planning, cooperation, and so on.

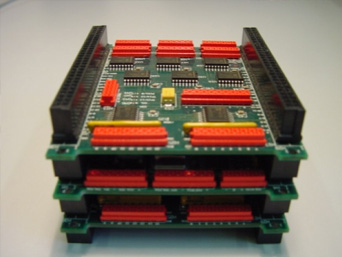

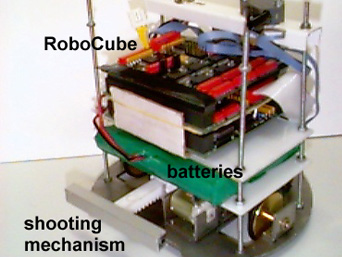

CubeSystem

- The CubeSystem is a collection of hardware and software components for fast robot prototyping. The main goal of the CubeSystem project is to provide an open-source collection of generic building blocks that can be freely combined into an application. The CubeSystem evolved over more than five years of research and development. Its benefits are demonstrated by the various applications in which it is used, ranging from educational activities to industrial projects.

There are various other projects that deal with the development of generic hardware or software libraries for robotics. These projects are typically based on the assumption that a robot's hardware and software are two rather distinct parts, which can easily be brought together by using the right abstractions and interfaces. This view is valid to a considerable extent when it comes to developing application software for commercial off-the-shelf robot hardware. But the development of a robotics application often includes engineering the hardware side as well. The CubeSystem therefore tries to offer a component collection for fast prototyping of complete robots, i.e., both the hardware and the software side.

The most basic parts of the CubeSystem are:

- the RoboCube: a special embedded controller, or more precisely controller family, based on the MC68332 processor

- the CubeOS: an operating system, or more precisely an OS family

- the RobLib: a library with common functions for robotics