
Karlsruhe Institute of Technology - KIT


- Offer Profile

- The research group Humanoid Robots is currently working on the specification and design of humanoid components, on the development of dedicated hardware for sensory data processing and motor control, and on the design of software frameworks that allow for their integration in humanoid robots rich in sensory and motor capabilities.

Product Portfolio

Humanoids

Integrated Humanoid Platform

- In designing our humanoid robots, we desire a humanoid that closely mimics the sensory and sensorimotor capabilities of the human. The robot should be able to deal with a household environment and the wide variety of objects and activities encountered in it.

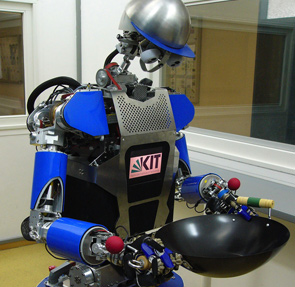

Since 1999, we have been building autonomous humanoid robots with a holistic approach, so that they can perform a wide range of tasks rather than only one particular task. Robots of the ARMAR series (ARMAR-I, ARMAR-II, ARMAR-IIIa and ARMAR-IIIb) have been built to support grasping and dexterous manipulation, learning from human observation and natural human-robot interaction.

The research activities in this area include the specification and design of humanoid components, the development of dedicated hardware for sensory data processing and motor control, and the design of software frameworks that allow for their integration in humanoid robots rich in sensory and motor capabilities.

ARMAR Family

The humanoid ARMAR robots were developed within the Collaborative Research Center 588: Humanoid Robots - Learning and Cooperating Multimodal Robots (SFB 588). In 2000, the first humanoid robot in Karlsruhe was built and named ARMAR. This humanoid had twenty-five mechanical degrees of freedom (DOF). It consisted of an autonomous mobile wheel-driven platform, a body with 4 DOFs, two anthropomorphic redundant arms each having 7 DOFs, two simple grippers and a head with 3 DOFs.

In the design of our robot ARMAR-IIIa in 2006, we desired a humanoid that closely mimics the sensory and sensorimotor capabilities of the human. The robot should be able to deal with household environments and the wide variety of objects and activities encountered in them. ARMAR-IIIa is a fully integrated autonomous humanoid system. It has a total of 43 DOFs and is equipped with position, velocity and force-torque sensors. The upper body has been designed to be modular and lightweight while retaining a size and proportions similar to those of an average person. For locomotion, we employed a mobile platform that allows for holonomic movement in the application area. Two years later, a slightly improved humanoid robot, ARMAR-IIIb, was engineered.

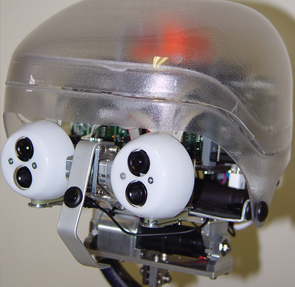

The Karlsruhe Humanoid Head is used in both ARMAR-IIIa and ARMAR-IIIb. Each head has two cameras per eye, one with a wide-angle lens for peripheral vision and one with a narrow-angle lens for foveated vision. It has a total of 7 DOFs (4 in the neck and 3 in the eyes), six microphones and a 6D inertial sensor. Ten copies of this head are already in use throughout Europe.

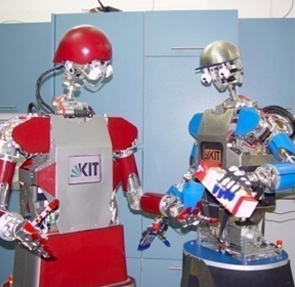

ARMAR-IIIb (left), 2008 and ARMAR-IIIa

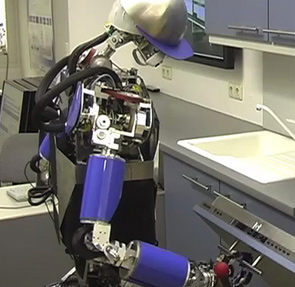

ARMAR-IIIa with the dishwasher

The Karlsruhe Humanoid Head

Grasping and Manipulation

- Planning and execution of grasping motions

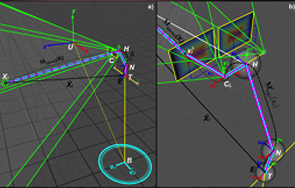

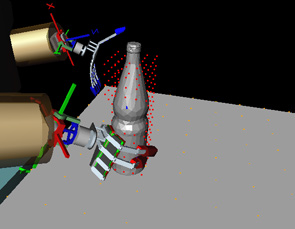

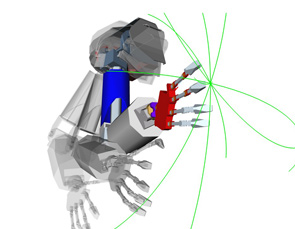

Grasping and manipulation allow a humanoid robot to interact with its environment, so a central component for planning and executing grasping motions is of great importance for the robot's application in everyday environments. We are therefore investigating methods to endow our humanoid robots with these indispensable capabilities. We are developing integrated approaches for the three main tasks needed for grasping and manipulation: grasp planning, solving the inverse kinematics for redundant manipulators, and planning collision-free motions. In particular, we address human-inspired grasp planning, representations of grasping actions and the imitation of human grasping on humanoid robots. In addition, we are integrating different grasping-related methods, developed in the research community by our collaborators, so that our humanoid robots can grasp different classes of known and unknown objects.

Since collision-free trajectories of the robot have to be determined quickly and reliably while taking a changing environment into account, our approaches are based on randomized algorithms such as Rapidly-exploring Random Trees (RRT). The methods for planning collision-free motions enable our humanoid robots to grasp objects with one or both hands, to re-grasp objects and to carry out bimanual manipulation tasks. In addition, we are investigating integrated motion planning approaches that combine the three main tasks of planning a grasping motion into one online planning concept: finding a feasible grasp, solving the inverse kinematics and searching the configuration space for collision-free trajectories. Furthermore, multi-robot planners are being developed that allow the simultaneous execution of cooperative grasping motions. The approaches are evaluated in simulation and on the humanoid robot ARMAR-III.

To allow robust execution of grasping and manipulation motions, visual servoing techniques are applied for accurate positioning of the hand.
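As a rough illustration of the RRT idea mentioned above, the following minimal sketch plans a path for a point in a 2D plane with circular obstacles. It is not the planner used on ARMAR-III, which searches the configuration space of the robot; the function name `rrt_plan`, the circular obstacle model and all parameter values are assumptions made for this example.

```python
import math
import random


def rrt_plan(start, goal, obstacles, bounds, step=0.5, goal_tol=0.5,
             max_iters=5000, goal_bias=0.1, seed=0):
    """Basic 2D RRT. obstacles are (cx, cy, radius) circles (assumed model);
    bounds is (xmin, xmax, ymin, ymax). Returns a list of points or None."""
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}  # index of each node's parent in `nodes`

    def collision_free(p, q, checks=10):
        # Test evenly spaced points on the segment p->q against every circle.
        for i in range(checks + 1):
            t = i / checks
            x = p[0] + t * (q[0] - p[0])
            y = p[1] + t * (q[1] - p[1])
            if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
                return False
        return True

    for _ in range(max_iters):
        # With a small probability, sample the goal to bias growth towards it.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(bounds[0], bounds[1]),
                      rng.uniform(bounds[2], bounds[3]))

        # Nearest existing node (linear search, fine for a small sketch).
        near_idx = min(range(len(nodes)),
                       key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_idx]
        d = math.dist(near, sample)
        if d == 0.0:
            continue

        # Extend from the nearest node towards the sample by at most `step`.
        scale = min(step, d) / d
        new = (near[0] + scale * (sample[0] - near[0]),
               near[1] + scale * (sample[1] - near[1]))
        if not collision_free(near, new):
            continue

        nodes.append(new)
        parent[len(nodes) - 1] = near_idx

        if math.dist(new, goal) <= goal_tol:
            # Walk back through the parents to recover the path.
            path, idx = [], len(nodes) - 1
            while idx is not None:
                path.append(nodes[idx])
                idx = parent[idx]
            return path[::-1]
    return None


# Hypothetical scene: one circular obstacle between start and goal.
obstacles = [(5.0, 5.0, 1.5)]
path = rrt_plan((1.0, 1.0), (9.0, 9.0), obstacles,
                bounds=(0.0, 10.0, 0.0, 10.0))
```

For a manipulator, the sampled points would be joint configurations and `collision_free` would query a collision model of the robot and its environment, but the sample, extend and connect loop stays the same.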

ARMAR-III opens the dishwasher

Bimanual interaction

Learning from Human Observation

- Our guiding principle for teaching robots new tasks is to take inspiration from the way humans learn new skills by imitation. Robot learning by imitation, also referred to as programming by demonstration, is the concept of having a robot observe a human instructor performing a task and imitate it when needed. We rely on this paradigm for robot programming as a powerful tool to accelerate learning in highly complex motor systems, such as humanoid robots.

The main scientific issues in this research area are the capture of everyday human actions, the modeling and representation of human actions, and the connection between learning low-level and high-level representations, leading to generalization across different contexts. Furthermore, we are investigating how to combine imitation and exploration in a single interaction paradigm in which imitation is not only used as a starting point for search, but in which the user remains closely involved in the acquisition of new skills, either by evaluating new solutions tried out by the robot or by providing additional examples to accelerate the learning process when the robot is stuck in an unknown situation.

Learning from Human Observation

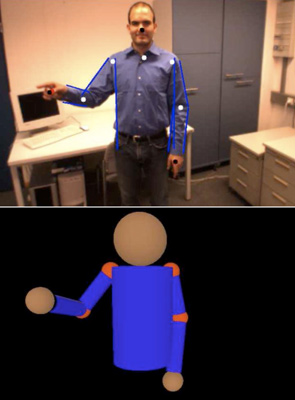

Human Motion Capture and Recognition

- Markerless human motion capture is a prerequisite for imitation learning as well as for human-robot interaction. Our research focusses on real-time stereo-based methods that utilize the stereo camera system of typical humanoid robot heads, which have a baseline comparable to human eye distance. A particle filter is used as the statistical framework.

The listed publications illustrate the development and the advances of our approach, starting with a monocular approach, then introducing 3D hand/head tracking as a separate cue, and in our most recent work incorporating inverse kinematics, adaptive shoulder positions to allow for more flexibility of the model, and a prioritization scheme for cooperative cue fusion. Currently, we can capture 3D human motion with the head of the humanoid robot ARMAR-III in real-time with a processing rate of 20 Hz using conventional hardware. The captured trajectories are used for online reproduction.
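To make the particle filter framework more concrete, here is a minimal bootstrap particle filter that tracks a single scalar position from noisy measurements. It is only a sketch of the predict, weight and resample cycle: the real system estimates a full articulated body pose from image cues, and the random-walk motion model, the Gaussian likelihood, the noise values and the function names below are assumptions chosen for illustration.

```python
import math
import random


def particle_filter_step(particles, measurement, rng,
                         motion_std=0.05, meas_std=0.1):
    """One predict-weight-resample cycle of a bootstrap particle filter."""
    # Predict: diffuse each particle with a random-walk motion model.
    predicted = [p + rng.gauss(0.0, motion_std) for p in particles]

    # Weight: Gaussian likelihood of the measurement given each particle.
    weights = [math.exp(-0.5 * ((measurement - p) / meas_std) ** 2)
               for p in predicted]
    if sum(weights) == 0.0:
        # All particles are far from the measurement; fall back to uniform.
        weights = [1.0] * len(predicted)

    # Resample: draw a new particle set in proportion to the weights.
    return rng.choices(predicted, weights=weights, k=len(predicted))


def estimate(particles):
    """Point estimate: mean of the (equally weighted) resampled particles."""
    return sum(particles) / len(particles)


# Simulated target that drifts slowly while being observed with noise.
rng = random.Random(42)
particles = [rng.uniform(-1.0, 1.0) for _ in range(500)]
true_pos = 0.0
for _ in range(50):
    true_pos += 0.02
    z = true_pos + rng.gauss(0.0, 0.1)
    particles = particle_filter_step(particles, z, rng)
final_estimate = estimate(particles)
```

In a motion capture setting, each particle would be a full body configuration and the likelihood would compare the projected body model with image cues, which is where separate cues such as hand/head tracking can be fused.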

Vision

- The research topics in this area are concerned with on-board robot vision as the primary sensorial channel to perceive the world and endow humanoid robots with the ability to adapt to changing environments. Currently, methods and techniques for object recognition and localization, self-localization, visual servoing and markerless tracking are investigated.

In addition, we are addressing research questions associated with the use of active vision to extend the robot's perceptual capabilities. To this end, kinematic calibration methods for the active Karlsruhe Humanoid Head as well as open-loop and closed-loop control strategies are studied in the context of several tasks such as foveation, visual search and 3-D active vision.
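The closed-loop idea behind foveation can be sketched with a simple proportional controller that repeatedly measures the pixel offset of a target from the image centre and turns the camera by a fraction of the corresponding angle. This is a strongly simplified, simulated example: the pinhole camera model, the decoupled pan and tilt axes, the focal length and the gain are assumptions, and `foveate` is a hypothetical name, not part of any ARMAR software.

```python
import math


def foveate(target_pan, target_tilt, focal_px=800.0, gain=0.5, iterations=20):
    """Centre a target in a simulated pinhole camera with a proportional
    closed-loop controller. Angles are in radians."""
    pan, tilt = 0.0, 0.0
    for _ in range(iterations):
        # Simulated measurement: pixel offset of the target from the centre.
        u = focal_px * math.tan(target_pan - pan)
        v = focal_px * math.tan(target_tilt - tilt)

        # Convert the pixel error back to an angular error and apply a
        # fraction of it, so that calibration errors are corrected gradually.
        pan += gain * math.atan2(u, focal_px)
        tilt += gain * math.atan2(v, focal_px)
    return pan, tilt


pan, tilt = foveate(0.3, -0.2)
```

An open-loop strategy would instead compute the required angles once from the calibrated kinematics and move there directly, which is faster but relies entirely on the accuracy of the calibration.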

Object Recognition and Localization

- Object recognition and 6-DoF pose estimation are among the most important perceptive capabilities of humanoid robots. Accurate pose estimation of recognized objects is a prerequisite for object manipulation, grasp planning and motion planning, as well as for their execution. Our research focusses on developing real-time methods for object recognition and in particular accurate pose estimation for these applications, using the stereo camera system of typical humanoid robot heads with a baseline comparable to human eye distance.
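The role of the stereo baseline in position estimation can be illustrated with the standard relation for a rectified stereo pair, in which depth equals focal length times baseline divided by disparity. The sketch below is a generic textbook computation, not the group's pose estimation method; the focal length, baseline, principal point and pixel coordinates are assumed values, and the function names are hypothetical.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth Z = f * B / d for a rectified stereo pair (pinhole model)."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def triangulate(u_left, v_left, u_right, focal_px, baseline_m, cx, cy):
    """3D point in the left camera frame from matched pixel coordinates."""
    z = depth_from_disparity(focal_px, baseline_m, u_left - u_right)
    x = (u_left - cx) * z / focal_px
    y = (v_left - cy) * z / focal_px
    return (x, y, z)


# Assumed values: 800 px focal length, 0.09 m baseline, 640x480 image centre.
point = triangulate(u_left=448.0, v_left=240.0, u_right=400.0,
                    focal_px=800.0, baseline_m=0.09, cx=320.0, cy=240.0)
```

Because depth is inversely proportional to disparity, a short baseline makes distant points harder to localize accurately, which is one reason accurate pose estimation with a human-like eye distance is demanding.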

Self-Localization

- The fundamental perception capabilities of a humanoid are:

- Self-Localization: Acquire and track the position and orientation of the humanoid by means of active stereoscopic vision;

  - Model-Based Global Localization

  - Model and Appearance Based Dynamic Localization

- Environmental Status Assertion: Solve visual queries about the status of the environment throughout task execution;

  - Pose Queries: 6D pose of an environmental element

  - Trajectory Queries: Proprioceptive and perceptive trajectory for dynamic transformations

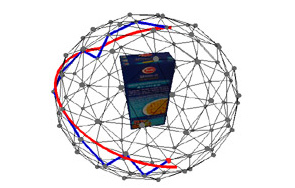

Active Visual Object Search

- Object representations are generated autonomously from an object held in the five-fingered hand. Different salient views of the held object are explored by exploiting the redundancy of the arm. The object is segmented from the hand and background based on Bayesian filtering and fusion techniques. In order to reduce the number of acquired views, we investigate solutions for determining the next best view during exploration.

Exploration

- Humanoid robots operating in human-centered environments should be able to autonomously acquire knowledge about the environment and the objects encountered in it as well as about their own physical body.

The work in this area deals with the integration of proprioceptive and tactile information from the sensor system of the hand with visual information to acquire rich object representations of unknown objects which may enhance the recognition performance. Haptic and visual exploration strategies are investigated to guide the robot hand along the surface of potential object candidates.

In addition, we are investigating how knowledge about the robot's geometry and kinematic parameters can be learned to facilitate autonomous recalibration when the robot's physical body properties change, especially concerning the end effector.

Tactile Exploration

- Besides grasping, there is an interest in object recognition from tactile exploration. Therefore, we have implemented a framework for visual-haptic exploration used to acquire 3D point sets from the tactile exploration process. Initially, we have chosen superquadric functions for 3D object representation and conducted experiments for fitting exploration data. In our future research, we will investigate further types of object representation suitable for object classification, recognition, and creation of grasp affordances.
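For readers unfamiliar with superquadrics, the sketch below evaluates the standard inside-outside function of a superquadric centred at the origin and a simple residual that a fitting procedure could minimize over a set of 3D points. The residual definition, the function names and the sample points are assumptions for illustration; the actual fitting procedure used in the experiments is not described here.

```python
def superquadric_inside_outside(point, scales, exponents):
    """Inside-outside function F of an axis-aligned superquadric at the origin.
    F < 1 inside, F == 1 on the surface, F > 1 outside."""
    x, y, z = point
    a1, a2, a3 = scales        # extents along the x, y and z axes
    e1, e2 = exponents         # shape exponents (1, 1 gives an ellipsoid)
    xy = abs(x / a1) ** (2.0 / e2) + abs(y / a2) ** (2.0 / e2)
    return xy ** (e2 / e1) + abs(z / a3) ** (2.0 / e1)


def mean_squared_residual(points, scales, exponents):
    """Assumed fitting cost: mean of (F - 1)^2 over the explored points."""
    residuals = [(superquadric_inside_outside(p, scales, exponents) - 1.0) ** 2
                 for p in points]
    return sum(residuals) / len(residuals)


# Hypothetical contact points, all lying on the unit sphere.
contact_points = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0),
                  (0.0, 0.0, 1.0), (0.6, 0.8, 0.0)]
cost_sphere = mean_squared_residual(contact_points, (1.0, 1.0, 1.0), (1.0, 1.0))
cost_too_large = mean_squared_residual(contact_points, (2.0, 2.0, 2.0), (1.0, 1.0))
```

A fitting routine would adjust the scales, the exponents and the pose of the superquadric to minimize such a cost over the point set acquired during tactile exploration.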

Self-Exploration and Body Schema

- The hand-eye calibration by traditional means becomes nearly impossible. Humans solve the problem successfully by pure self-exploration, which has led to the adaptation of biologically-inspired mechanisms to the field of robotics. In neuroscience, it is common knowledge that there exists a body schema that correlates proprioceptive sensor information, e.g. joint configurations, with the visible shape of the body. It also represents an unconscious awareness of the current body state.