H05: Universities and Research in Robotics

University of Massachusetts

- Offer Profile

The Laboratory for Perceptual Robotics experiments with computational principles underlying flexible, adaptable systems. We are concerned with robot systems that must produce many kinds of behavior in nonstationary environments. This implies that the objective of behavior is constantly changing, for instance when battery levels change or when nondeterminism in the environment causes dangerous situations (or opportunities) to arise. We refer to these kinds of problem domains as open systems: they are only partially observable and partially controllable. To estimate hidden state and to expand the set of achievable control transitions, we have implemented temporally extended observations and actions, respectively. The world models developed in such systems are the product of native structure, rewards, environmental stimuli, and experience.

We also consider redundant robot systems, i.e., those that have many ways of perceiving important events and many ways of manipulating the world to effect change. We employ distributed solutions to multi-objective problems and propose that hierarchical robot programs should be acquired incrementally, in a manner inspired by sensorimotor development in human infants. We propose to grow a functioning machine agency, building on the observation that robot systems possess a great deal of intrinsic structure (kinematic, dynamic, perceptual, motor) that can be discovered and exploited during ongoing interaction with the world.

Finally, we study robot systems that collaborate with humans and with other robots. A mixed-initiative system can take actions derived from competing internal objectives as well as from external peers and supervisors. Part of our goal concerns how such a robot system can explain why it is behaving in a particular way and can communicate effectively with others.

Product Portfolio

Robots

Research Robots

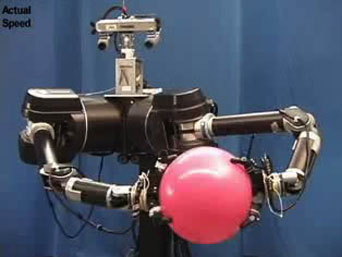

Dexter

- Dexter is a platform for bimanual dexterity, designed to help us study the acquisition of concepts and cognitive representations through interaction with the world.

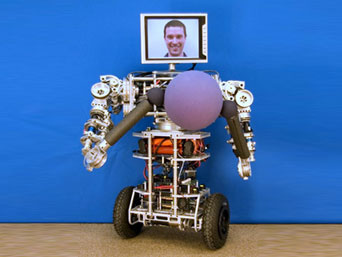

uBot-0,5 - uBot-5

- The 2005 final report for the NSF/NASA Workshop on Autonomous Mobile Manipulation identified mobile manipulation technology as critical for next-generation robotics applications. It also identified a need for appropriate research platforms for mobile manipulation. We predict that the vast majority of interesting and useful mobile manipulation applications will require acquiring, transporting, and placing objects, so-called “pick-and-place” tasks. The uBot-5 was built to address this need.

Segway RMP

ATRV

- The ATRV-series robots, manufactured by iRobot Co., are four-wheeled mobile platforms equipped with sonar sensors and wireless Ethernet communications. LPR currently has an ATRV-Jr. and an ATRV-mini. We have fitted the ATRV-Jr. with a panoramic camera and a Dell Inspiron 8200 laptop for control.

Research Objectives

Control Basis

- Learning in the Control Basis

Machine learning techniques based on Markov Decision Processes (MDPs), such as reinforcement learning (RL), are employed to learn policies for sequencing control decisions in order to optimize reward. The learning algorithm solves the temporal credit assignment problem by associating credit with the elements of a behavioral sequence that lead to reward. However, RL depends on stochastic exploration, and the state space can be enormous for interesting robots. Moreover, any algorithm that depends on completely random exploration will take a long time and will occasionally do something terribly unfortunate in order to learn about the consequences. Below are a number of learning examples in the Control Basis framework.
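To make temporal credit assignment concrete, here is a minimal sketch of plain tabular Q-learning with epsilon-greedy exploration. It is not the Control Basis learner: the toy chain MDP, its single terminal reward, and every parameter value (`n_states`, `alpha`, `gamma`, `epsilon`) are hypothetical choices for illustration. Because reward arrives only at the end of the chain, value has to propagate backward through the whole sequence of moves, and the agent relies on random exploration to find that reward in the first place.

```python
import random


def q_learning_chain(n_states=6, episodes=500, alpha=0.5, gamma=0.9,
                     epsilon=0.2, max_steps=200, seed=0):
    """Tabular Q-learning on a toy chain MDP (hypothetical example).

    States are 0..n_states-1. Action 0 moves left (clamped at 0), action 1
    moves right. Only entering the rightmost, terminal state pays reward 1,
    so credit has to flow back through the whole action sequence.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]

    def choose(s):
        # Epsilon-greedy: stochastic exploration with probability epsilon,
        # otherwise a greedy action with random tie-breaking.
        if rng.random() < epsilon:
            return rng.randrange(2)
        best = max(q[s])
        return rng.choice([a for a in (0, 1) if q[s][a] == best])

    for _ in range(episodes):
        s = 0
        for _ in range(max_steps):
            a = choose(s)
            s_next = max(s - 1, 0) if a == 0 else s + 1
            reward = 1.0 if s_next == goal else 0.0
            # The terminal state has no future value to bootstrap from.
            future = 0.0 if s_next == goal else gamma * max(q[s_next])
            q[s][a] += alpha * (reward + future - q[s][a])
            s = s_next
            if s == goal:
                break
    return q


q = q_learning_chain()
for s in range(len(q) - 1):
    print(s, "right" if q[s][1] > q[s][0] else "left", round(q[s][1], 3))
```

After training, moving right should be preferred in every non-terminal state, with Q(s, right) approaching `gamma ** (goal - 1 - s)`. Even on this six-state toy, the first rewarded episode depends on a random walk, which hints at why purely random exploration scales poorly to large robot state spaces.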

Grasping and Manipulation

- Grasp planning for multiple-finger manipulators has proven to be a very challenging problem. Traditional approaches rely on models for contact planning, which lead to computationally intractable solutions and often do not scale to three-dimensional objects or to arbitrary numbers of contacts. We have constructed an approach for closed-loop grasp control that is provably correct for two and three contacts on regular, convex objects. This approach employs n asynchronous controllers (one for each contact) to achieve grasp geometries from among an equivalence class of grasp solutions. It yields a grasp controller, a closed-loop, differential response to tactile feedback that removes wrench residuals in a grasp configuration. The equilibria establish necessary conditions for wrench closure on regular, convex objects and identify good grasps, in general, for arbitrary objects. Sequences of grasp controllers, engaging sequences of contact resources, can be used to optimize grasp performance and to produce manipulation gaits. The result is a distinctive, sensor-based grasp controller that does not require a priori knowledge of object geometry.
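The idea of per-contact controllers that drive a residual to zero can be illustrated with a heavily simplified sketch, assuming a planar, frictionless, circular object of unit radius and unit-magnitude forces along each inward normal. Under those assumptions every normal passes through the center, so the torque part of the wrench residual vanishes and only the net force `r` remains. Each contact performs gradient descent on ½‖r‖² with respect to its own angle, and contacts are updated one at a time as a rough stand-in for the n asynchronous controllers. The function names, step size, and iteration count are hypothetical; this is not the lab's controller.

```python
import math

# Assumption: planar, frictionless, circular object of unit radius centred at
# the origin. Contact i sits at angle theta_i and pushes along the inward
# normal with unit magnitude. Every normal passes through the centre, so the
# torque residual is zero and only the net-force residual remains.


def force_residual(thetas):
    """Net force r = sum of inward unit normals -(cos t, sin t)."""
    rx = -sum(math.cos(t) for t in thetas)
    ry = -sum(math.sin(t) for t in thetas)
    return rx, ry


def run_grasp_controllers(thetas, step=0.1, iters=2000):
    """Each contact descends phi = 0.5 * |r|^2 with respect to its own angle.

    Contacts are updated one at a time (Gauss-Seidel style), a rough stand-in
    for n independent per-contact controllers.
    """
    thetas = list(thetas)
    for _ in range(iters):
        for i, t in enumerate(thetas):
            rx, ry = force_residual(thetas)
            # d(n_i)/d(theta_i) = (sin t, -cos t), so d(phi)/d(theta_i) = r . that
            grad = rx * math.sin(t) - ry * math.cos(t)
            thetas[i] = t - step * grad
    return thetas


two = run_grasp_controllers([0.0, 1.0])
three = run_grasp_controllers([0.0, 0.3, 1.0])
print((two[1] - two[0]) % (2 * math.pi))   # separation approaches pi
print(math.hypot(*force_residual(three)))  # residual approaches 0
```

Two contacts settle into an antipodal pair and three contacts settle near 120° spacing. Because rotating all contacts together leaves the residual unchanged, the equilibria form a family rather than a single point, which mirrors the equivalence class of grasp solutions described above.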

Programming by Demonstration

- The remote teleoperation of robots is one of the dominant modes of robot control in applications involving hazardous environments, including space. Here, a user is equipped with an interface that conveys the sensory information collected by the robot and allows the user to command the robot's actions. The difficulty with this form of interface is the fatigue experienced by the user, often within a short period of time. To alleviate this problem, we are working with our colleagues at the NASA Johnson Space Center to develop user interfaces that anticipate the user's actions, allowing the robot to assist by performing parts of the task, or even to learn how to perform entire tasks autonomously.
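One simple way an interface might anticipate an operator is to learn, from demonstrated command sequences, which command tends to follow which. The sketch below assumes a first-order (bigram) frequency model and only offers help when the estimated probability clears a threshold. The `NextActionPredictor` class, the command names, and the threshold are all hypothetical; the page does not say how the NASA Johnson Space Center interfaces actually make their predictions.

```python
from collections import Counter, defaultdict


class NextActionPredictor:
    """Hypothetical bigram model of operator commands learned from demonstrations."""

    def __init__(self):
        # transitions[prev][next] = how often `next` followed `prev`
        self.transitions = defaultdict(Counter)

    def observe(self, demo):
        """Record one demonstrated sequence of commands."""
        for prev, nxt in zip(demo, demo[1:]):
            self.transitions[prev][nxt] += 1

    def predict(self, last):
        """Most frequent successor of `last` and its empirical probability."""
        counts = self.transitions.get(last)
        if not counts:
            return None, 0.0
        action, n = counts.most_common(1)[0]
        return action, n / sum(counts.values())

    def suggest(self, last, threshold=0.6):
        """Offer to perform the next step only when the prediction is confident."""
        action, p = self.predict(last)
        return action if action is not None and p >= threshold else None


demos = [
    ["reach", "grasp", "lift", "place"],
    ["reach", "grasp", "lift", "place"],
    ["reach", "grasp", "rotate", "place"],
]
model = NextActionPredictor()
for d in demos:
    model.observe(d)
print(model.predict("grasp"), model.suggest("grasp"))
```

The threshold is a design choice: raising it makes the robot intervene less often but reduces the chance of taking over a step the operator did not intend.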

Knowledge Acquisition

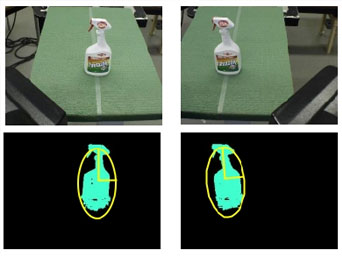

- Learning About Shape

One embellishment that Dexter learned for his pick-and-place policy was how to preshape grasps. The first and second moments of foreground blobs were used to probabilistically learn approach angles and offsets from the object center. This image shows Dexter's visual representation of an object placed on the table.
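The first and second moments mentioned above can be computed directly from a binary foreground mask. The minimal sketch below, in plain Python, takes the centroid from the first moments and the major-axis orientation and principal spreads from the second central moments. The `blob_moments` name and the list-of-rows mask format are assumptions, and how Dexter turned such features into learned approach angles and offsets is not specified here.

```python
import math


def blob_moments(mask):
    """Centroid, orientation and principal spreads of a binary blob.

    mask is a list of rows (y) of 0/1 values (x); image y grows downward.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    m00 = len(pts)  # zeroth moment: pixel count
    if m00 == 0:
        raise ValueError("empty blob")
    # First moments -> centroid.
    cx = sum(x for x, _ in pts) / m00
    cy = sum(y for _, y in pts) / m00
    # Second central moments, normalised by area (a 2x2 covariance).
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / m00
    mu02 = sum((y - cy) ** 2 for _, y in pts) / m00
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / m00
    # Angle of the major axis, measured from the +x axis.
    theta = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    # Eigenvalues of the covariance: variance along the major/minor axes.
    mean = (mu20 + mu02) / 2
    spread = math.sqrt(((mu20 - mu02) / 2) ** 2 + mu11 ** 2)
    return (cx, cy), theta, (mean + spread, mean - spread)


# A 7x3 horizontal bar inside a 9x5 image.
bar = [[1 if 1 <= x <= 7 and 1 <= y <= 3 else 0 for x in range(9)] for y in range(5)]
print(blob_moments(bar))
```

Because image y grows downward, a positive `theta` corresponds to a clockwise tilt on screen. The ratio of the two spreads gives a rough elongation cue, one plausible input when choosing a preshape, though that mapping is an assumption rather than something this page describes.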