- Offer Profile

Established in 1986, the Institute for Robotics and Process Control

(iRP) has become one of the leading robotics research labs in Germany.

Research at the iRP focuses mainly on three fields:

- Industrial robotics

- Medical robotics

- Computer vision

The iRP is involved in several R&D projects with international research

organizations and industrial partners.

Robotic - Basics: MiRPA - Middleware for Robotic and Process Control Applications

-

In cooperation with Volkswagen AG.

The realization of modular and distributed software systems can be simplified by using middleware. As commercial middleware solutions do not meet hard real-time requirements, their usage in control applications has been limited in the past. We have conceived and realized the new middleware MiRPA (Middleware for Robotic and Process Control Applications). This message-driven middleware implementation has been designed to meet the special demands of robotic and automation applications. With MiRPA it is possible to design very modular and open control systems. Figure 1 shows an exemplary setup of a control system for a six-joint industrial manipulator.

All installed modules have only one communication partner: the middleware. Once interfaces have been defined, modules can be added and/or exchanged even during runtime, which makes this software solution very attractive for research purposes. New sensors and controllers can be integrated easily without changing the core of the control system. Rapid control prototyping is significantly simplified by an interface to Matlab/Simulink. Models from Matlab can be executed as MiRPA modules on the real-time target system. The usage of MiRPA is not limited to the field of robotics. Whenever a modular, scalable, and flexible real-time system is desired, MiRPA offers great advantages.
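The idea that every module talks only to the middleware, and that modules can be exchanged at runtime, can be illustrated with a toy message broker. This is a minimal sketch and not the MiRPA API: the class `Broker`, the topic name `force_sensor`, and the two handler functions are hypothetical, and the sketch makes no attempt at hard real-time behavior.

```python
from collections import defaultdict


class Broker:
    """Toy message broker: modules talk to the broker, never to each other."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of handlers

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def unsubscribe(self, topic, handler):
        self._subscribers[topic].remove(handler)

    def publish(self, topic, message):
        """Deliver a message to all current subscribers; return how many got it."""
        handlers = list(self._subscribers[topic])
        for handler in handlers:
            handler(message)
        return len(handlers)


received = []


def joint_controller(message):  # hypothetical consumer module
    received.append(("controller", message["force_z"]))


def logger(message):  # hypothetical module added later
    received.append(("logger", message["force_z"]))


broker = Broker()
broker.subscribe("force_sensor", joint_controller)
broker.publish("force_sensor", {"force_z": 1.5})

# Exchange modules while the system is running: the publisher is unaffected.
broker.subscribe("force_sensor", logger)
broker.unsubscribe("force_sensor", joint_controller)
broker.publish("force_sensor", {"force_z": 2.0})
```

Because the publisher only knows the topic name, swapping `joint_controller` for `logger` requires no change on the sending side.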

Figure 1: MiRPA-based software architecture of a robotic control system.

Figure 2: Hardware architecture corresponding to Fig. 1.

Robotic - Basics: On-Line Trajectory Generation

- This work is motivated by the desire to integrate sensors into robotic environments. The generation of command variables for robotic manipulators has two functions: specification of the geometric path (path planning) and specification of the progression of position, velocity, acceleration, and jerk as a function of time (trajectory planning). The literature provides a very rich set of approaches and algorithms in both fields, which can be subdivided into many categories. However, no existing approach enables the generation of trajectories starting from an arbitrary state of motion. This is an essential requirement when integrating sensors into robot work cells with the aim of realizing sensor-guided and sensor-guarded motions. The robot has to react to sensor events within a single control cycle, and hence trajectory parameters can change arbitrarily. To comply with these demands, the trajectory generator must be able to handle arbitrary input values. Furthermore, its output values have to result in a jerk-limited, time-optimal, and synchronized trajectory.

The Approach of Decision Trees

Decision trees are used as the basis for realizing on-line trajectory generation in N-dimensional space with arbitrary input values (Fig. 1) and synchronization between all degrees of freedom, as shown in Fig. 2. The figure illustrates a simple case of third-order on-line trajectory generation with and without synchronization for three degrees of freedom. In correspondence with Fig. 1, a function that maps the 8N-dimensional space onto the 3N-dimensional space must be specified (with N = 6 for Cartesian space). Defining this function is the major part of this research work. Once defined, it results in the classical trajectory progression with rectangular jerks as shown in Fig. 3, which depicts the most trivial case of a third-order trajectory.
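The rectangular-jerk progression mentioned for the most trivial third-order case can be reproduced by integrating a piecewise-constant jerk signal. The sketch below is not the decision-tree generator itself; it only evaluates a symmetric rest-to-rest 7-phase profile. The function names and the phase durations `t_j`, `t_a`, `t_v` are assumptions chosen for illustration.

```python
def integrate_jerk_phases(phases, p0=0.0, v0=0.0, a0=0.0):
    """Integrate piecewise-constant jerk exactly.

    phases: list of (jerk, duration) pairs.
    Returns the (position, velocity, acceleration) state at every phase boundary.
    """
    p, v, a = p0, v0, a0
    states = [(p, v, a)]
    for jerk, t in phases:
        # Closed-form integration over one phase; update p first so that it
        # still uses the velocity and acceleration from the phase start.
        p += v * t + a * t**2 / 2 + jerk * t**3 / 6
        v += a * t + jerk * t**2 / 2
        a += jerk * t
        states.append((p, v, a))
    return states


def seven_phase_profile(jerk, t_j, t_a, t_v):
    """Symmetric rest-to-rest profile: ramp up, cruise, ramp down."""
    return [
        (+jerk, t_j), (0.0, t_a), (-jerk, t_j),  # accelerate
        (0.0, t_v),                              # constant velocity
        (-jerk, t_j), (0.0, t_a), (+jerk, t_j),  # decelerate
    ]


states = integrate_jerk_phases(seven_phase_profile(1.0, 1.0, 1.0, 1.0))
final_p, final_v, final_a = states[-1]
peak_velocity = states[3][1]
```

With unit jerk and unit phase durations the motion ends at rest, so `final_v` and `final_a` return to zero up to rounding.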

Figure 1: Input and output parameters for a third order on-line trajectory generator.

z is the variable of the z-transformation. Its inverse represents a hold element.

Figure 3: Position, velocity, acceleration, and jerk of a simple 7-phase jerk-limited trajectory.

Figure 2a: Position and velocity diagrams showing the difference between time-synchronized trajectories and non-synchronized trajectories.

Figure 2b: Position and velocity diagrams showing the difference between time-synchronized trajectories and non-synchronized trajectories.

Robotic - Basics: Symbolic Computation of Inverse Kinematics

- In recent years, fundamental problems in the field of the inverse kinematics (IK) of serial-link robots have been investigated within a research project funded by the German Research Foundation (DFG). We have developed methods to automatically generate the inverse kinematic equations for many classes of serial-link robots. A corresponding computer program, SKIP (Symbolic Kinematics Inversion Program), has been implemented and evaluated on hundreds of serial kinematic structures. SKIP computes the closed-form solution for a given kinematic structure by using a set of prototype equations with solutions known a priori. The cost of the inversion is reduced drastically by a careful equation analysis that yields powerful equation features. The figure below gives an overview of the computational flow and the data flow in SKIP.
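A prototype equation with a known closed-form solution can be illustrated with the textbook form a·cos(θ) + b·sin(θ) = c, which appears frequently in inverse kinematics. Whether SKIP's prototype set contains exactly this equation is an assumption; the function name `solve_cos_sin` is hypothetical.

```python
import math


def solve_cos_sin(a, b, c):
    """Solve a*cos(theta) + b*sin(theta) = c for theta (radians).

    Writing a = r*cos(phi), b = r*sin(phi) turns the equation into
    r*cos(theta - phi) = c, so theta = phi +/- atan2(sqrt(r^2 - c^2), c).
    Returns an empty list when no real solution exists.
    """
    r2 = a * a + b * b
    if r2 == 0.0 or c * c > r2:
        return []
    phi = math.atan2(b, a)
    delta = math.atan2(math.sqrt(r2 - c * c), c)
    if delta == 0.0:
        return [phi]  # the two branches coincide
    return [phi + delta, phi - delta]


solutions = solve_cos_sin(1.0, 2.0, 1.5)
residuals = [1.0 * math.cos(t) + 2.0 * math.sin(t) - 1.5 for t in solutions]
```

Matching a derived equation against such a prototype yields all solution branches at once, which is what makes a closed-form inversion attractive compared with numerical iteration.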

Control: Multi-Sensor Integration

- Playing Jenga

To demonstrate the potential of multi-sensor integration in industrial manipulation, a robot was programmed to play Jenga. The aim of this game is to find a loose block in a tower of wooden cuboids, take it out, and put it back onto the top of the tower. The manipulator is equipped with two cameras. One PC is dedicated to image processing and calculates the positions in space of all cuboids on-line. For tactile feedback, a six-degree-of-freedom force/torque sensor and a six-degree-of-freedom acceleration sensor are mounted between hand and gripper. For precise position measurements, an optical triangulation distance sensor is mounted on the gripper. A block is chosen at random and the manipulator tries to push it out of the tower. If the counter force gets too high or if the cameras detect that the tower is swaying, the next cuboid is chosen. Once a block has been pushed far enough, its contour is precisely surveyed by the distance sensor. Now the block can be gripped exactly centered, such that the tower does not move when the gripper closes. In order not to damage the tower, all transversal forces and torques are eliminated while the brick is pulled out. To put a brick back onto the tower, a force-guarded manipulation primitive is set up, which lets the manipulator stop when a certain force is exceeded.

The whole application is programmed on the basis of manipulation primitives, which constitute atomic motion commands. Once the execution of a single primitive is finished, the sensor signals determine which primitive is executed next. In this way a program can be represented as a static manipulation primitive net. The path through the net changes dynamically and depends on the situation in the work cell. At the end of each game, the tower collapses. The record height was 28 stages, which means that 10 additional stages consisting of 29 blocks were put onto the top of the tower.
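The force-guarded motion used when placing a brick back onto the tower can be sketched as a loop that advances in small steps and stops as soon as the measured force exceeds a limit. The simulated axis, its stiffness, and all numeric values below are assumptions for illustration only; the real controller interface is not described in the text.

```python
def guarded_move(read_force, step, force_limit, max_steps):
    """Advance step by step until the measured force exceeds force_limit.

    Returns (steps_taken, stopped_by_force).
    """
    for i in range(max_steps):
        if abs(read_force()) > force_limit:
            return i, True
        step()
    return max_steps, False


class SimulatedAxis:
    """Hypothetical 1-D axis that meets a spring-like surface at contact_at."""

    def __init__(self, contact_at, stiffness, step_size=0.5):
        self.position = 0.0
        self.contact_at = contact_at
        self.stiffness = stiffness
        self.step_size = step_size

    def step(self):
        self.position += self.step_size

    def read_force(self):
        return max(0.0, self.position - self.contact_at) * self.stiffness


axis = SimulatedAxis(contact_at=5.0, stiffness=4.0)
steps, stopped = guarded_move(axis.read_force, axis.step,
                              force_limit=3.0, max_steps=100)
```

In this simulation the axis stops one step after first contact, because the force is only checked once per control step.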

Industrial Robotics: Automatic Planning and Execution of Assembly Tasks

-

This project aims at developing methods for automated robot programming. While product markets are changing rapidly, the costs of installing production lines are increasing dramatically. Thus, a strong demand arises for programmable, flexible tools that support the robot programmer. Our aim is to develop a CAD-based interface for robot programming, such that a programmer may give instructions like 'put the object onto plane' by just clicking on appropriate surfaces in a virtual environment. Such a system should generate the robot programs automatically, so that time-consuming and costly teaching becomes redundant.

System Overview

Fig. 2 describes the system, which has been developed in our institute. In the first step the assembly group is specified using symbolic spatial relations (Fig. 3). The user only has to click on the appropriate surfaces to do this. Possible contradictions and errors produced by the user can automatically be detected by the system.

After specification of assembly groups, assembly sequences are generated applying the assembly-by-disassembly strategy.
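The assembly-by-disassembly strategy can be sketched as follows: repeatedly remove a part that is not blocked by any remaining part, then reverse the removal order. The part names and the `blocked_by` relation are hypothetical; in a real planner the blocking information would come from geometric tests on the CAD models rather than from a hand-written table.

```python
def assembly_sequence(parts, blocked_by):
    """Derive an assembly order by reversing a feasible disassembly order.

    blocked_by[p] is the set of parts that must be removed before p can be removed.
    """
    remaining = set(parts)
    disassembly = []
    while remaining:
        removable = sorted(
            p for p in remaining if not (blocked_by.get(p, set()) & remaining)
        )
        if not removable:
            raise ValueError("no removable part left; product cannot be disassembled")
        part = removable[0]  # deterministic choice among the candidates
        disassembly.append(part)
        remaining.remove(part)
    return list(reversed(disassembly))


# Hypothetical, heavily simplified headlight with four parts.
blocked_by = {
    "housing": {"reflector", "bulb", "lens"},
    "reflector": {"bulb", "lens"},
    "bulb": {"lens"},
    "lens": set(),
}
sequence = assembly_sequence(blocked_by.keys(), blocked_by)
```

Reversing works because a part that can be removed without collision can, under the same geometric assumptions, also be inserted along the reversed motion.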

After determining the assembly sequence a collision free path planner has to be applied.

Furthermore, the assembly operations have to be transformed into appropriate skill primitive nets. A skill primitive net consists of skill primitives arranged in a graph, where the nodes represent the skill primitives and the edges are annotated by entrance conditions. Each skill primitive represents one sensor based robot motion.
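A skill primitive net as described above can be represented as a dictionary that maps each node to its outgoing edges, where every edge carries an entrance condition evaluated on the current sensor readings. The node names, the force threshold, and the scripted sensor values are assumptions made for this sketch.

```python
def run_net(net, start, read_sensors, max_steps=20):
    """Execute a primitive net and return the sequence of visited nodes.

    net: {node: [(entrance_condition, next_node), ...]}
    read_sensors(node) returns the sensor readings after the primitive ran.
    """
    node = start
    trace = [node]
    for _ in range(max_steps):
        sensors = read_sensors(node)
        for condition, next_node in net.get(node, []):
            if condition(sensors):
                node = next_node
                trace.append(node)
                break
        else:
            return trace  # no entrance condition holds: the net terminates
    return trace


net = {
    "approach": [
        (lambda s: s["force"] > 2.0, "insert"),   # contact established
        (lambda s: s["force"] <= 2.0, "search"),  # no contact yet
    ],
    "search": [(lambda s: True, "approach")],
    "insert": [],  # terminal primitive
}

# Scripted readings standing in for real force/torque measurements.
readings = iter([{"force": 0.5}, {"force": 0.0}, {"force": 3.0}, {"force": 0.0}])
trace = run_net(net, "approach", lambda node: next(readings))
```

The net itself is static; only the path taken through it depends on the sensor values observed at run time.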

With this concept, many different sensors can be used simultaneously. Currently we have employed cameras and force torque sensors.

Planning of such processes is carried out in a virtual environment, hence displacements between the real world and the virtual world may occur. These displacements are handled successfully by skill primitive nets. Thus, a system for planning, evaluation, and execution of assembly tasks is provided.

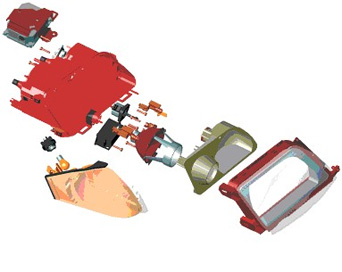

Fig. 1: The automotive headlight assembly

- Fig. 1 illustrates a complex aggregate: an automotive headlight assembly consisting of more than 30 parts. The geometric description of the parts is available. The complete product is also specified; that is, each object has its goal position defined. First of all, assembly sequences are generated for the product.

Fig. 2: An overview of the entire system from specification up to execution

Fig. 3: The specification tool for the definition of symbolic spatial relations integrated into a commercial robot simulation system

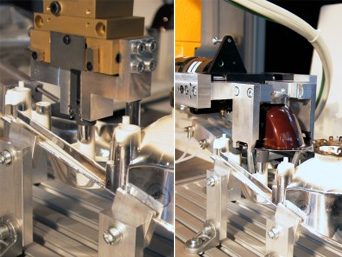

Fig. 6: Sensor based execution of assembly tasks

Mobile Robots: MONAMOVE - a flexible transport system for manufacturing environments

- MONAMOVE:

MOnitoring and NAvigation for MObile VEhicles

The flexible and automated flow of materials, e.g. between different work cells and a computer-controlled warehouse, is becoming more and more important in modern factory environments. An obvious way to obtain such flexibility is to use autonomous guided vehicles (AGVs). Many of the AGV concepts known from the literature use highly specialized on-board sensor systems to navigate in the environment. In contrast to these concepts, our flexible transport system MONAMOVE uses only simple, low-cost on-carrier sensors in combination with a global monitoring system and a global navigation system. This combination of global monitoring and global navigation enables the carriers to navigate without any fixed predefined paths.

Mobile Robots: Statistical Motion Planning for Mobile Robots

- In real environments, motion planning for mobile robots generally has to take into account the presence of moving obstacles. Two types of approaches to this problem prevail: 1. Obstacle motions are presumed to be known exactly; then, a collision-free robot trajectory can be planned, e.g., in configuration-time space. 2. Obstacles are ignored until they are close to the robot; during its motion the robot reacts by performing evasive movements. Both approaches have drawbacks because their presumptions do not properly reflect reality: usually, obstacle motions cannot be predicted precisely, yet some information about the average behavior of obstacles can be obtained easily. Thus, the former approach is mainly of theoretical interest, while the latter may be quite inefficient as it uses local information only. Consequently, we have developed a new concept that incorporates statistical data in order to respect obstacle behaviors: statistical motion planning. It yields efficient robot paths that are adapted to the prevailing motions of obstacles. Furthermore, this approach realizes a fundamental principle in robotics: the robot is adapted to its environment (and not vice versa), so that the environment is minimally disturbed by the robot.

Even in simple situations, the selection of optimal robot paths depends on many factors (e.g. obstacle density, direction of motion, velocities). Mathematical models are thus a crucial foundation for statistical motion planning. To describe obstacle motions, two models -- differing in precision and complexity -- have been developed: Stochastic trajectories permit a precise evaluation of robot paths with respect to collision probability and expected driving time (which takes into account that the time to reach the goal also depends on the costs for non-deterministic evading maneuvers). The stochastic grid is a simpler representation, which is used in order to plan robot trajectories with minimum collision probability.

The paths generated by the statistical methods have been evaluated and compared to results obtained with a conventional planner, which minimizes the path length. Naturally, the statistically planned paths are longer as they purposely incorporate detours. In dynamic environments, however, the detours significantly decrease collision probabilities and the expected driving time compared to the conventional trajectories.
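The trade-off between path length and collision probability can be illustrated on a small grid in which each cell stores a collision probability. The sketch below is not the institute's planner. It assumes independent per-cell probabilities (all below 1), so that minimizing the sum of -log(1 - p) maximizes the probability of a collision-free run, and it adds a small step penalty of 0.01 as a further assumption to avoid needless wandering. The grid values are made up.

```python
import heapq
import math


def plan(grid, start, goal, cell_cost):
    """Dijkstra on a 4-connected grid; cell_cost(p) is the cost of entering a cell."""
    rows, cols = len(grid), len(grid[0])
    best = {start: 0.0}
    parent = {}
    queue = [(0.0, start)]
    while queue:
        cost, cell = heapq.heappop(queue)
        if cell == goal:
            break
        if cost > best[cell]:
            continue  # stale queue entry
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols:
                new_cost = cost + cell_cost(grid[nr][nc])
                if new_cost < best.get((nr, nc), math.inf):
                    best[(nr, nc)] = new_cost
                    parent[(nr, nc)] = cell
                    heapq.heappush(queue, (new_cost, (nr, nc)))
    path = [goal]
    while path[-1] != start:
        path.append(parent[path[-1]])
    return path[::-1]


def survival(grid, path):
    """Probability of traversing the path without collision (independence assumed)."""
    prob = 1.0
    for r, c in path[1:]:
        prob *= 1.0 - grid[r][c]
    return prob


# Made-up stochastic grid: the middle column is a busy corridor.
grid = [
    [0.0, 0.6, 0.0],
    [0.0, 0.6, 0.0],
    [0.0, 0.0, 0.0],
]
shortest = plan(grid, (0, 0), (0, 2), lambda p: 1.0)
safest = plan(grid, (0, 0), (0, 2), lambda p: -math.log(1.0 - p) + 0.01)
```

Here the length-minimizing path crosses the busy corridor, while the probability-aware path takes a detour around it, mirroring the comparison described above.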

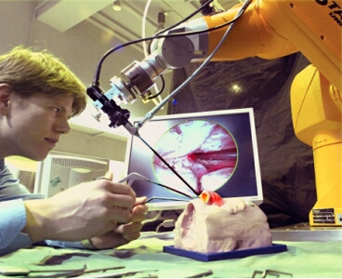

Robotics in Surgery: Robot Assisted Endoscopic Sinus Surgery

- Problem Description

Today most operations in the nasal sinuses (cavities in the skull near the nose), for example the removal of adenoids, are performed in a minimally invasive way through the natural opening of the nose with the help of an endoscope. The endoscope acts as a light source in one direction and a camera in the other direction. The surgeon, holding the endoscope in one hand, does not see the place of operation directly but a camera picture of it on a screen. In the other hand she/he usually holds an instrument, e.g. a special knife used to cut away tissue. Normally, this causes some bleeding, so a sucker (another instrument) is needed to remove the blood and the cut tissue from the nose. The surgeon often switches between these instruments during an operation. This increases the duration of the operation and makes the process cumbersome and unergonomic for the surgeon.

Project Description

Within the scope of a cooperative research project with the Klinik und Poliklinik für Hals-Nasen-Ohrenheilkunde/Chirurgie of the "Rheinische Friedrich-Wilhelms-Universität Bonn" we are investigating methods that allow a robotic manipulator to guide an endoscope completely autonomously during an endonasal operation. The objective of the project is intelligent guidance of the endoscope that fulfils the following requirements:

- The tip of a selected instrument is always in the center of the camera view.

- The endoscope is placed in such a way that the surgeon has as much free space as possible for his or her own movements.

- Critical structures of the patient (e.g. brain, eyes) have to be avoided and fragile structures have to be touched carefully and only if necessary.

A robotic manipulator takes over the guidance of the endoscope

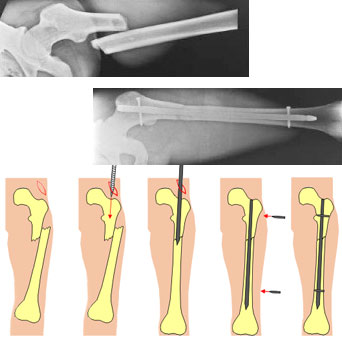

Robotics in Surgery: Reposition of Femoral Shaft Fractures

- Problem Formulation

In this research project, methods for the robot-assisted fixation of fractures of the femoral shaft (the femur is the human thigh bone) are developed and evaluated in cooperation with the Department of Trauma Surgery of the Hannover Medical School. Such fractures are often the result of high-energy traumas like traffic accidents and are nowadays usually fixed with a so-called intramedullary nail. The x-ray images below illustrate such a fracture, which is stabilized with a nail.

The quality of the operation result can be measured by two parameters: the length of the leg and the rotation about the leg axis. Depending on the type of fracture, the precise re-establishment of these parameters can be very difficult for the surgeon to achieve. If these parameters deviate too much from the physiologically correct values, a second, corrective operation might become necessary.

Project Goals

The primary goal of this research project is the development and evaluation of computer and robot assisted methods in order to support this challenging surgical procedure. With the combination of image analysis, force/torque guided robot control, and preoperative planning and simulation, the achievable reduction accuracies should be increased.

Pose Estimation of Cylindrical Objects for a Semi-Automated Fracture Reduction - Summary

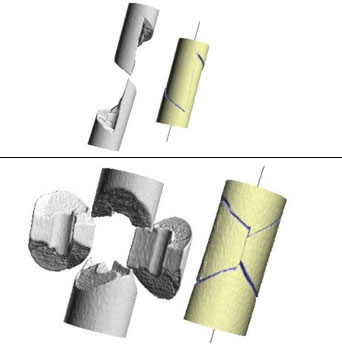

Below we present the results of our method for computing the relative target transformations between broken cylindrical objects in 3d space.

We first compute the positions and orientations of the axes of every cylindrical object. This is achieved by a specially adapted Hough transform. These axes are the most important attributes for the segmentation of fractured bones and can also be used as an initial pose estimation (constraining 4 of the overall 6 degrees of freedom of the reduction problem).

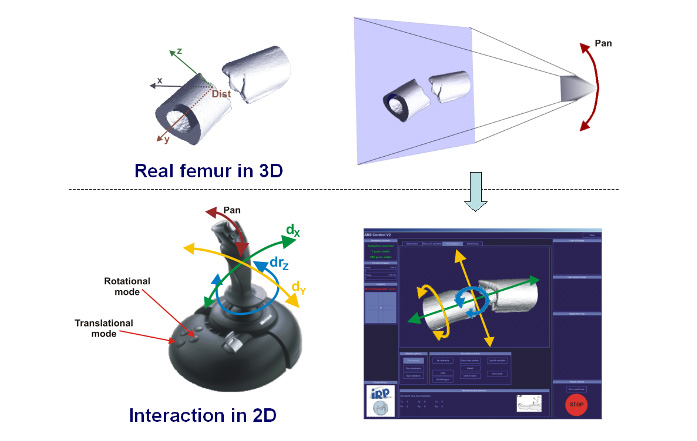

After these preprocessing steps, the relative transformation between corresponding fracture segments can be computed using well-known surface registration algorithms. Here we are using a special 2D depth image correlation and a variant of the ICP (Iterative Closest Point) algorithm. One project goal is to use these methods for computing the target poses of bone fragments in order to allow for a computer-assisted, semi-automated fracture reduction by means of a robot.

Fracture Reduction using a Telemanipulator with Haptic Feedback - Summary

We developed a complex system that allows a robot to be used as a telemanipulator for supporting the fracture reduction process. Our robot is a standard industrial Stäubli RX 90 robot. The robot is controlled by the surgeon by means of a joystick with haptic feedback. Intraoperative 3D imaging of the fracture provides the basic information for the surgeon during reduction. These 3D volume images are automatically segmented by the PC, resulting in highly detailed surface models of the fracture segments (cf. the figure below), which can be used by the surgeon to precisely move the fragments to the desired target poses. An optical navigation system ensures that the 3D scene presented on the PC display is always in accordance with the real surgical situation; the virtual 3D models always move in the same way as the real bone fragments, which are moved by the robot.

All forces and torques acting in the operation site can be measured by means of a force/torque sensor mounted at the robot's hand. These forces are fed back to the joystick. This way, the surgeon is able to feel the forces acting on the patient because of distracted muscles or contacts between the fracture segments.

Results

In a first test series, the telemanipulator system was evaluated in our anatomy lab using broken human bones (without surrounding soft tissue). It could be shown that reduction accuracies with mean values of about 2° and 2 mm can be achieved for simple fractures. Even for complex fractures the achievable accuracy stays below 4°. From a clinical point of view, these values are more than acceptable.

Furthermore, the telemanipulator system was also tested on human cadavers, i.e. complete specimens with intact soft tissue around the broken bone. The results were similar to those outlined above. In addition, we could show that telemanipulated reductions achieve significantly higher reduction accuracies than manual reductions performed by an experienced surgeon on the same fractures.

Conclusion

The presented form of visualization and interaction with a telemanipulator system for fracture reduction in the femur turned out to be efficient and intuitive. All test persons have been able to perform reliable reductions with high reduction accuracies after only a short time of learning. These results clearly show the potential of robotized fracture reduction, which will ensure high quality outcomes of such operations in the future.

Top: X-ray image of a broken femur [Source: AO Principles of Fracture Management]

Bottom: The course of an intramedullary nailing operation

Top: Spiral fracture

Bottom: Complex Fracture

The interaction principle of a 3D telemanipulated fracture reduction

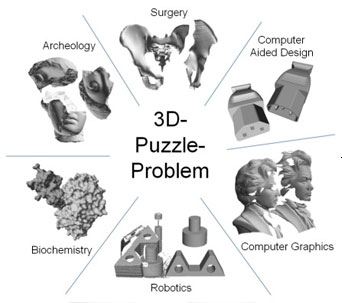

3D-Puzzle-Problem

-

The reconstruction of three-dimensional fragmented

objects (3d-puzzle-problem) is a highly relevant task with many

applications. The field of application comprises e.g. archaeology,

surgery, bioinformatics, computer graphics and robotics. Examples are

the reconstruction of broken archaeological artefacts, human bone

fracture reduction in surgery, protein-docking, registration of

surfaces, and the assemblage of industrial components.

This project considers the whole processing chain, starting from data acquisition with different sensors, the general registration of surfaces, up to special requirements for matching fragments in different applications. In this context, novel and efficient pairwise matching approaches have been developed, which are highly robust against measurement inaccuracies, material deterioration and noise. In their basic configuration, both methods search for a relative pose, where the surface contact between all fragments is as high as possible.

Furthermore, a priori knowledge of the broken objects (like shape priors, mirror symmetries and symmetry axes) can be used to increase the efficiency, accuracy and robustness.

* funded by the German Science Foundation (DFG).

Intelligent Room

-

At our institute we are developing new visual monitoring systems for elderly persons. Our long-term aim is a monitoring system that allows elderly persons to live a long, independent, and secure life in their home environment. The monitoring system does not take over nursing tasks; instead, it safeguards the health of the person while affecting daily life as little as possible. Using cameras and image processing techniques has the advantage that the system remains invisible to the user. Moreover, no interaction between the user and the system is needed.

A first version of the system is already operational and is currently being tested in a real home environment. This version uses a fish-eye camera mounted on the ceiling of the room and can automatically detect falls. When a fall is detected, the system can make an emergency call. We are using different model-based and model-free approaches for the tracking and the recognition of falls. The following figures illustrate a blob-based approach, where the different body parts are modeled as color blobs.

Using fish-eye cameras has the advantage of mapping the whole room onto just one image. A single pinhole-like or pan-tilt-zoom camera can only map a part of the room.

To detect falls at night we are integrating active approaches. Infrared lights are mounted at different locations in the room, preferably on the ceiling. The shadow information is used to distinguish between a standing and a lying person. As can be seen in the following figures, the shadow of a standing person is much bigger than that of a lying person.

So far we have only considered fall detection, but fall prevention is another challenging task. Changes in gait can be caused by diseases and can result in a fall. Visual fall prevention allows these changes to be detected and, for example, the general practitioner to be notified.

This work has been supported by Deutsche Telekom, which is gratefully acknowledged.

Standing person

Lying person

Shadow simulation of a standing person

Driver Assistance: Rear-PreCrash

- Video sensors have become very important for automotive applications due to the ongoing cost reductions of cameras and technical advances in image processing hardware. Some series vehicles are already equipped with a monocular rear camera as the only rear-facing sensor, but the high potential of this camera for assistance systems is still unexploited. One desired application is a sensor system for time-to-collision estimation, which is motivated by the high frequency of rear-end collisions in road traffic. The severity of the resulting passenger injuries may be reduced by an onboard system that estimates the time of possible rear-end crashes and triggers immediate preparations, e.g. moving each headrest into an optimal position or tightening the seat belts.
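One common way to estimate time to collision with a single camera uses the growth of an object's image width between two frames. The text does not state which estimator is used in this project, so the sketch below is an illustration under the assumptions of a pinhole camera, a vehicle of constant physical width, and constant closing speed. The function name and all numbers are hypothetical.

```python
def time_to_collision(width_prev, width_curr, dt):
    """Time to collision (seconds) at the moment of the current frame.

    For a pinhole camera the image width is w = f * W / Z, so with constant
    closing speed the remaining time is dt * w_prev / (w_curr - w_prev).
    Returns infinity if the object is not growing in the image.
    """
    growth = width_curr - width_prev
    if growth <= 0:
        return float("inf")
    return dt * width_prev / growth


# Synthetic check: a 1.8 m wide car closes in from 20 m at 10 m/s,
# observed with a focal length of 800 pixels at 10 frames per second.
focal_px, car_width, closing_speed, dt = 800.0, 1.8, 10.0, 0.1
z_prev = 20.0
z_curr = z_prev - closing_speed * dt
w_prev = focal_px * car_width / z_prev
w_curr = focal_px * car_width / z_curr
ttc = time_to_collision(w_prev, w_curr, dt)
```

The estimate needs neither the focal length nor the true vehicle width, which is what makes scale-based approaches attractive for monocular cameras.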

In cooperation with Volkswagen we are investigating the feasibility of reliable real-time vehicle detection and time-to-collision estimation with rear cameras. The task of the iRP in this project is to develop the video sensor software that delivers state information about approaching vehicles to the actuating safety elements provided by VW.

Methods

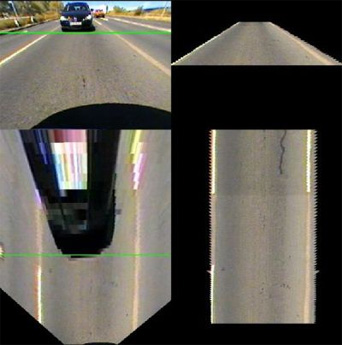

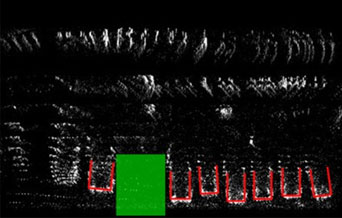

In many of our approaches for vehicle detection we use a top-down view that is generated by projecting the camera pixels onto the street via Inverse Perspective Mapping (IPM), as can be seen in the image below and in the corresponding video. In this view we are looking down onto the street plane at a right angle. Thus, no perspective mapping has to be considered when calculating the distance between two arbitrary points of the street plane, which simplifies many algorithms.
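The core of inverse perspective mapping is the intersection of a pixel's viewing ray with the street plane. The sketch below assumes an ideal pinhole camera without lens distortion, mounted at a known height and pitched downwards by a known angle, with a flat street. The function name and parameter values are hypothetical.

```python
import math


def pixel_to_ground(u, v, focal_px, cam_height, pitch):
    """Map a pixel to street-plane coordinates.

    u, v: pixel offsets from the principal point (u to the right, v downwards).
    pitch: downward tilt of the optical axis in radians.
    Returns (lateral, forward) in the units of cam_height, or None if the
    viewing ray does not hit the street (at or above the horizon).
    """
    denom = v * math.cos(pitch) + focal_px * math.sin(pitch)
    if denom <= 0:
        return None
    scale = cam_height / denom
    lateral = scale * u
    forward = scale * (focal_px * math.cos(pitch) - v * math.sin(pitch))
    return lateral, forward


center = pixel_to_ground(0.0, 0.0, 500.0, 1.0, math.radians(45.0))
right = pixel_to_ground(100.0, 0.0, 500.0, 1.0, math.radians(45.0))
sky = pixel_to_ground(0.0, -1000.0, 500.0, 1.0, math.radians(45.0))
```

Applying this mapping to every pixel below the horizon yields the top-down view, in which distances on the street plane can be measured directly.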

One of our approaches uses this top-down-view to generate a street texture that describes the expected appearance of the street. In the figure below (a video is also available) you can see the generated street texture in the right column and the source images in the left column. The top row shows the view as seen from the camera and the bottom row shows the corresponding top-down-view. Source image and street reference texture are compared in order to detect approaching vehicles indicated in the figure below and in the video with a green line.

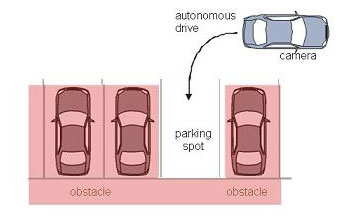

Driver Assistance: Parking spot detection by digital image analysis

-

Introduction

More and more cars are equipped with driver assistance systems (DAS). They provide the driver with information and support and thus can help to prevent accidents and contribute to safer traffic. Some of these systems, like ABS or ESP, have been in successful use for many years. Others, like automatic lane detection or infrared vision, are just being developed and installed in newer cars. Modern DAS are designed to simplify driving or to increase the driver's comfort and reduce fatigue. One of these modern systems is the parking assistant, which automatically maneuvers the car into a parking spot. But before the parking process can actually start, a parking spot has to be detected. In state-of-the-art systems, the driver has to locate a spot, and ultrasonic sensors are then used to measure its size. In cooperation with Volkswagen AG, we are developing a vision-based system that is able to locate parking spots automatically. Compared with ultrasonic sensors, cameras have great advantages due to their wide field of possible applications. With the help of image processing, almost any desired information can be extracted from camera images. Furthermore, cameras have a long range of sight, which allows data to be gathered even at great distances. Our system uses cameras with fisheye lenses and structure from motion to obtain information about the surrounding area. The collected data is then interpreted to locate parking spots.

Setup and Methods

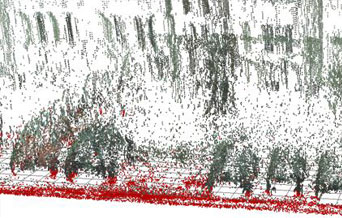

Fisheye cameras have the advantage of delivering a large field of view. It is possible to get a 180° view with just one camera. Therefore two cameras suffice to cover the area on the left and right side of a moving car. They were installed in the side mirrors of a test vehicle provided by Volkswagen. The following picture shows an example of a right view.

As we only have one camera on each side, we cannot use stereo vision to gather 3D information. But because the car is moving, we can use structure from motion. This technique uses two views of a scene from different viewing angles to triangulate points in the 3D world and calculate their position. The result is a 3D scatter diagram of the area passed.
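The triangulation step behind structure from motion can be illustrated in a planar, top-down simplification: the same point is observed under two bearings from two camera positions that are separated by a known driving distance. This is a sketch under idealized assumptions (exact bearings, straight motion, known baseline) and not the project's implementation; the function name is hypothetical.

```python
import math


def triangulate(bearing_1, bearing_2, baseline):
    """Intersect two viewing rays in the ground plane.

    The camera is at (0, 0) for the first view and at (baseline, 0) for the
    second; bearings are measured counter-clockwise from the driving direction.
    Returns the (x, y) position of the observed point.
    """
    parallax = math.sin(bearing_2 - bearing_1)
    if abs(parallax) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    # Range along the first ray, from s * sin(b2 - b1) = baseline * sin(b2).
    s = baseline * math.sin(bearing_2) / parallax
    return s * math.cos(bearing_1), s * math.sin(bearing_1)


# Synthetic check: a point at (3, 4) seen before and after driving 1 m.
b1 = math.atan2(4.0, 3.0)
b2 = math.atan2(4.0, 3.0 - 1.0)
x, y = triangulate(b1, b2, 1.0)
```

The smaller the parallax angle between the two rays, the more sensitive the result becomes to bearing errors, which is why distant points are reconstructed less accurately.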

Results

An example of such a scatter diagram can be seen below (image: 3D Scatter diagram). Every point in this diagram represents a real 3D point. Points at street level are colored red; obstacles (points above street level) are displayed in black. If every 3D point is projected onto its corresponding point in the ground plane, a top-down view is created that shows the whole scene as seen from above. Patterns now become visible that can be identified clearly. Cars, for example, form a pattern shaped like the letter "U". If such patterns are found in the top-down view, the positions of the cars are known. Free parking spots are then detected by searching for free space between these cars. For an illustration of the process, see the following image. Recognized cars are highlighted in red, free space is marked green (image: Topdown view of the scene). If a parking space is found, the automated parking process can be initiated.

Experimental vehicle: Paul

The current experimental vehicle Paul (German: "Parkt allein und lenkt") uses our vision-based parking spot detection. Paul was presented at the Hannover fair 2008 and demonstrates Volkswagen's Park Assist Vision system.

Right view with a fisheye camera

3D Scatter diagram

Topdown view of the scene

Paul at the Hannover fair 2008

Robotic Systems for Handling and Assembly (SFB 562)

- The Institute for Robotics and Process Control

participates in the Collaborative Research Center 562 "Robotic Systems for

Handling and Assembly", which is chaired by Prof. Dr.-Ing. Wahl.

The aim of the Collaborative Research Center 562 is the evolution of methodical and component related fundamentals for the development of robotic systems based on closed kinematic chains in order to improve the promising potential of these robots, particularly with regard to high operating speeds, accelerations, and accuracy.

To reduce the sequence time for handling and assembly applications, the most essential goal is to improve operating speeds and accelerations in the working space for a given process accuracy. With conventional serial robot systems these increasing requirements end in a vicious circle. Under these circumstances the demand for new robotic systems based on parallel structures is of major importance.

Owing to their framework construction from low-mass rod elements, parallel structures offer an ideal platform for active vibration reduction. The integration of these adaptronic components with special adaptive control elements is a promising and effective way to make robots both more accurate and faster, and consequently more productive.

The basic topics in the Collaborative Research Center 562 are:

- Design and modeling of parallel robots

- Robot control and information processing

- New components for parallel robots

Participating institutes:

- Institute of Machine Tools and Production Technology (IWF) (www.iwf.ing.tu-bs.de)

- Institute for Programming and Reactive Systems (IPS) (www.ips.cs.tu-bs.de)

- Institute for Microtechnology (IMT) (www.imt.tu-bs.de)

- Institute of Control Engineering (IfR) (www.ifr.ing.tu-bs.de)

- Institute for Engineering Design (IK) (www.ikt.tu-bs.de)

- Institute of Computer and Communication Network Engineering (IDA) (www.ida.ing.tu-bs.de)

- Institute of Composite Structures and Adaptive Systems (www.dlr.de/fa)

HEXA II

TRIGLIDE

PARAPLACER

FÜNFGELENK