Navigation : EXPO21XX > AUTOMATION 21XX >

H05: Universities and Research in Robotics

> Czech Technical University

Czech Technical University

Videos

Loading the player ...

- Offer Profile

- Welcome to Intelligent and

Mobile Robotics group!

The conducted research is focused on design and development of intelligent mobile robots - self-guided vehicles. The overall goal is to develop highly robust cognitive control system for this kind of robots and to bring novel ideas into particular solutions. For central tasks are considered: sensing of the environment, sensor data processing and data understanding, all leading towards automated world model building and/or updating. The used knowledge representations of the world are designed and optimized for planning of robot activities for self-navigation in real (indoor) environments. As such system requires highly complex control and decision-making the crucial parts is always the sensing and sensor data understanding.

Product Portfolio

Research Topics

- Inertial Navigation

This section describes research results of localization methods based on inertial measurement data, provided by accelerometers and gyroscopes. Main target areas of this topic cover the accuracy research of pure inertial approaches and possibilities of inertial methods fusion with an extroceptive methods.

Robot Control Systems

This section describes results of a research and development of systems for mobile robot control.

Rescue Mission Support

The aim of this topic is overview, monitoring and decision support for operators and coordinators of rescue missions. The base components of the support system are dynamic mission map, communication framework and visualization interfaces.

Safety Critical System and Diagnostic System

The software application in transport and industry must fulfill severe safety and quality requirements. The rate of random and systematic failure is decreased by formal methods for specification and by software testing. The diagnostic system decrease the price of system maintenance and time period of failure.

Motion planning

The aim of motion planning is find an optimal collision-free path in a configuration space for one or multiple robots in order to fulfil some task (go from a start to a goal position, find an object in the space, clean the working area, etc.) Our group deals with planning algorithms for one robot (obstacle avoidance) as well as planning methods for a group of robots (coverage and exploration problems).

Mobile Robot Localization based on Range Measurements Processing

In order to effectively explore a working environment and to autonomously build a map of the environment, a robot has to localize its position. The insufficient leng-term accuracy provided by simple methods like odometry leads us to develop robust localization methods based on data fusion from several different sensors. Most methods are based on processing measuremens of distances to the surrounding obstacles, provided by ultrasonic or laser range-finders.

Map Building for Mobile Robots

Presence of a working environment map is useful for robot navigation. This section present several approaches for building various types of environment maps, designated for robot or human use.

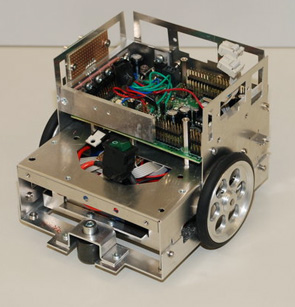

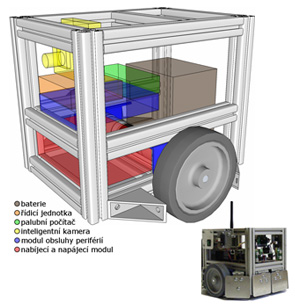

Experimental Hardware

This section describes experimantel hardware developed and used in our laboratory.

Current Projects

SyRoTek

- The aim of the SyRoTek (“System for robotic e-learning”)

project is to research, design, and develop novel methods and approaches to

building a multi-robot system for distance learning. The foreseen system

will allow its remote users to get acquainted with algorithms from areas of

modern mobile and collective robotics, artificial intelligence, control, and

many other related domains. Advanced users will be able to develop own

algorithms and monitor behaviour of these algorithms on-line during real

experiments. The proposed system reduces a development process and allows a

wide spectrum of both individuals and institutions to work with real robotic

equipment.

The main components of the robotic platform – mobile robots – are expected to move inside a restricted area, which will also contain other elements like obstacles or objects related to objectives of the actually solved task. Moreover, several sensors (infrared, sonars, cameras, etc.) will be used to gather information about the actual status of the playfield and particular objects on it. Some sensors will be placed onboard the robots, while others will be stand-alone getting global overview of the playfield status. The user will be able not only to observe gathered data using internet interface, but also control the robots in real-time. Unlike existing e-learning robotic systems developed in the world in which the user can only tele-operate robots, behaviour of the robots in the SyRoTek system can be modified, while the system allows to run own algorithms developed by the user.

MorBot

VenZeTmy

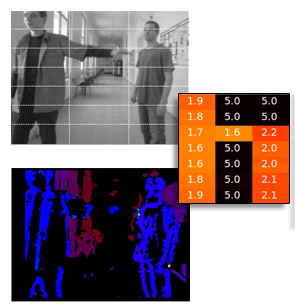

- The aim of the project is to develop a new kind of an aid

for visually impaired. The project is managed by Integrace. We are working

on the obstacle detection system. We use the stereo camera STOC for basic

obstacle detection.

The aid consists of the sensor for obstacle detection and a tactile display which is integrated to a shirt. The tactile display has 7x3 vibrating actuators. The position of an obstacle in front of the user determines which actuator is activated.

The scene (left) with two obstacles. The disparity map (right) is constructed from stereo images. The distances to objects are used to determine the activation matrix (middle).

Experimental results and demonstrators

SyRoTek Stage extension

- The video shows functionality of Stage (from Player/Stage project) extended by IMR. It now supports multiple independent views (each view can be configured separately, allowing the same functionality as a standard Stage window). Moreover each view can play a video source (both file and live stream) which can be displayed together with sensor data and other data produced by Stage. The camera calibration tool finding correct transformation between Stage scene and video is also provided. Finally, the plug-in that allows usage of Stage as a stand-alone viewer has been developed. More info can be found at: http://lynx1.felk.cvut.cz/syrotek

Autonomous Surveillance with an UAV

- This video shows an autonomous flight of a drone, which has to take pictures of target areas designated by white cards. The drone uses a navigation method presented in the article "Simple, Yet Stable Bearing Only Navigation". The method is based on image analysis and dead-reckoning. A neural network based method is used to plan the drone path in order to ensure its precise positioning over the goals. More details will be published on November 2010.

Solution of Bugtrap benchmark using RRT-Path

- The task is to move the red stick into the 'Bugtrap'. This is easier than to take it outside the bugtrap. The 'Bugtrap' is a benchmark for motion planning. The solution was obtained using modification of Rapidly Exploring Random Tree: RRT-Path.

Airport snow shoveling

- The video shows several experiments with shoveling snow by a formation of mobile robots. The path for the formation (and all its robots) is planned using Receding Horizon Control approach. In the first part of the video several simulations show ability to cope with coupling/decapling of the formation and dynamic obstacle avoidance. Then two indoor experiments show how to turn the formation in a blind road (e.g. at the end of a runway) and how to shovel a snow in an airport. The last experiment with P3AT robot shows P3AT mobile robot shoveling a pavement in a park. In the last experiment the robot is navigated by SURFnav algorithm.

Parrot AR-Drone autonomous takeoff and landing

- This video demonstrates the ability of the AR drone to takeoff and land on a moving vehicle. The ARDrone bottom camera is used to recognize a simple pattern on the moving robot and the drone either howers above or lands on the pattern.

Parrot AR-Drone autonomous flight

- This video shows a fully autonomous unmanned aerial vehicle following a short pre-learned path. The quadcopter is first manually guided around by a teleoperator. During the teleoperated flight, image processing algorithms (SURF,SIFT) recognize salient objects from the image of the on-board camera. Positions of these objects are estimated and put in a threedimensional map. In the autonomous mode, the quadcopter loads a relevant map and matches the mapped objects to the currently visible ones. In this way, the quadcopter is able to estimate it position with a precision higher than conventional GPS. Details on the navigation method are in the article: Krajnik et.al. : "Simple, Yet Stable Bearing Only Navigation", Journal of Field Robotics, Sep, 2010.

Probostov lake autonomous drive

- This video shows a fully autonomous robot following a pre-learned path. The robot is first manually guided around the lake on one kilometer long path and creates a map of objects in the path vicinity. After that, the robot traverses the path several times on its own. The robot is learned the map in early morning and traverses it repeatedly until evening. The experiment shows, that the navigation algorithm is able to deal with changing illumination throughout the day. The video is speeded up 20 times - in fact, it takes one hour to navigate the whole path. One should realize, that using GPS for this task is not feasible, because under trees, the GPS signal suffers from reflexions and occlusions and GPS precision is 10-30 meters. The visual navigation algorithm presented here achieves 0.3 m precision. Details on the navigation method are in the article: Krajnik et.al. : "Simple, Yet Stable Bearing Only Navigation", Journal of Field Robotics, Sep, 2010.

Probostov lake autonomous drive

- This is related to "Probostov lake autonomous drive" video, where a mobile robot traverses one km long path autonomously. Here, you can see robots perpective of the world, i.e. the view from robot onboard camera during learning phase. Notice, that during learning, there is a slight rain and during navigation, the weather is sunny. Details on the navigation method are in the article: Krajnik et.al. : "Simple, Yet Stable Bearing Only Navigation", Journal of Field Robotics, Sep, 2010.

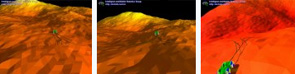

Motion planning of a simple terrain rover

- Motion planning for a simple rover on a terrain. The motion was planned using RRT algorithm and the green tree represents actual (local) plan.

Alpha Puzzle Benchmark

- Solution of Alpha Puzzle benchmark using RRT. More details about the puzzle can be found at: http://parasol.tamu.edu/

Solution of Flange Problem Using RRT

- Example of a motion planning for a 3D rigid object using Rapidly Exploring Random trees (RRT). The details about the 'Flange problem' can be found at: http://parasol.tamu.edu/

Piano Mover's Problem

- Example of a motion planning of a 3D rigid object in a 3D environment, which is known as 'The Piano Mover's problem'. Here the environment was reconstructed using laser range finder data

Reconstruction of 3D scan 3

- Process of reconstruction of 3D scan of hall. Hall was scanned by robot equiped with laser range-finder placed verticaly. Individual scans were aligned using odometry.

Reconstruction of 3D scan 2

- Process of reconstruction of 3D scan of room. Room was scanned by robot equiped with laser range-finder placed verticaly. Individual scans were aligned using odometry.

Reconstruction of 3D scan 1

- Process of reconstruction of 3D scan of hall. Hall was scanned by robot equiped with laser range-finder placed verticaly. Individual scans were aligned using odometry.

Navigation in Large Outdoor Environments

Topological mapping of a large environment

- The 'Large Map' (LaMa) framework enables to build a topological map of a (possibly large) environment. The mobile robot is able to follow a path using a simple camera vision based method. When the crossing is reached the Lama decision module determines which outgoing edge will be traversed.

Gaze controlled intelligent wheelchair

- A wheelchair is fitted by a I4Control device, which enables to control it by gaze. Algorithms of mobile robotics domain are applied to ensure user safety and comfort. Apart from directly controlling wheelchair movement direction, the user can issue orders like 'move forward 2 m' or 'turn by 45 degrees'. Moreover, destinations in a known map can be passed to the wheelchair, which takes care of safe navigation.

PeLoTe Structure Colapse Experiment

- During the mission execution some unexpected situation can occur. Previously passable corridor is blocked due to structure collapse. Rescuer reports this situation to an operator who makes modification in the map and invokes re-planning to solve the collision situation. Modifications in the map as well as refreshed path plans are then immediately distributed among all teammates and the mission can continue

PeLoTe Merlin Explore Dangerous Area

- During the mission a rescuer discovered an area classified as dangerous, which was previously seen as closed in the map. Human tele-operator makes changes in the map. New map updates are distributed to all entities. The rescuer who discovered the dangerous area than remotely controls a following Merlin robot to observe an area, which is human inaccessible. The observations made in this area are then announced to tele-operator, who makes decisions and necessary modifications of the map through its GUI.

PeLoTe PeNa Experiment

- PeNa (Personal Navigation system part of Pelote project) supporting humans to become the remote end entities in a tele-operated task. This concept extends their capabilities by navigation, assistance and sharing of data from remote knowledge bases. The core of the localization is human dead reckoning. The dead reckoning is based on a compass, Inertial Measurement Unit (IMU), a wireless step-length measurement unit and a laser. The laser data is also used for map building and localization.The accurate position of all team-members (humans and robots) on a map gives a clear view of the situation to the operator. PeNa was also presented to professional firefighters of Wuerzburg in their training facilities.

PeLoTe ER1 Experiment

- Presentation of functionality of robots ER-1, which served besides Merlin robots in PeLoTe project. Movie shows robot ER-1 performance in professional firefighters' of Wuerzburg training facilities.

PeLoTe Experiment Start

- Initialization procedure of experiment startup. Human rescuer approaches the operational area. He can see a prior map of the environment in its GUI and expects commands from mission coordinator. Mission coordinator invokes path planning module, which generates trajectories for both the human and robotic entities in a coordinated manner. Path plans are then distributed to all relevant entities and mission can start.

SLAM

- Simultaneous Localization and Mapping (SLAM) is a basic task of mobile robotics. SLAM using laser range-finder is shown in this movie. Robot moves thru environment, incrementally builds map of its environment and subsequently localizes itself in this partial map. Every new laser scan of the environment ,slightly different from previous one (difference is caused by robot's motion) is localized in context of partial map and new information is added to the map. Method point to point matching is used for localization of the scan in the map.

MTSP Hierarchical approach

- Multiple traveling salesman problem is mathematical description of the whole class of problems solved in robotics. We try to find way for group of robots to every 'city' be visited by one robot. The length of ways is objective of optimization. Self organizing Kohonen's neuron network with MinMax criterion is used in this approach. Hierarchy is made above the cities. Solution for one set of cities (with less cities) is used as initial state for bigger set´.

Outdoor navigation

- Navigation in outdoor environment is challenging task. Robot uses camera and laser range-finder to detect paths-way in the park. Robot recognizes terrain as rideable according to color and texture from camera as well as shape and reflectance from laser range-finder. Classifier is learning color of the path-way based on laser's information. Subsequently, path-ways are segmented from camera picture. Best way for the robot is find in this segmented picture and also the crossings are detected.

Multi-Robot Exploration

- A problem of exploring an unknown environment by a team of mobile robots is solved by combination of multi-agent architecture A-globe with frontier based exploration technique. The A-globe architecture allows solving the exploration problem with limited communication accessibility and with changing number of participating robots. A novel method combining A* search with harmonic potential fields is used in frontier based path planning. An improved version of the Iterative Closest Point localization algorithm has been developed to increase its speed and robustness. The whole exploration framework has been implemented and tested in both simulated and real environments.

Robotour delivery challenge

- Robotour is a competition, where fully autonomous robots have to travel approximatelly 1km long route on paved passsages in a park. The route is given to the team 10 minutes before the match. Robots have to stay on the paved ways and avoid obstacles. This video shows some basic functionalities of competing robots deployed by IMR student team. Using the SURFNav navigation system at Robotour 2008, our team won the tournament highly surpassing score of other opponents.

3D indoor mapping

- 3D shape reconstruction of the environment. Robot equipped by two laser range-finders collected range data of the laboratory. First laser range-finder was used for localization of the robot and second one was placed perpendicular to floor and measured distances to the walls and furniture. You can see raw localized data as well as some representation more suitable for robotics purposes. 3D occupancy grid is useful for sensor data fusion or path planning. Representation as surface normals is suitable for environment reconstruction for human operators. Triangulation is representation well known from computer graphics.

3D outdoor mapping

- 3D shape reconstruction of the environment. Robot equipped by two laser range-finders and camera collected range data at the university campus. Camera and odometry was used for robot localization, laser rangefinder and camera data were fused to obtain 3D dataset. Note map improvement as the robot completes the loop.