- Offer Profile

- The main scope of the Robotics and Cognitive Systems Group is to perform and promote research on application problems that arise in the areas of robotics, computer vision, multimodal integration, haptics, image analysis and understanding, quality control, visual surveillance, and intelligent sensor networks. The tools the group uses to advance the frontiers of science, and the corresponding research areas of interest, are:

- Artificial Vision (including Machine Vision, Cognitive Vision and Robot Vision)

- Intelligent Systems (such as Fuzzy Systems and Artificial Neural Networks)

- Sensor Data Fusion

- Pattern Recognition

Research topics

Cognitive Vision

- Cognitive science is the interdisciplinary study of mind and intelligence, embracing philosophy, psychology, artificial intelligence, neuroscience, linguistics, and anthropology. Attempts to understand the mind and its operation go back at least to the Ancient Greeks, when philosophers such as Plato and Aristotle tried to explain the nature of human knowledge. The study of mind remained the province of philosophy until the nineteenth century, when experimental psychology developed.

The intellectual origins of cognitive science lie in the mid-1950s, when researchers in several fields began to develop theories of mind based on complex representations and computational procedures. Its organizational origins lie in the mid-1970s, when the Cognitive Science Society was formed and the journal Cognitive Science began publication. Since then, more than sixty universities in North America, Europe, Asia, and Australia have established cognitive science programs, and many others have instituted courses in cognitive science.

Visual and other kinds of images play an important role in human thinking. Pictorial representations capture visual and spatial information in a much more usable form than lengthy verbal descriptions. Computational procedures well suited to visual representations include inspecting, finding, zooming, rotating, and transforming. Such operations can be very useful for generating plans and explanations in domains to which pictorial representations apply.

In the Department of Production Management and Engineering (PME) of the Democritus University of Thrace (DUTH) in Greece, intensive research in cognitive vision has been carried out. Its results include the construction of disparity, saliency, and depth maps, as well as algorithms for extracting optic flow in complex backgrounds and for estimating motion.

Image Stabilization

- Digital image stabilization is the process that compensates for undesired fluctuations of a frame's position in an image sequence. Image stabilization techniques consist of two successive units: the motion estimation unit, followed by the motion compensation (or correction) unit. During motion estimation, the global motion vector is extracted; it is composed of two principal components, the intended camera movement and the unwanted one. The accuracy of this estimation is important because the compensation unit corrects each frame according to the estimated vector, so any estimation error affects the final output.

Digital stabilization preserves the intentional camera movements while smoothing the unwanted oscillations out of the video output. Almost every acquired image sequence is affected by noise and undesired camera jitter. Depending on the application, these fluctuations are caused by rough terrain, a shaking hand, and so on. Image stabilization is a necessity, as vision plays a key role in many applications, including automatic localization, mapping, and navigation. The output image sequence should therefore be free of noise and smooth enough for useful results to be extracted.

Image stabilization is application dependent. In the case of a camera mounted on an active servo mechanism, the undesired oscillations are mostly rotational, and stabilization is implemented by servo motors that compensate for the pan and tilt camera movements, respectively. This technique is known as optical stabilization. When electronic hardware is used, the process is referred to as electronic stabilization. Finally, when only pure image processing techniques are adopted, it is called digital image stabilization (DIS): the process of preserving the intended camera motion while removing unwanted noise and motion effects by means of digital image processing. DIS can be performed in real time or offline, and as a pre-processing or a post-processing step.
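The two-unit structure described above (motion estimation, then motion compensation) can be sketched in a few lines of Python with NumPy. This is a minimal illustration, not the group's DIS method: it assumes grayscale frames of equal size and purely translational global motion, uses phase correlation as the motion estimation unit, and treats a moving average of the accumulated trajectory as the intended camera motion, so that whatever deviates from it is the unwanted jitter to be compensated. All function names are hypothetical.

```python
import numpy as np


def estimate_shift(prev, curr, eps=1e-9):
    """Motion estimation (assumed translational): integer (dy, dx) such that
    curr is approximately prev shifted by (dy, dx), via phase correlation."""
    f_prev = np.fft.fft2(prev)
    f_curr = np.fft.fft2(curr)
    cross = f_curr * np.conj(f_prev)
    cross /= np.abs(cross) + eps          # keep only the phase difference
    corr = np.real(np.fft.ifft2(cross))   # sharp peak at the displacement
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    h, w = prev.shape
    # Peaks past the midpoint correspond to negative (wrapped-around) shifts.
    if dy > h // 2:
        dy -= h
    if dx > w // 2:
        dx -= w
    return int(dy), int(dx)


def smooth_trajectory(traj, radius=2):
    """Moving-average filter; the smoothed path is taken as the intended motion."""
    kernel = np.ones(2 * radius + 1) / (2 * radius + 1)
    padded = np.pad(traj, ((radius, radius), (0, 0)), mode="edge")
    return np.stack(
        [np.convolve(padded[:, k], kernel, mode="valid") for k in range(traj.shape[1])],
        axis=1,
    )


def stabilize(frames, radius=2):
    """Estimate global motion, separate intended from unwanted motion, compensate."""
    shifts = [(0, 0)] + [estimate_shift(a, b) for a, b in zip(frames[:-1], frames[1:])]
    trajectory = np.cumsum(np.array(shifts, dtype=float), axis=0)  # global motion path
    intended = smooth_trajectory(trajectory, radius)
    correction = np.rint(intended - trajectory).astype(int)        # undo the jitter
    stabilized = [np.roll(f, tuple(c), axis=(0, 1)) for f, c in zip(frames, correction)]
    return stabilized, trajectory, intended
```

`np.roll` wraps pixels around the image border; a practical stabilizer would crop or pad the compensated frames instead, and would also have to handle rotational motion.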

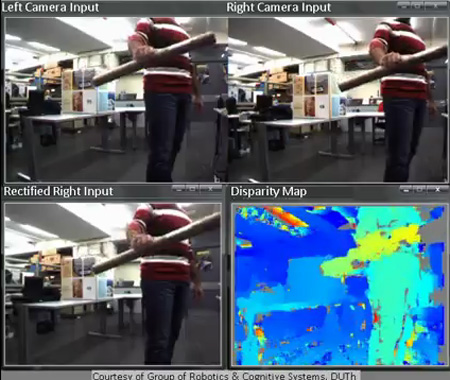

Stereo Vision

- The stereo correspondence problem is of great importance in the field of Machine Vision. It concerns the matching of points, or any other primitive, between a pair of images of the same scene. Assuming a calibrated and rectified stereo setup, matching points lie on corresponding horizontal lines. The disparity is the distance between matching points when one of the two images is projected onto the other, and the disparity values for all image points form the disparity map. Once the stereo correspondence problem is solved, the depth of the scene can be estimated.
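As an illustration of how a disparity map and depth can be computed for a rectified pair, the sketch below uses brute-force block matching with a sum-of-absolute-differences (SAD) cost along each row, then converts disparity d to depth with the standard triangulation relation Z = f * B / d, where f is the focal length in pixels and B the baseline. SAD matching is only one simple choice among many cost functions and is not necessarily the algorithm used by the group; the function names and the parameters `max_disp`, `half_win`, `focal_px`, and `baseline_m` are assumptions for this example.

```python
import numpy as np


def disparity_sad(left, right, max_disp=16, half_win=2):
    """Brute-force SAD block matching along the rows of a rectified pair.

    For each left-image pixel, search the same row of the right image for the
    shift d in [0, max_disp] whose window differs least (sum of absolute
    differences). Border pixels without a full search range are left at 0.
    """
    h, w = left.shape
    left = left.astype(float)
    right = right.astype(float)
    disp = np.zeros((h, w), dtype=int)
    for y in range(half_win, h - half_win):
        for x in range(half_win + max_disp, w - half_win):
            patch = left[y - half_win:y + half_win + 1, x - half_win:x + half_win + 1]
            costs = [
                np.abs(patch - right[y - half_win:y + half_win + 1,
                                     x - d - half_win:x - d + half_win + 1]).sum()
                for d in range(max_disp + 1)
            ]
            disp[y, x] = int(np.argmin(costs))  # best-matching horizontal shift
    return disp


def depth_from_disparity(disp, focal_px, baseline_m):
    """Z = f * B / d for d > 0; zero disparity maps to infinite depth."""
    disp = np.asarray(disp, dtype=float)
    with np.errstate(divide="ignore"):
        return np.where(disp > 0, focal_px * baseline_m / disp, np.inf)
```

The nested loops make the cost of this naive search explicit (rows times columns times candidate disparities times window size), which is one reason such algorithms are often optimized or moved to dedicated hardware for real-time use.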

This problem is of interest in the contexts of 3D reconstruction, virtual reality, robot navigation, Simultaneous Localization and Mapping (SLAM), and many other areas of production, security, defense, exploration, and entertainment. It is usually addressed in software running on general-purpose hardware. However, many tasks require real-time performance without the use of a PC, so algorithms have also been implemented and optimized directly in hardware. The evolution of FPGAs has made them an appealing choice in this direction.

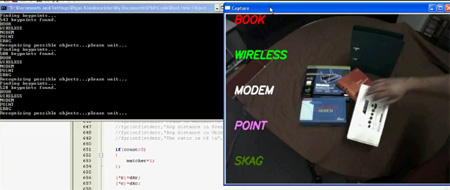

Object Recognition

- In the last decade, pattern recognition has flourished and become one of the most popular tasks in computer vision. Recognizing objects in a scene is one of the oldest tasks in the computer vision field and still one of the most challenging. Every pattern recognition technique is directly related to decoding the information contained in the natural environment. During the past few years, remarkable efforts have been made to build new vision systems capable of recognizing objects in cluttered environments.

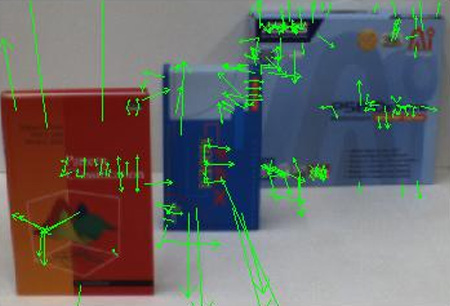

Moreover, emphasis has been given to recognition systems based on local appearance features. Local neighborhood data are discerned by efficient detectors and organized by descriptors. The main idea behind interest location detectors is the pursuit of points or regions with unique information in a scene: these spots or areas contain data that distinguish them from others in their local neighborhood. A detector's efficiency therefore relies on its ability to locate as many distinguishable areas as possible, in an iterative process.

In turn, a descriptor organizes the information collected by the detector in a discriminative manner, transforming locally sampled feature descriptions into high-dimensional feature vectors. In other words, the parts of an object located in a scene are represented by descriptors, and arranging these descriptors coherently yields the final object representation. During the last decade, several important techniques of this kind have been presented, such as SIFT (Scale Invariant Feature Transform) and SURF (Speeded-Up Robust Features).
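To make the role of descriptors concrete: once a detector has found interest points and a descriptor has turned each neighborhood into a feature vector (for example, 128-dimensional for SIFT), object parts are usually found by comparing vectors between a model and a scene. The sketch below assumes descriptors are already available as NumPy arrays and uses Euclidean nearest-neighbour matching with the distance-ratio test commonly used with SIFT; the function name and the `ratio` threshold of 0.8 are illustrative assumptions, not part of the group's pipeline.

```python
import numpy as np


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Nearest-neighbour matching of feature vectors with a distance-ratio test.

    desc_a: (n, k) array; desc_b: (m, k) array with m >= 2.
    Returns (i, j) pairs meaning row i of desc_a matches row j of desc_b.
    """
    desc_a = np.asarray(desc_a, dtype=float)
    desc_b = np.asarray(desc_b, dtype=float)
    # Pairwise Euclidean distances between every descriptor in A and in B.
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        nearest, second = np.argsort(row)[:2]
        # Accept only if the best candidate is clearly closer than the runner-up,
        # i.e. the descriptor is distinctive rather than ambiguous.
        if row[nearest] < ratio * row[second]:
            matches.append((i, int(nearest)))
    return matches
```

The ratio test rejects descriptors that lie almost equally close to two candidates, which is exactly the case where a distinctive local neighborhood, as described above, is missing.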

Research within Funded Projects:

Infra

- The fundamental objective of the INFRA project is to research and develop novel technologies for personal digital support systems, as part of an integral and secure emergency management system to support First Responders in crises occurring in Critical Infrastructures under all circumstances.

The specific objectives of the project fall under the following categories:

Communications objectives, which involve the research and development of an integral and interoperable wireless communications system that will allow First Responders to have reliable means of communications as they enter subway tunnels and buildings with thick concrete walls.

First Responders objectives, which entail the research and development of a robust indoor site navigation system based on three location sensors (an inertial sensor, a wireless sensor and a video sensor), a video annotation system for First Responder PDAs, sensors for real time identification of radiation exposure and hazardous materials, and applications for gas leakage and hidden fire detection.

Standardization objectives, which include R&D of a European-level proposal for the standardization of the communications and applications framework proposed by INFRA.

Demonstration objectives, which consist of demonstrating the validity of the standards, communications, and First Responder applications being developed by INFRA.

DUTh is responsible for the following key tasks:

- Implementation of reliable real-time indoor mapping based on an inertial sensor

- Implementation of reliable real-time indoor mapping based on existing 802.11 Wi-Fi networks

- Visual-inertial data fusion for indoor mapping
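One common way to fuse an inertial estimate with absolute position fixes, such as those from Wi-Fi or from vision, is a Kalman filter: inertial dead reckoning predicts the position and its growing uncertainty, and each fix corrects it in proportion to the relative confidence of the two sources. The sketch below is a deliberately one-dimensional illustration that assumes Gaussian noise with known variances; the `Estimate` type, the function names, and the default variances are hypothetical and do not describe the INFRA implementation.

```python
from dataclasses import dataclass


@dataclass
class Estimate:
    """Hypothetical 1-D state: position along a corridor and its variance."""
    position: float  # metres
    variance: float  # square metres


def predict(est, inertial_step, step_var):
    """Dead reckoning: add the displacement integrated from the inertial sensor.
    The variance grows at every step because inertial errors accumulate."""
    return Estimate(est.position + inertial_step, est.variance + step_var)


def update(est, fix, fix_var):
    """Correct the prediction with an absolute position fix (e.g. Wi-Fi or visual)."""
    gain = est.variance / (est.variance + fix_var)  # 1-D Kalman gain
    return Estimate(est.position + gain * (fix - est.position),
                    (1.0 - gain) * est.variance)


def fuse(start, steps, fixes, step_var=0.05, fix_var=0.5):
    """Alternate prediction and correction; a fix of None means none was available.
    The default variances are illustrative assumptions, not measured values."""
    est = start
    history = []
    for step, fix in zip(steps, fixes):
        est = predict(est, step, step_var)
        if fix is not None:
            est = update(est, fix, fix_var)
        history.append(est)
    return history
```

Between fixes the variance only grows, so the filter relies increasingly on the next absolute fix; this is the usual motivation for combining drifting inertial data with an independent position source.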

Acroboter

- The project aims to develop a radically new robot locomotion technology that can be used effectively in a home and/or workplace environment to manipulate small objects, either autonomously or in close cooperation with humans. Furthermore, the robot could assist the occupants of the room by following spoken directions or by offering assistance with their movements or exercises. This new type of mobile robot will be designed to move fast and in any direction in an indoor environment.

The whole system is divided into several sub-systems:

- the moving platform, which depends on anchor-point units placed in a raster fixed to the ceiling of the room,

- the pendulum-like structure, i.e. the swinging unit (SU), which hangs on a wire,

- the winding mechanism (WM), which provides the necessary vertical movements,

- Place on the climber unit (CU),

- the vision system (VS), which comprises four cameras installed in the four corners of the room and one mounted on the CU.

DUTh is responsible for the vision system (VS) of ACROBOTER, which in turn must provide vital visual information concerning:

- the position of the platform in the 3D working space,

- the topology of possible objects/obstacles in the platform's trajectory.

The overall goal is to adequately accomplish demanding manipulation tasks. Furthermore, the VS is responsible for three tasks that directly affect the overall efficiency of the project:

- the estimation of the SU's pose in the room,

- the reconstruction of the 3D working environment of the platform,

- the recognition of objects found in the scene.
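Estimating a 3D position from several fixed, calibrated cameras, such as the four corner cameras of the VS, is commonly done by triangulation. The sketch below shows linear (DLT) triangulation of a single point, assuming the 3x4 projection matrix of each camera is known from calibration and the point has already been located in each image; it is a generic textbook method offered for illustration, not the ACROBOTER vision system itself, and the function name is hypothetical.

```python
import numpy as np


def triangulate(projections, pixels):
    """Linear (DLT) triangulation of one 3-D point seen by several cameras.

    projections: list of 3x4 camera matrices P_i (assumed known from calibration).
    pixels: list of (u, v) image coordinates of the same point in each camera.
    Returns the 3-D point (X, Y, Z) that minimizes the algebraic error.
    """
    rows = []
    for P, (u, v) in zip(projections, pixels):
        P = np.asarray(P, dtype=float)
        # Each view gives two linear equations in the homogeneous point X:
        # u * (P[2] @ X) - P[0] @ X = 0 and v * (P[2] @ X) - P[1] @ X = 0.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]  # back from homogeneous to Euclidean coordinates
```

With more than two views the system is overdetermined, so the SVD solution spreads measurement error across all cameras; in practice a refinement step that minimizes reprojection error is usually added.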

Rescuer

- Improvement of the Emergency Risk Management through Secure Mobile Mechatronic Support to Bomb Disposal

The RESCUER project focuses on both (a) the development of an intelligent mechatronic Emergency Risk Management tool and (b) the associated Information and Communication Technology. Testing will be performed in Explosive Ordnance Disposal (EOD), Improvised Explosive Device Disposal (IEDD), and Civil Protection Rescue Mission scenarios.

RESCUER outputs will include guidance for risk management, which will extend the possible range of interventions beyond current limitations.

RESCUER will include multifunctional tools, two simultaneously working robot arms with dextrous grippers, and smart sensors for ordnance search and identification, human detection, and assessment of the environment. It will be mounted on an autonomous vehicle. Advanced information and communication facilities will lead to an improvement of emergency risk management. The detailed objectives are:

- To develop an interface tool between the Emergency Risk Management Monitoring and Advising System and the existing database systems for IEDD/EOD and Emergency Risk Management.

- To improve risk management by using new mechatronic & intelligent methods for bomb disposal and rescue operations & IT techniques for the management of rescue missions.

- To propose, develop and improve the risk management of IEDD/EOD and rescue operations through an Emergency Risk Management Monitoring and Advising System.

- To apply and combine advanced and intelligent sensing techniques for the detection of explosives, chemical, biological and radioactive materials, and human bodies.

- To develop advanced rescue planning methods and human-machine interface techniques for secure IEDD/EOD and rescue operations.

- To design, build, and test a two-arm intelligent mechatronic system, called RESCUER, for secure mobile support to IEDD/EOD, rescue operations, and emergency risk management.

- To promote the acceptance of RESCUER for improved risk management in anti-terrorist and rescue operations among civil protection authorities.

DUTh was responsible for the following key tasks:

- Implementation of a 4-d.o.f stereoscopic head.

- Real-Time wireless transmission and playback of video streamed from the stereoscopic vision system.

- Real-Time teleoperation of the 4-d.o.f stereoscopic head.

- Real-Time electronic image stabilization.

View Finder

- Vision and Chemiresistor Equipped Web-connected Finding Robots

In the event of an emergency due to a fire or other crisis, a necessary but time-consuming prerequisite, which could delay the actual rescue operation, is to establish whether the ground can be entered safely by human emergency workers.

The objective of the VIEW-FINDER project is to develop robots whose primary task is gathering data. The robots are equipped with sensors that detect the presence of chemicals; in parallel, image data is collected and forwarded to an advanced base station.

DUTh is providing the project with state-of-the-art stereo algorithms for use in:

- Autonomous Robot Navigation

- Obstacle Avoidance

- 3D Scene Map Generation

DUTh is also providing the project with stereo video signal compression and wireless streaming.