The University of Texas at Austin

- Offer Profile

- I am the founder and director of the Learning Agents Research Group (LARG) within the Artificial Intelligence Laboratory in the Department of Computer Science at The University of Texas at Austin.

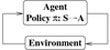

My main research interest in AI is understanding how we can best create complete intelligent agents. I consider adaptation, interaction, and embodiment to be essential capabilities of such agents. Thus, my research focuses mainly on machine learning, multiagent systems, and robotics. To me, the most exciting research topics are those inspired by challenging real-world problems. I believe that complete, successful research includes both precise, novel algorithms and fully implemented, rigorously evaluated applications.

Product Portfolio

Research

- My application domains have included robot soccer, autonomous bidding agents, autonomous vehicles, autonomic computing, and social agents.

My long-term research goal is to create complete, robust, autonomous agents that can learn to interact with other intelligent agents in a wide range of complex, dynamic environments.

Current projects

Reinforcement Learning

- A large part of the lab's research is devoted to developing new reinforcement learning algorithms, with particular emphasis on scaling them up to large applications.

Multiagent Systems

- One main theme of the lab is the study of interactions among independent autonomous agents (including robots), be they teammates, adversaries, or neither. Some of our research on this topic contributes to and makes use of game theory.

Robot Soccer

- One of the main application domains used throughout the lab is robot soccer, both in simulation and on real robots. We have won multiple RoboCup championships.

Trading Agents

- Another main application domain is autonomous trading agents, including supply chain management, ad auctions, and mechanism design. We have won multiple Trading Agent Competitions.

Autonomous Traffic Management

- We introduced a novel, efficient multiagent mechanism for future autonomous vehicles to navigate intersections.

Autonomous Driving

- We have a full-size autonomous vehicle that we use to study autonomous driving in the real world.

Teaching an Agent Manually via Evaluative Reinforcement (TAMER)

- The TAMER project seeks to create agents which can be effectively taught behaviors by lay people using positive and negative feedback signals (akin to "shaping" by reward and punishment in animal training).

Robot Vision

- We develop algorithms suitable for real-time visual sensing of the physical world on mobile robots.

Past projects

Transfer Learning

- We have developed algorithms for transferring knowledge from a previously learned task to a similar, but different, new learning task. We focus particularly on reinforcement learning tasks.

Learned Robot Walking

- We enabled an Aibo robot to learn to walk faster than was previously possible.

General Game Playing

- We participated successfully in the first few general game playing competitions.

Autonomic Computing

- We are developing machine learning approaches for computer systems applications.

Social Agents

- We finished in 2nd place in the 2007 RoboCup@Home competition.

Developmental Robotics

- We have developed methods for robots to autonomously discover models of their own sensors and actuators.

Predictive State Representations

- We have contributed to the literature on representing an agent's state entirely via predictions of its future sensations as a function of its possible actions. With such a representation, the agent does not need to reason explicitly about objects.

Layered Learning

- My Ph.D. thesis introduced a general hierarchical machine learning paradigm by which complex tasks can be learned via several interacting learned layers.

Planning

- My first research as a Ph.D. student was in the area of classical AI planning. Some of our current research falls in the area of modern planning and scheduling.