H05: Universities and Research in Robotics

Chemnitz University of Technology

- Offer Profile

- Professorship Artificial Intelligence

Associated with the Bernstein Center for Computational Neuroscience Berlin

Professor: Prof. Dr. Fred Hamker

My research group pursues a model-driven approach to exploring visual perception and cognition. Research in visual perception has by now accumulated a large body of experimental data.

Product Portfolio

Professorship Artificial Intelligence

- My research group pursues a model-driven approach to exploring visual perception and cognition. Research in visual perception has by now accumulated a large body of experimental data. Because the underlying processes have turned out to be very complex and the available data elude a simple interpretation, more research is needed to establish a theoretical basis in the form of neurocomputational models. The models we are interested in try to capture the temporal dynamics of the essential mechanisms and processes in the brain, primarily at the level of a population code. Our research goals are threefold. i) We want to link different experimental observations in a single model to work out common, essential mechanisms. ii) We test experimental predictions of the model either in our group or through collaborations. We expect that models addressing higher functions as part of different brain areas will increasingly guide research. iii) The validity of the models is also tested by observing their performance on real-world tasks, such as object/category recognition. We are confident that this neurobiological approach offers high potential for future computer vision solutions.

Peri-saccadic space perception

- When we look at a scene, we feel that we perceive the visual world in all its detail and richness. What leads us to the experience of visual space, and how do we integrate perception across eye movements? Studies using briefly flashed stimuli have revealed changes in perception even prior to saccade onset, such as a compression of visual space. However, neither the neural mechanisms responsible for this 'compression' nor its role in perception have been revealed. We have developed a neurocomputational model to further guide research in this area.

The model (see Hamker et al., PLOS Comp. Biol, in press) consists of two layers. Layer 1 encodes the presented stimulus position as an active population in a cortical coordinate system, taking receptive field (RF) size and cortical magnification into account. This population is distorted by an oculomotor or attentive feedback signal. The pooled, distorted population is represented in Layer 2 and used to determine the perceived position.
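The two-layer idea can be illustrated with a toy one-dimensional sketch. It is not the published model: the Gaussian tuning curves, the multiplicative form of the feedback, the centre-of-mass readout, the function names and all parameter values are assumptions chosen for illustration, and cortical magnification is left out.

```python
import math


def gaussian(x, mu, sigma):
    """Unnormalised Gaussian tuning curve."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2)


def pool(values, radius=2):
    """Sum each cell with its neighbours within `radius` (assumed pooling)."""
    n = len(values)
    return [sum(values[max(0, i - radius):min(n, i + radius + 1)])
            for i in range(n)]


def perceived_position(stimulus, target, gain=2.0, rf_sigma=3.0, fb_sigma=6.0):
    """Decode a flashed stimulus position under feedback centred on `target`."""
    # Preferred positions of the model cells, from -60 to 60 degrees.
    centres = [i * 0.5 for i in range(-120, 121)]
    # Layer 1: population response to the flashed stimulus.
    layer1 = [gaussian(c, stimulus, rf_sigma) for c in centres]
    # Feedback: multiplicative gain, strongest at the saccade target (assumed).
    distorted = [r * (1.0 + gain * gaussian(c, target, fb_sigma))
                 for r, c in zip(layer1, centres)]
    # Layer 2: pooled, distorted population.
    layer2 = pool(distorted)
    # Readout: centre of mass of the Layer 2 activity (assumed decoder).
    return sum(r * c for r, c in zip(layer2, centres)) / sum(layer2)
```

In this sketch, calling `perceived_position(10.0, 20.0)` and `perceived_position(30.0, 20.0)` yields decoded positions that both lie closer to the target at 20 than the true stimulus positions, a toy analogue of compression. With `gain=0.0` the decoded position matches the stimulus.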

Attention

- Vision provides a rich collection of information about our environment. The difficulty in vision arises because this information is not obvious in the image; it has to be actively constructed. Whereas earlier algorithms favored a bottom-up approach, which converts the image into an internal representation of the world, more recent algorithms search for alternatives and develop frameworks that make use of top-down connections. Following the latter line of research, we have outlined that perception is an active process: planning stages in the frontal areas modify perception in early stages to construct the needed information from the environmental input. This research resulted in a novel approach termed ''population-based inference''. Predictions of the model are tested experimentally. A large-scale model has been demonstrated on natural scene perception.

(a) Outline of the minimal set of interacting brain areas. Our model areas are restricted to elementary but typical processes and do not replicate all features of these areas. The arrows indicate known anatomical connections between the areas that are relevant to the model. The area that sends feedforward input into the model is not explicitly modeled. The labels in the boxes denote the implemented areas. (b) Sketch of the simulated model areas. Each box represents a population of cells. The formation of those populations is a temporal dynamical process. Bottom-up (driving) connections are indicated by a yellow arrow and top-down (modulating) connections by a red arrow. The two boxes in V4 and other areas indicate that we simulate two dimensions (e.g. ''color'' and ''form'') in parallel. The FEF pools across dimensions.
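The difference between driving and modulating connections can be made concrete with a small sketch. This is an assumed toy formulation, not the equations of the model: bottom-up input sets the response, the top-down signal only scales it, and the function name `population_response` and the gain value are hypothetical.

```python
def population_response(bottom_up, top_down, gain=1.5):
    """Scale driving input by a top-down gain, then normalise the peak to 1."""
    # Modulation is multiplicative: a cell without bottom-up input stays silent.
    modulated = [b * (1.0 + gain * t) for b, t in zip(bottom_up, top_down)]
    peak = max(modulated)
    return [m / peak for m in modulated] if peak > 0 else modulated


# Two equally strong features are present; the template favours the first.
# The third feature is expected top-down but absent from the input.
bottom_up = [0.6, 0.6, 0.0]
top_down = [1.0, 0.0, 1.0]
response = population_response(bottom_up, top_down)
```

Here the attended feature wins the competition, while the third cell remains at zero because feedback alone does not drive it.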

Category/Object recognition

- Building on our earlier research into the concept of perception as active pattern generation, we aim to combine attention and object recognition in a single interconnected network. We will demonstrate the performance on object recognition in natural scenes and provide a significant step towards the understanding of vision as a constructive process. Learning feedforward and feedback weights will result in model cells that, with increasing level in the hierarchy, encode larger portions of the visual field and more complex stimulus properties. Feedback helps to resolve ambiguities and to reveal visual details. This project is funded by the DFG.

A model of the ventral pathway and the frontal eye field for attention and object recognition. Different feature maps (color and orientation) are obtained from the image, and each feature at each location is represented with its conspicuity by a population code. Learned feedforward weights W and feedback weights A connect the areas with each other.

Cognitive control of visual perception

- Our earlier research has formalized perception as an active, top-down directed inference process in which a target template is learned and maintained by areas involved in task coordination. The learning of appropriate templates and their activation in time, termed the cognitive guidance of vision, will be achieved in a reward-based scenario. In this respect, we aim to develop a model of how prefrontal cortex and subcortical structures interact to generate target templates in time and thus guide the vision process. This research project is supported by the DFG.

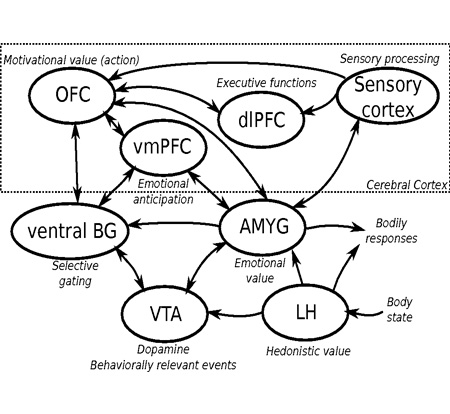

A) Functional sketch of the model. We propose that solutions for visual perception must flexibly consider prior knowledge. Prior knowledge can either guide vision towards objects of interest or determine the aspects in a visual scene that remain in memory. In both cases a prospective signal (which we also call a target template) is used to enhance the representation of the relevant input. In a visual search situation this top-down signal guides vision through top-down connections which have to be learned. In other cases this signal determines the relevant aspects of a scene which have to be bound towards the present task.

B) Outline of how this model for attention, object recognition and category learning is implemented in the brain. The visual (red) part implements match detection and visual selection. The visual-cognitive (blue) part ensures the learning and activation of the correct template in time. For simplicity, some areas in the ventral pathway are considered at a comparable level and described by a single map (e.g. V1/V2). The connections among the areas are typically bi-directional.

Masking and Conscious Perception

- We examine dynamical aspects of conscious visual perception related to briefly presented stimuli and their possible neural underpinnings. The idea that the formation of an object is central to visual perception is strongly supported by findings from backward masking. In particular, we suggest that consciousness is related to the formation of closed thalamo-cortical loops mediated by the basal ganglia.

ANNarchy (Artificial Neural Networks architect)

ANNarchy is a simulator for distributed mean-rate or spiking neural networks. The core of the library is written in C++ and provides an interface in Python. The current development version is 3.0 and will soon be released under the GNU GPL.

ANNarchy is designed to simulate distributed and biologically plausible neural networks, which means that neurons only have access to local information through their connections to other neurons, not to global information such as the state of the entire network or the connections of other neurons. In principle, biologically implausible mechanisms like back-propagation and winner-take-all are therefore not well suited to this simulator.

Architecture

ANNarchy is specifically oriented towards the learning capabilities of neural networks. The main object, annarNetwork, is a collection of interconnected heterogeneous populations of artificial neurons (annarPopulation). Each population comprises a set of similar artificial neurons, annarNeuron, whose activation is governed by differential equations. The activation of a neuron depends on the activation of the other neurons in the network from which it receives connections (through synapses, annarWeight).
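To illustrate what "activation governed by differential equations" can look like, the following sketch integrates a leaky mean-rate neuron with the forward Euler method. It does not use the ANNarchy API: the class `RatePopulation`, the equation tau * dr/dt = -r + input, the rectification of the input and all parameter values are assumptions made for this example.

```python
class RatePopulation:
    """A set of similar leaky rate neurons (hypothetical, not annarPopulation)."""

    def __init__(self, size, tau=10.0):
        self.tau = tau              # time constant, in the same unit as dt
        self.rates = [0.0] * size   # one firing rate per neuron

    def step(self, inputs, dt=1.0):
        """Advance tau * dr/dt = -r + max(input, 0) by one Euler step."""
        self.rates = [r + dt / self.tau * (-r + max(x, 0.0))
                      for r, x in zip(self.rates, inputs)]
        return self.rates


pop = RatePopulation(size=2)
for _ in range(200):
    pop.step([1.0, 0.0])   # constant input to the first neuron only
```

With a constant input, the rate of the first neuron relaxes towards that input, while the second neuron stays at zero.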

The connections received by a neuron are stored in different arrays, depending on the type that was assigned to them: realistic neurons do not integrate equally all their inputs, but differentially process them depending on their neurotransmitter type (AMPA, NMDA, GABA, dopamine...), position on the dendritic tree (proximal/distal) or even region of origin (cortical columns do not treat thalamic inputs the same way as long-distance cortico-cortical connections). Each type of connection can be integrated separately to modify the activation of a neuron.
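A minimal sketch of this typed organization, under stated assumptions: afferents are plain tuples, each type is summed separately, and the way the typed sums are combined (excitation scaled by a dopamine term, minus inhibition) is purely illustrative. None of the names below belong to the ANNarchy API.

```python
def integrate_inputs(afferents):
    """Sum weighted presynaptic rates separately for each connection type."""
    totals = {}
    for conn_type, weight, pre_rate in afferents:
        totals[conn_type] = totals.get(conn_type, 0.0) + weight * pre_rate
    return totals


def net_drive(totals):
    """Combine the typed sums into one rectified drive (assumed combination)."""
    excitation = totals.get("AMPA", 0.0) + totals.get("NMDA", 0.0)
    inhibition = totals.get("GABA", 0.0)
    modulation = 1.0 + totals.get("dopamine", 0.0)
    return max(modulation * excitation - inhibition, 0.0)


# Each afferent is (connection type, weight, presynaptic rate).
afferents = [
    ("AMPA", 0.5, 1.0),
    ("AMPA", 0.25, 1.0),
    ("GABA", 0.25, 1.0),
    ("dopamine", 1.0, 0.5),
]
totals = integrate_inputs(afferents)
drive = net_drive(totals)
```

Keeping one sum per type means a neuron model can weight, gate or ignore each input class independently instead of collapsing all afferents into a single total.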

This typed organization of afferent connections also makes it easy to apply different learning rules to them (Hebbian, anti-Hebbian, dopamine-modulated, STDP...). A learning rule is defined in the annarLearningRule class and can be reused in different networks.
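As an example of a reusable, purely local learning rule, the sketch below implements Oja's variant of Hebbian learning, which only needs the presynaptic rate, the postsynaptic rate and the current weight. The class `OjaRule` is hypothetical and is not the annarLearningRule class; the learning rate and the linear neuron are assumptions.

```python
class OjaRule:
    """Hebbian learning with Oja's normalising decay term."""

    def __init__(self, eta=0.1):
        self.eta = eta  # learning rate

    def update(self, weight, pre, post):
        """Return the new weight using local quantities only."""
        return weight + self.eta * post * (pre - post * weight)


rule = OjaRule()
weights = [0.1, 0.1]
pre = [1.0, 0.0]   # only the first input is ever active
for _ in range(500):
    # Linear postsynaptic neuron: weighted sum of its inputs.
    post = sum(w * p for w, p in zip(weights, pre))
    weights = [rule.update(w, p, post) for w, p in zip(weights, pre)]
```

The weight of the active input converges to 1 and the weight of the silent input decays towards 0. Because the rule object holds no reference to a particular network, the same instance can be applied to any set of connections.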

A class representing the external world, annarWorld, allows the network to interact with its environment in an input/output manner (retrieving input images, performing actions...)

Simulation of neuronal agents in virtual reality

- The goal of this research project is to simulate integrative cognitive models of the human brain as developed in other projects to investigate the performance of cognitive agents interacting with their environment in virtual reality. Each agent has human-like appearance, properties and behavior. Thus, this project establishes a transfer of brain-like algorithms to technical systems.

System

The neuronal agents and their virtual environment (VR) are simulated on a distributed and specialized device. The agents have all the main abilities of a human: they are able to execute simple actions like moving or jumping, to move their eyes and heads, and to show emotional facial expressions. Agents learn their behavior autonomously based on their actions and the sensory consequences of those actions in the environment. For this purpose, the VR engine contains a rudimentary action and physics engine. Small movements (like stretching the arm) are animated by the VR engine, while the neuronal model controls high-level action choices like grasping a certain object.

To investigate the interactions of the neuronal agents with human users, the world will include user-controlled avatars. Users will receive sensory information through appropriate VR interfaces; for example, visual information will be provided by a projection system. Users will also be able to interact with the environment; the necessary movement information will be gathered by tracking their faces and limbs. Face tracking is used in particular to detect the emotions of the user, in order to investigate the emotional communication between humans and neuronal agents.

Technically, the device consists of several subparts: a virtual reality engine, a neurosim cluster simulating the agents' brains, and an immersive projection system to map the human users to avatars. The cluster itself will be able to simulate several neuronal models in parallel, which allows us to use multi-agent setups. The cluster will consist of NVIDIA CUDA acceleration cards (hardware layer) and the neuronal simulator framework ANNarchy (software layer).

Emotions

- Classical theories systematically opposed emotion and cognition, suggesting that emotions perturb the normal functioning of rational thought. Recent progress in neuroscience highlights, on the contrary, that emotional processes are at the core of cognitive processes: they direct attention to emotionally relevant stimuli, favor the memorization of external events, evaluate the association between an action and its consequences, bias decision making by allowing the motivational value of different goals to be compared and, more generally, guide behavior towards fulfilling the needs of the organism.